In this Article

A free spreadsheet application, Google Sheets, is widely used in workplaces for data management, reporting, and collaboration. If you’ve ever needed to track product prices, monitor competitors, or gather research data, you’ve maybe already had a chance to work with this web-based app.

In this guide, you’ll get info about Google Sheets’ main functions, like IMPORTXML, IMPORTHTML, and others, errors that may occur during the process, and practical tips.

Google Sheets is Perfect for Web Scraping, why?

In general, Google Sheets isn’t a typical tool for scraping data. However, there are valid reasons to give it a go. Many people turn to programming languages and coding libraries for web scraping. That’s why it is one of the most popular search queries on Google. If you need to gather specific data but don’t want to be overwhelmed by learning new skills, then Google Sheets may be a great assistant to save your time. That is one of the reasons why it’s worth considering.

Next, with just one account, you can access a wide array of Google services. Calendar, Gmail, Docs, Drive – all of them create an integral Google ecosystem where everything just clicks. You can simply store, share, and use your data across all platforms: scrape data with Google Sheets, back it up in Google Drive, and keep your tasks on the move.

Google Sheets is easy to use. The top menu has quick options for things like File, Edit, and View. The toolbar helps you undo, redo, and format your data. You can see the formulas or values in the formula bar. Sheets tabs let you switch between different pages in the same file. The grid area is where you enter and organize your data. Seems pretty easy to get started with, right?

Plus, you can access and edit your spreadsheets from anywhere in the world. No more worrying about losing your work due to a computer crash or misplaced files. Everything is saved automatically in a secure Google Drive. With just a link, anyone you invite can view or edit the file, depending on how you’ve customized the permissions.

Key Functions for Web Scraping

Now, let’s explore the top functions for web scraping in Google Sheets. With its versatile data importing and exporting capabilities, we’ll focus on the most popular IMPORT functions to help you scrape data like a pro.

IMPORTXML: Extract data from web pages

IMPORTXML has a broad scope of use. This function is used to get structured data from web pages using XPath queries from partial fragments to the entire content. It can handle data types like XML, HTML, CSV, TSV, and RSS. The syntax looks like this:

=IMPORTXML(“URL”, “xpath_query”, “locale”)

It includes the URL of the webpage, an XPath query that specifies what you want to import, and an optional locale for language and region. Commonly used XPath queries in Google Sheets include:

- //h1, //h2, //h3: Selects heading tags (titles).

- //img/@src: Extracts image URLs.

- //ul/li: Selects list items in unordered lists.

- //p: Selects all paragraphs.

- //span: Selects all span elements.

- //div: Selects all div elements for structured content.

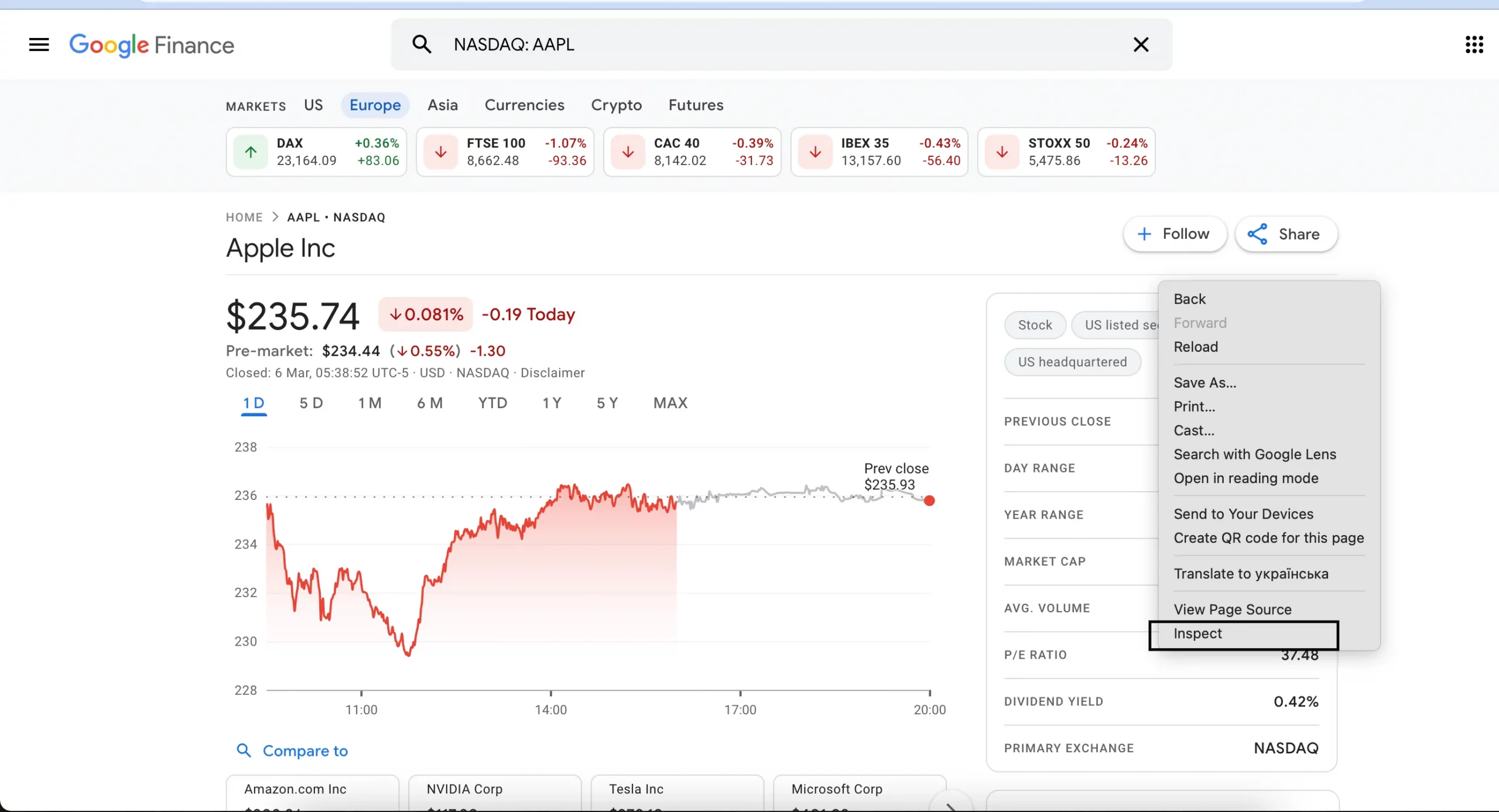

For example, let’s scrape the stock price for Apple from Google Finance. For the formula, we’ll insert the source link first.

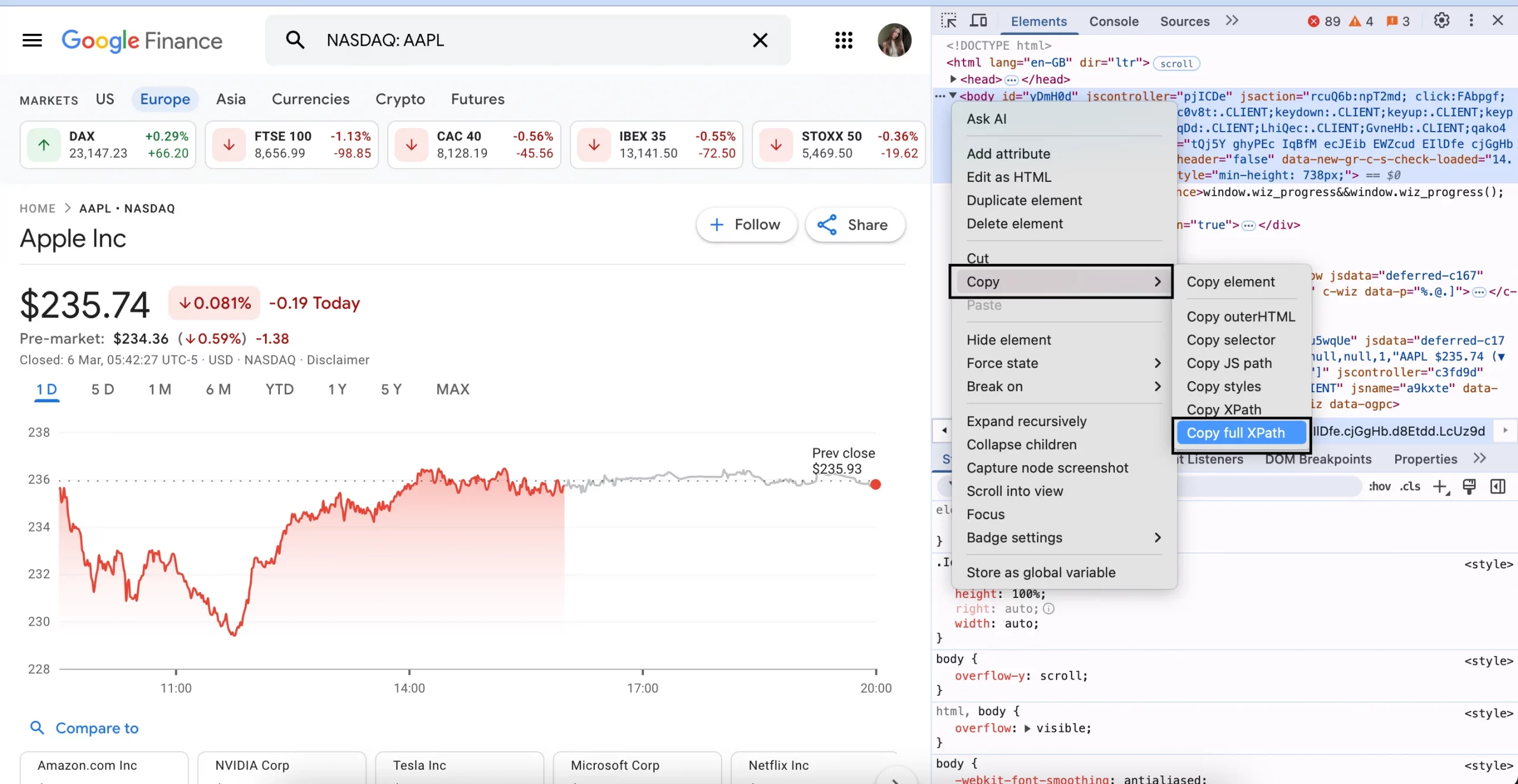

Next, we’ll have the XPath query. It navigates through the HTML structure from the root (/html/body/…) to get the price of Apple’s stock. To get the XPath, right-click on the data you want to export in the browser, select Inspect, and then copy the full XPath from the Elements tab. Use the copied XPath in the IMPORTXML function to import data from the web page.

The locale parameter is optional, but in most cases, it is used for text content in languages like en_US. The final formula will look like this:

=IMPORTXML(“https://www.google.com/finance/quote/AAPL:NASDAQ?sa=X&ved=2ahUKEwiZyb2g38r-AhUMs6QKHQOjDeEQ3ecFegQIKBAY”,”/html/body/c-wiz[2]/div/div[4]”)

In this case:

- The URL points to Apple’s stock page on Google Finance.

- The XPath query selects the element that contains the stock price.

The XPath must match the webpage structure. Always check the website’s terms of service to confirm that scraping is allowed!

IMPORTHTML: Pull data from tables and lists into HTML

Sometimes, you need structured data, like a list of cities, but available datasets may be incomplete or formatted incorrectly. Instead of manually searching and copying, you can scrape pages using Google Sheets’ IMPORTHTML function. The syntax includes a publicly accessible URL or the web page link, query (“list” or a “table”), and index (the position of the list/table on the webpage):

=IMPORTHTML(“URL”, “query”, index)

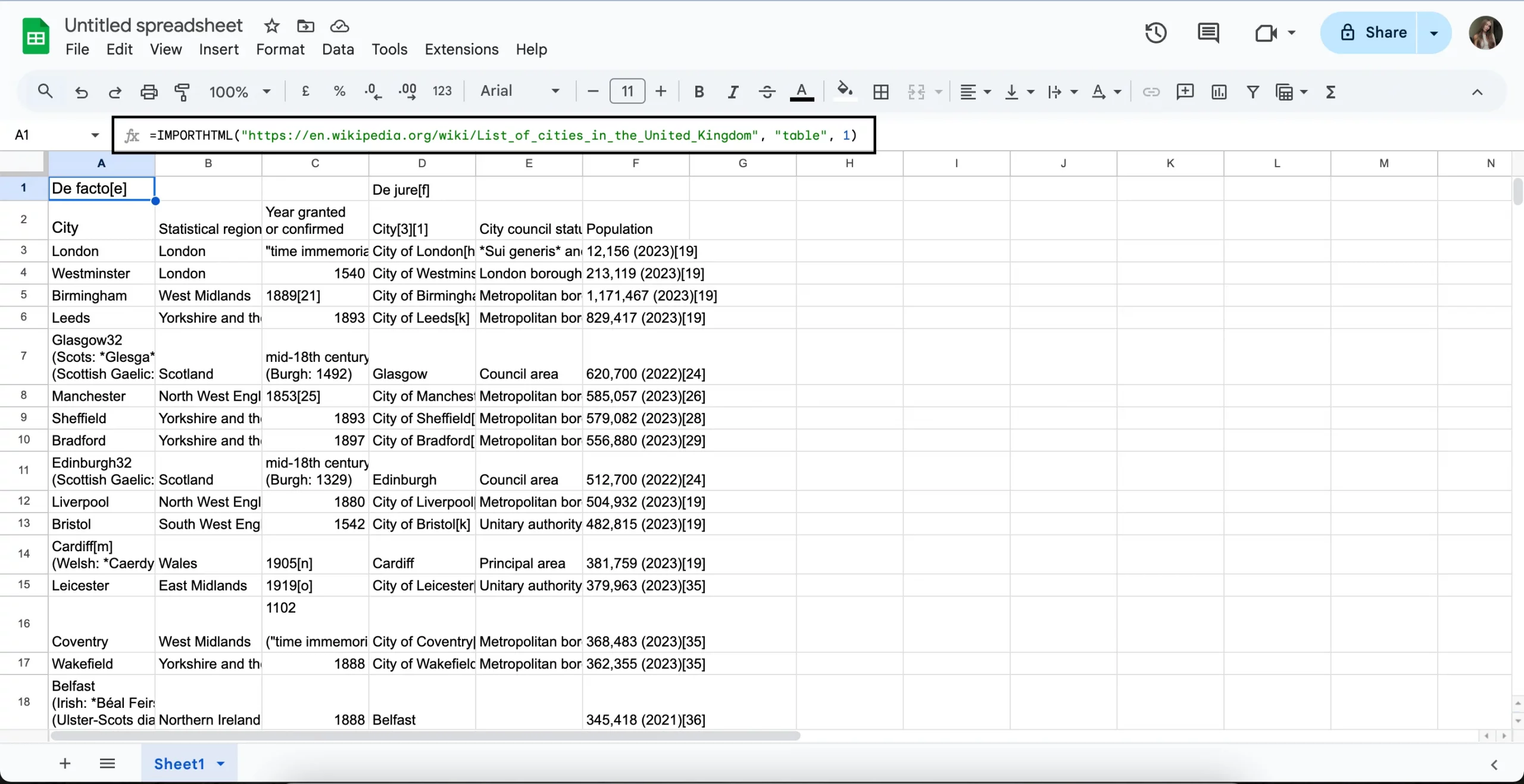

Let’s import a list of all the cities in the UK from Wikipedia. Firstly, find a webpage containing this data. Right-click anywhere on the webpage and select Inspect (press F12 or Ctrl+Shift+I on Windows, Cmd+Option+I on Mac). Navigate to the Elements tab and search for <table> tags to find the order of the table you want. In our case, the list of UK cities is in the first table on the page. Open a new Google Sheet. In a cell, enter the formula:

=IMPORTHTML(“https://en.wikipedia.org/wiki/List_of_cities_in_the_United_Kingdom”, “table”, 1)

Press Enter, and within seconds, the table will appear in your spreadsheet.

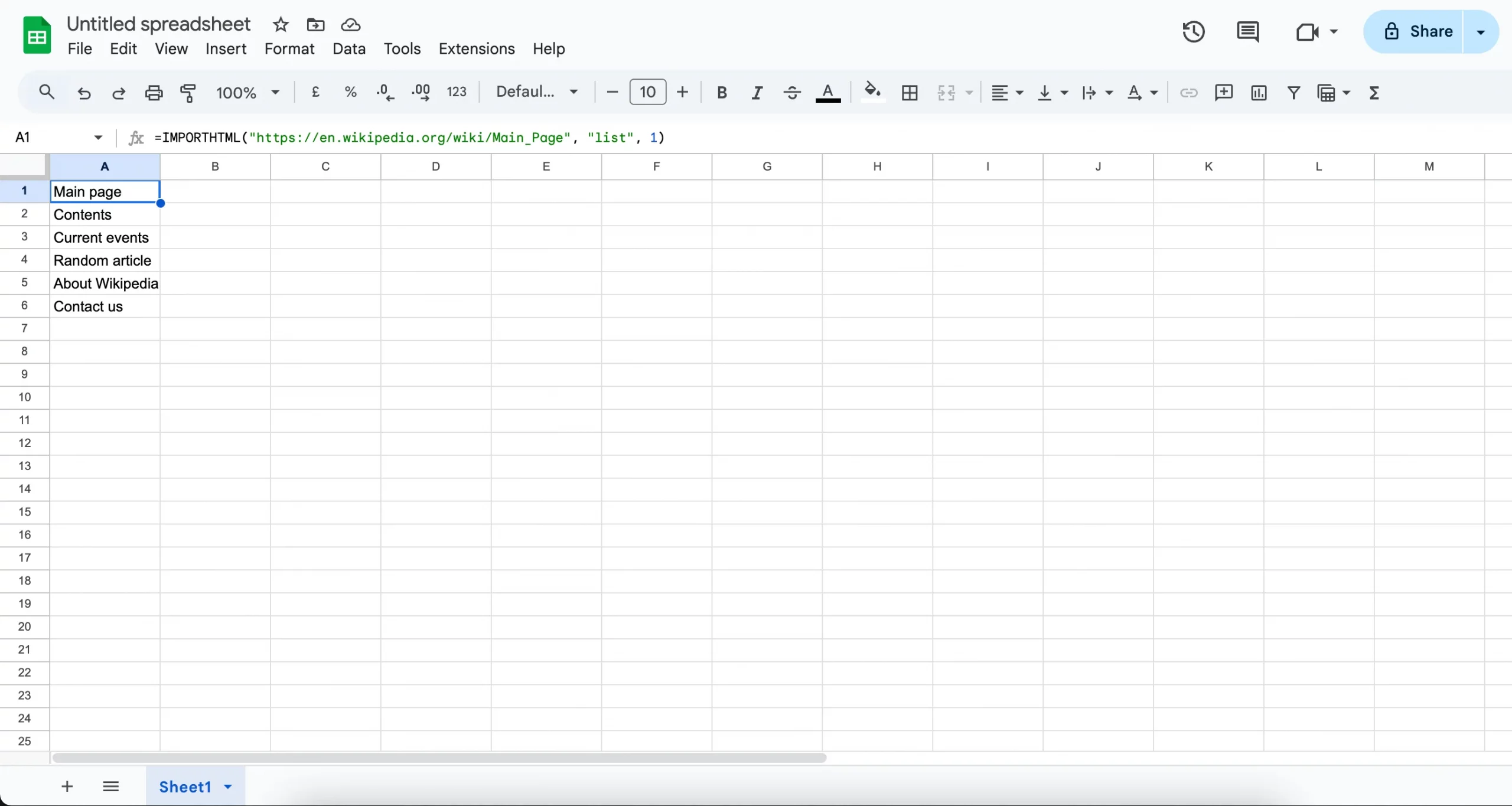

Let’s say you want to extract a website’s menu structure for quick analysis. You can use the same approach with IMPORTHTML. We’ll extract the main menu items from Wikipedia’s sidebar, which is structured as a list (<ul>…</ul>). Next, select a cell where you want the data to appear and use this formula:

=IMPORTHTML(“https://en.wikipedia.org/wiki/Main_Page”, “list”, 1)

Press Enter, and Google Sheets will automatically pull the first list (<ul>…</ul>) from the Wikipedia Main Page. You’ll see that this formula extracts the first unordered list from Wikipedia. You can adapt this method for other websites.

By using IMPORTHTML, you can quickly gather structured data, whether it’s tables of information or lists of items, without manual copy-pasting.

IMPORTDATA: Access data from CSV and TSV files

This function helps to easily import data from a URL in CSV (comma-separated value) or TSV (tab-separated value) format into a Google Sheets worksheet. The syntax of the IMPORTDATA function is:

=IMPORTDATA(“URL”)

It works only with CSV and TSV formats. The URL must be a direct link to the file. If the file requires authentication, IMPORTDATA will not work. With this function, you can import a dataset of any statistics directly into Google Sheets without manual downloading.

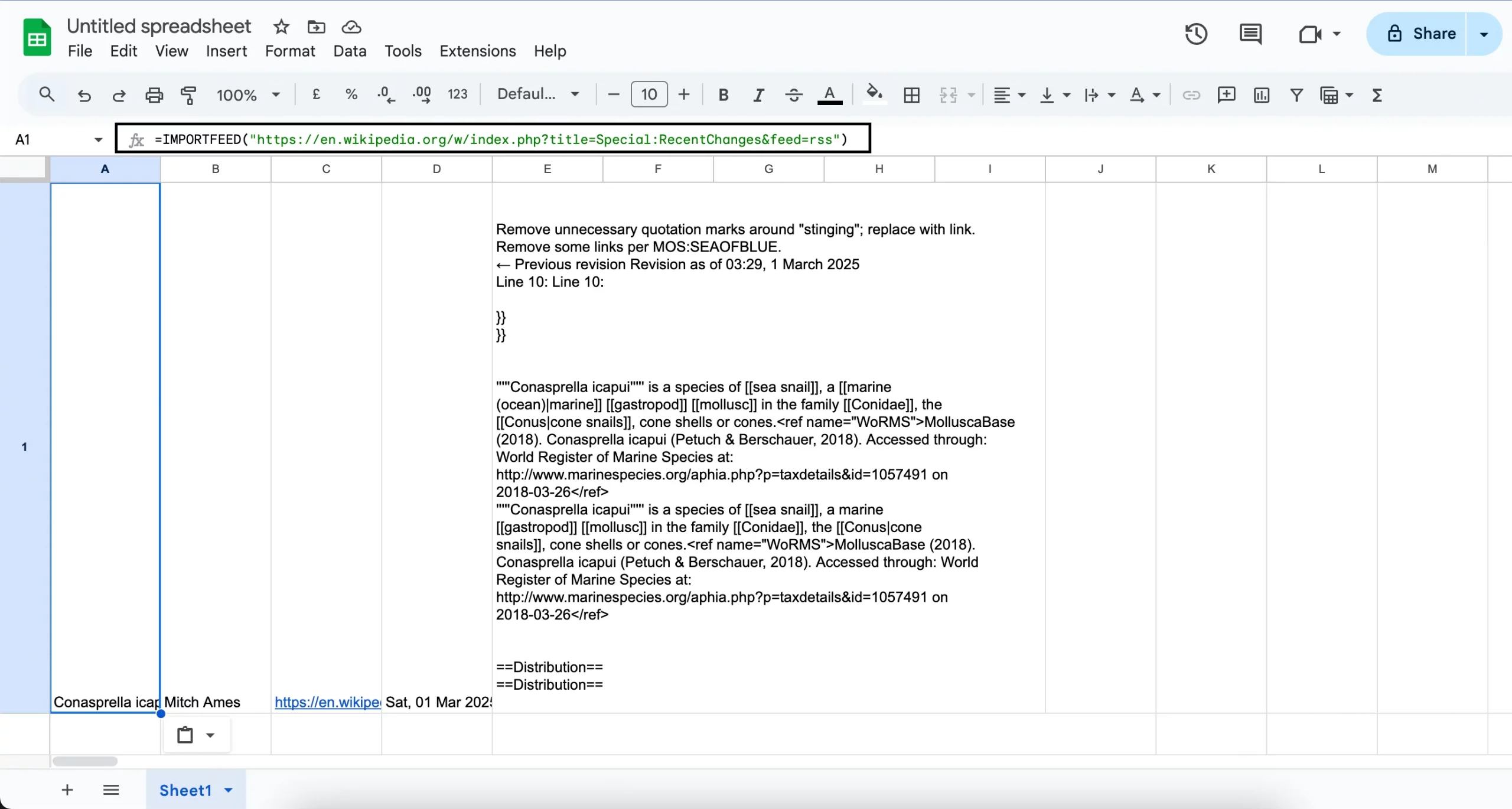

IMPORTFEED: Import RSS and ATOM feeds into Google Sheets

This function is used to import and display data from an RSS or Atom feed into a spreadsheet. Here is the syntax:

=IMPORTFEED(“url, [query], [headers], [num_items]”)

Query, headers, and the number of feed items are optional to include. In this example, we’re going to use Wikipedia’s RSS feed for recent changes. You can choose another website that has an RSS feed. Open Google Sheets and use the formula with the correct URL. This function will pull in the latest changes from Wikipedia’s feed and display them in your spreadsheet.

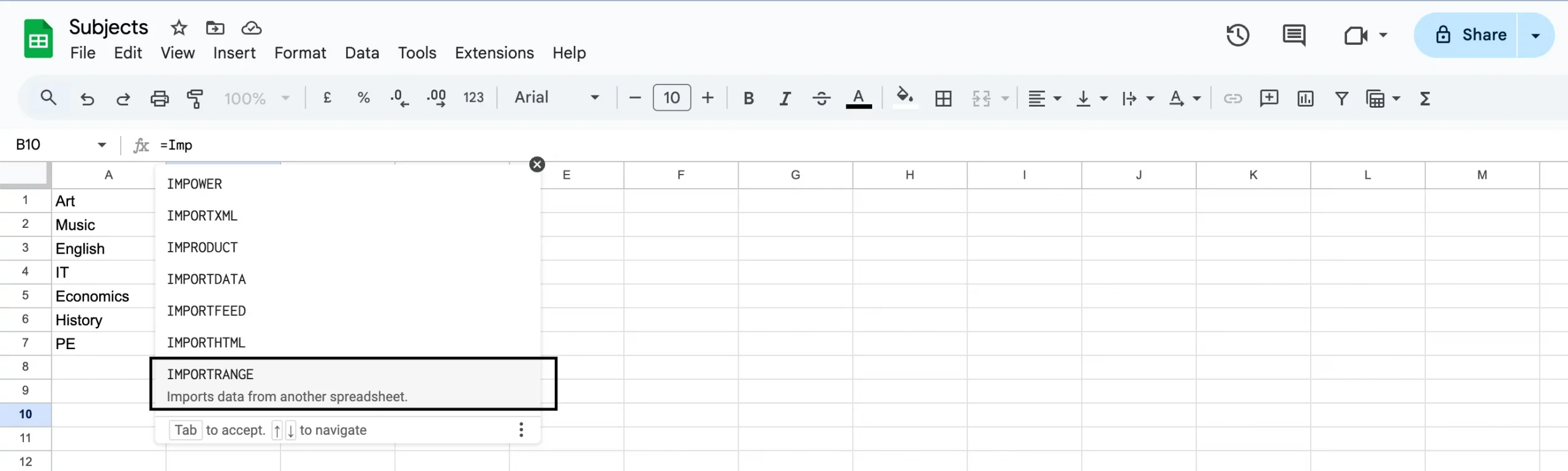

IMPORTRANGE: Link data across Google Sheets

The IMPORTRANGE function allows you to import data from one Google Sheet into another. Any changes made in the original sheet are automatically updated. The syntax is:

=IMPORTRANGE(spreadsheet_url, range_string)

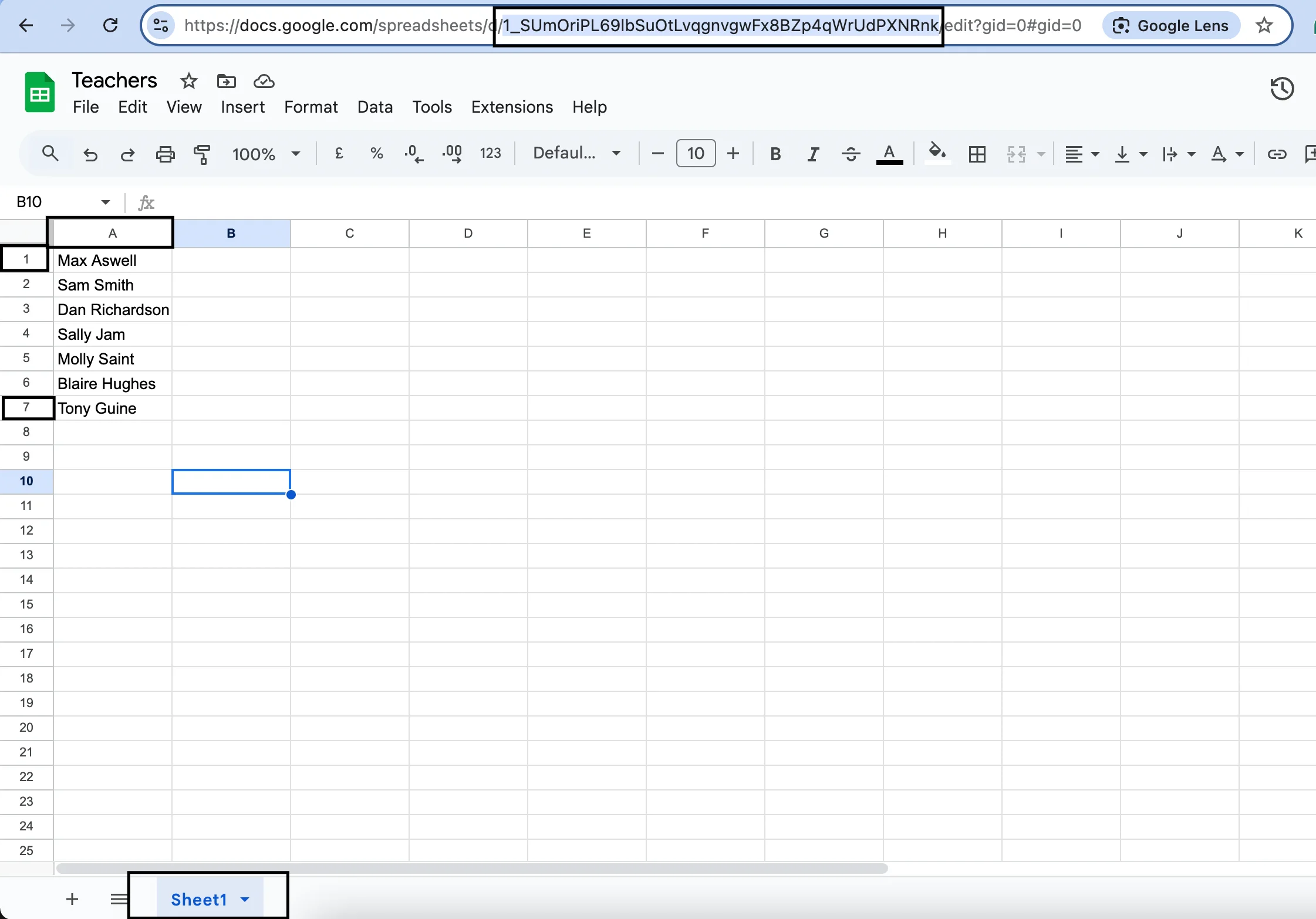

First of all, the original sheet must be accessible via a link. Also, check for case sensitivity and spaces in the name of the doc.

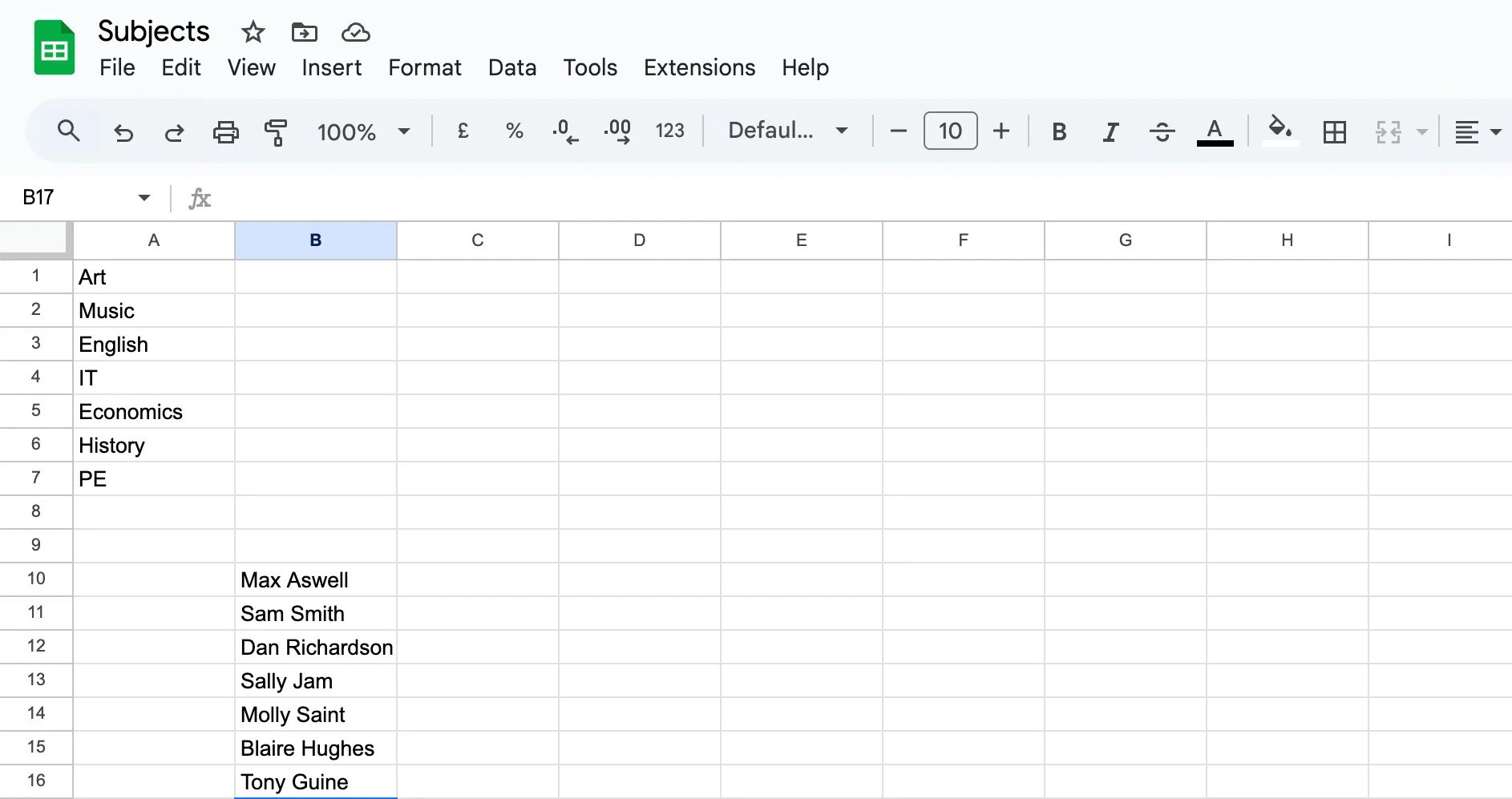

To use this function, select a blank cell and type =IMPORTRANGE(). Let’s look at the example:

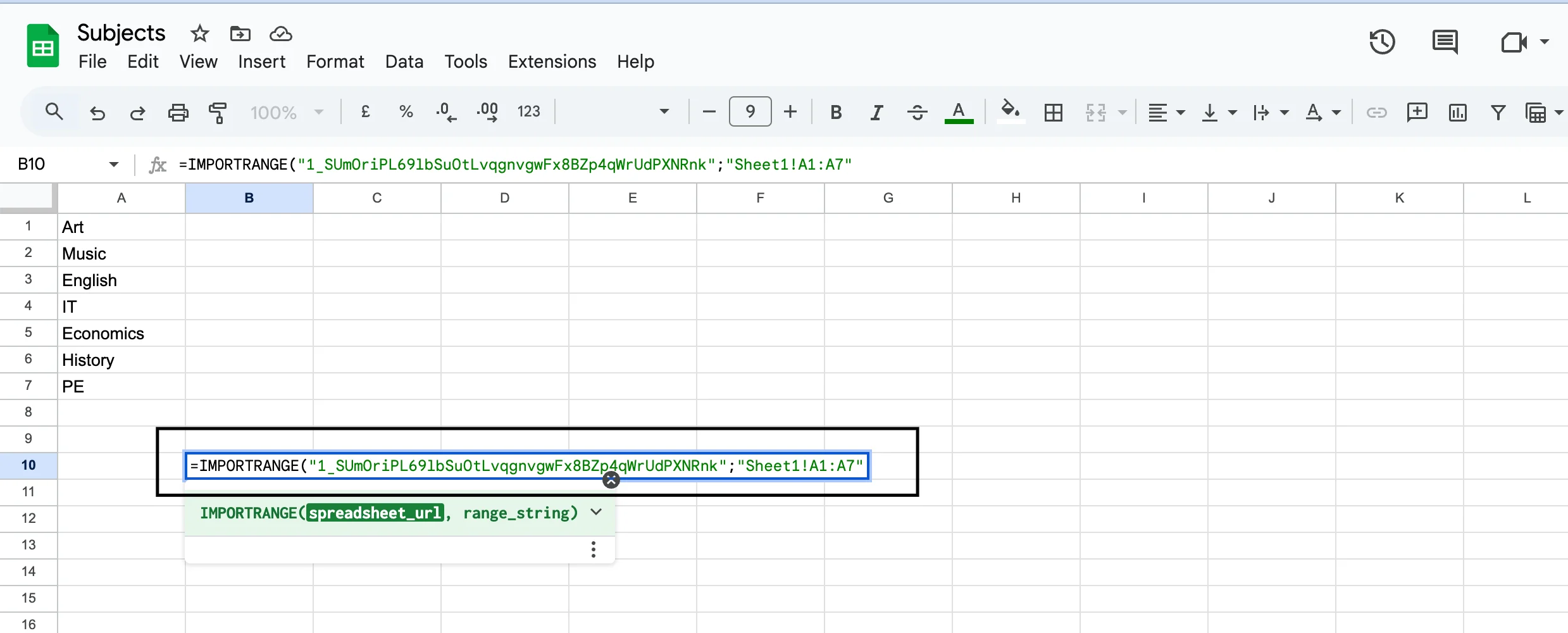

Then, insert the URL (just the code between slashes) of the spreadsheet you want to import and the range. In our case, we want to move the list of teachers to the subject list. Our teachers list range is A1:A7, where A1 is the first required cell at the top, and A7 is the last cell at the bottom.

So the final formula and the result look like this:

I am text block. Click edit button to change this text. Lorem ipsum dolor sit amet, consectetur adipiscing elit. Ut elit tellus, luctus nec ullamcorper mattis, pulvinar dapibus leo.

Errors You’re Likely to Stumble Upon

You’re really lucky if you don’t get any errors during the scraping process. Many beginners can get confused when an unfamiliar message appears. Here are some of the most common errors you might come across:

- #N/A: when a value is not available or doesn’t exist in the referenced range.

- REF!: it can be displayed when a function refers to a cell that doesn’t exist, such as when rows or columns are deleted.

- Result Too Large: it means the output is too large for the sheet to handle. Try limiting the amount of data.

- Array Result Was Not Expanded: the function’s output is probably blocked by existing data in adjacent cells.

- #VALUE!: when the input is of the wrong type (e.g., a text value when a number is expected).

Be Aware of Google Sheets’ Limitations

There is a Google Sheets cell limit. Your spreadsheet can contain only 10 million cells or 18,278 columns. Moreover, each cell in Google Sheets has its data limit. A cell can have no more than 50,000 characters. It doesn’t support all data sizes to prevent errors. Google recommends not to overuse complex functions like IMPORTXML or IMPORTDATA because it can slow down the sheet.

Google Sheets works well for scraping static data but struggles with JavaScript-loaded content. It also has rate limits, restricting the number of requests within a short time. These factors make it unsuitable for large-scale data scraping or extracting content requiring complex interactions.

Why do you need proxies for web scraping in Google Sheets? Well, if you want to ensure the smooth performance of Google Sheets, alternative addresses can help you balance the load. Using them, you can route requests through different IPs and strengthen IP reputation and access stability. At the same time, you can browse region-specific data and stay anonymous.

Google Sheets Not an Option? Try Automated Scrapers

If your project is too large for Google Sheets or the data is protected by advanced anti-scraping measures, consider using specialized web scraping tools to extract data in the short term. Here are some of these automated scrapers:

- Octoparse – a no-code web scraping app for extracting data from websites without programming skills. This scraper was made to scrape data from dynamic websites that load content via JavaScript. Octoparse offers a 14-day premium trial.

- Scrapy – a free, open-source Python framework for web crawling and data extraction. It is not cloud-based; it requires users to set up everything themselves, including proxies, CAPTCHAs, and websites that use JavaScript. This scraper may not be the best option for beginners.

- Beautiful Soup – a Python library. It simplifies navigating and modifying documents with minimal code, handles encoding automatically, and works with parsers like lxml and html5lib for flexibility and speed.

- Apify – a cloud-based web scraping platform that provides pre-built scrapers and automation. Apify offers ready-made actors (scrapers) for common websites, which makes the scraping process faster. Users can also create custom workflows with its no-code editor or API. It offers a free trial, but its pricing model may become costly depending on your usage.

However, some websites with strong anti-bot protections may still block scrapers. For better performance, use proxies and scrapers in combination.

At DataImpulse, we focus on what our clients need the most – high-quality, ethically sourced proxy services. Get turbo speed, pay per GB, 24/7 human support, personalized approach, and much more with DataImpulse proxies.

*The information provided is only for educational purposes!