
Whether you’re working on your own idea or a company project, you need data. Starting a new project or developing a strategy for an existing business usually means analyzing a large volume of data, and that analysis shapes the solution depending on the data set and the problem statement. That’s where web scraping comes into play.

A growing focus on customer behavior analysis is driving more retail businesses to adopt web scraping for data extraction. One activity every company should consider is monitoring competitors’ pricing, product availability, and customer reviews.

In this tutorial, we’ll show how to get started with web scraping in Python to analyze product data on Amazon.

Initial Setup: What to Download?

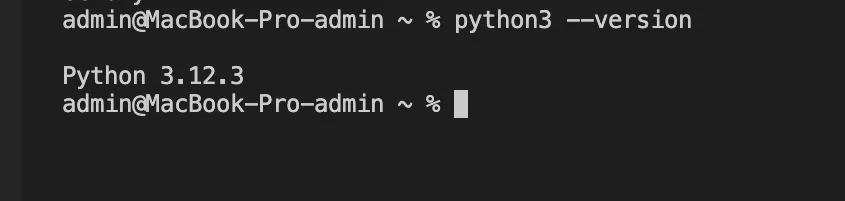

Before we start web scraping Amazon pages using Python, let’s make sure your environment is set up correctly. In case you don’t have Python 3.x installed on your computer, you can download it from https://www.python.org/.

- Visual Studio Code (VS Code)

To run all the necessary commands, you can use the terminal in Visual Studio Code (VS Code). Just download VS Code from the official website and follow the installation instructions for your operating system.

To set up your Python environment:

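- Open VS Code and install the Python extension by clicking the square Extensions icon in the sidebar and searching for Python.

To check your installation, open the terminal and ask Python for its version, for example with python --version or python3 --version. If Python isn’t installed, you’ll see an error such as not found: python. If everything works, you’ll see the current version, as shown below.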

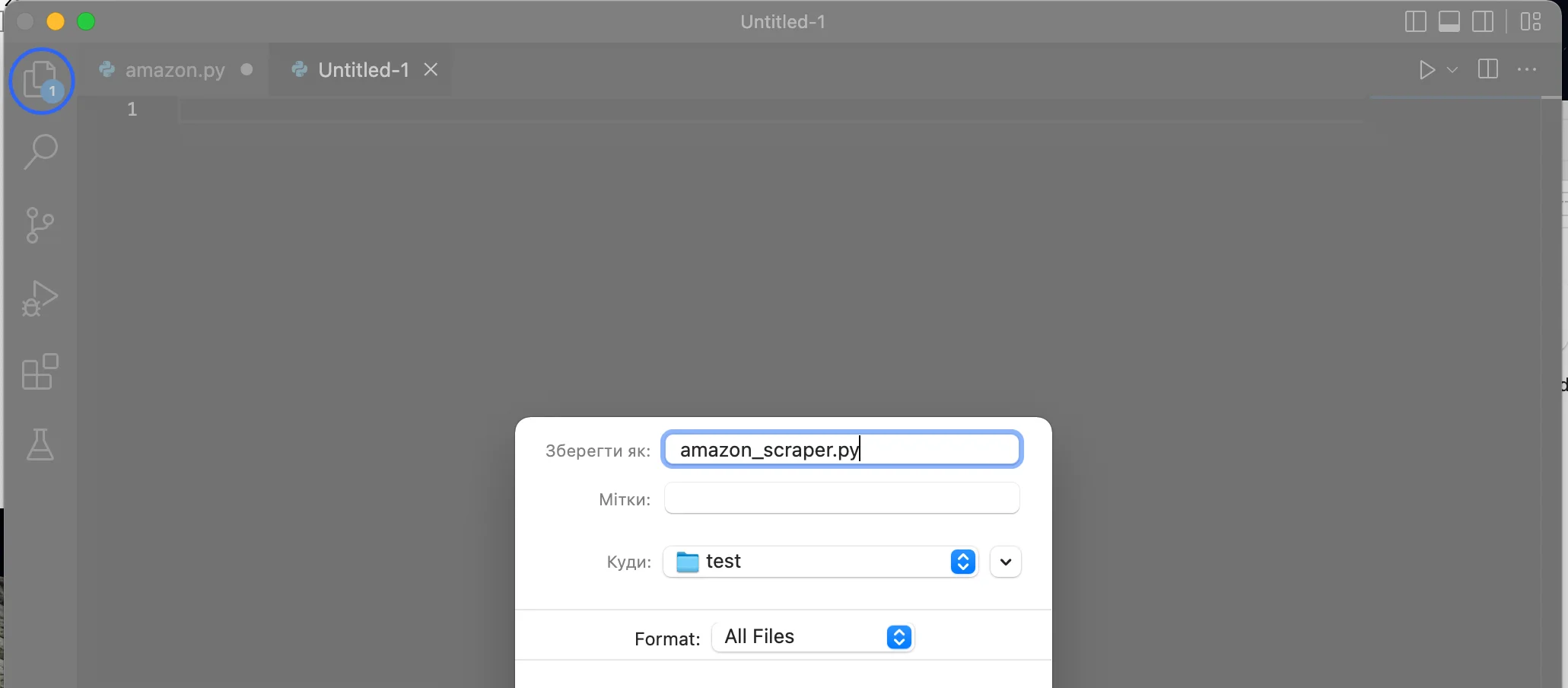

Create a New Project Folder:

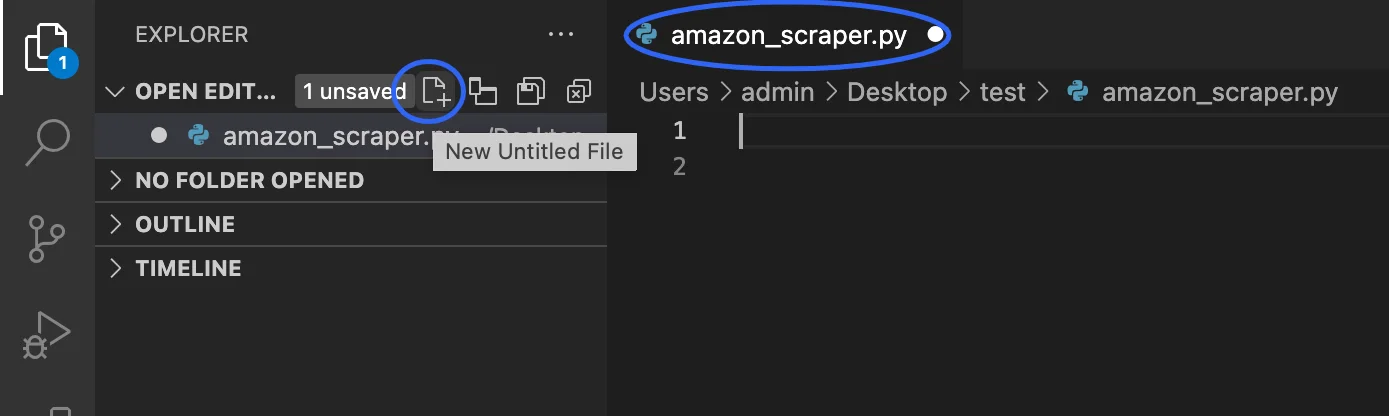

- In VS Code, open the folder where you want to create your project by selecting File – Open Folder. Create a new Python file for your script, e.g., amazon_scraper.py

Install third-party libraries for Python

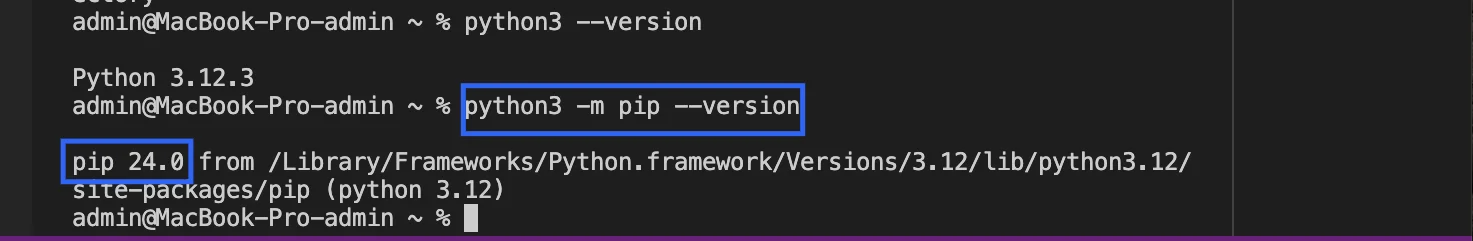

For this step, we need pip. Pip (often invoked as pip3) is the package management system for Python, used to install and manage software packages.

- Once you have the terminal open, check whether pip is already installed, for example by running pip3 --version.

*If pip is missing, you can install it with ensurepip. Type one of these commands in the terminal:

python -m ensurepip

python3 -m ensurepip

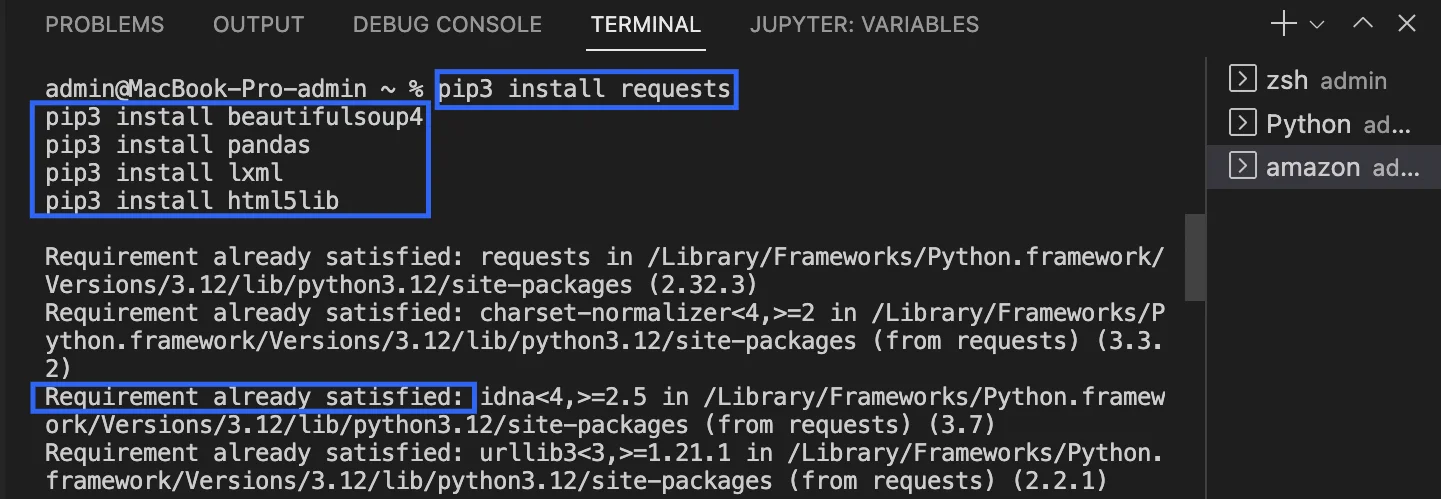

- Now, use pip to install the necessary libraries. We need “requests”, “beautifulsoup4”, “pandas”, “lxml” and “html5lib”.

pip3 install requests

pip3 install beautifulsoup4

pip3 install pandas

pip3 install lxml

pip3 install html5lib

Why do we need these Libraries?


- Requests: with this library, we can make HTTP connections to the Amazon page. It will help us extract the raw HTML content from the target page.

- Beautiful Soup: this powerful tool helps us pull the required data out of the raw HTML retrieved with the Requests library.

- Pandas: used for data manipulation and analysis. You can organize scraped data into DataFrames for efficient analysis and export it to formats like CSV.

- lxml: a powerful library for fast parsing of XML and HTML documents.

- html5lib: a pure-Python library for parsing HTML that follows the HTML5 specification.
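Before moving on, it can help to confirm that all five libraries are importable. The sketch below is one possible check that uses only the standard library. The helper name missing_modules is made up for this example. Note that the beautifulsoup4 package is imported under the name bs4.

```python
from importlib.util import find_spec

# Import names of the libraries installed above.
# Note: the beautifulsoup4 package is imported as "bs4".
REQUIRED_MODULES = ['requests', 'bs4', 'pandas', 'lxml', 'html5lib']


def missing_modules(module_names):
    """Return the names from module_names that cannot be found for import."""
    return [name for name in module_names if find_spec(name) is None]


missing = missing_modules(REQUIRED_MODULES)
if missing:
    print('Missing modules:', ', '.join(missing))
else:
    print('All required modules are installed.')
```

If anything is reported as missing, install it with pip3 as shown above and run the check again.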

Scraping elements of a product

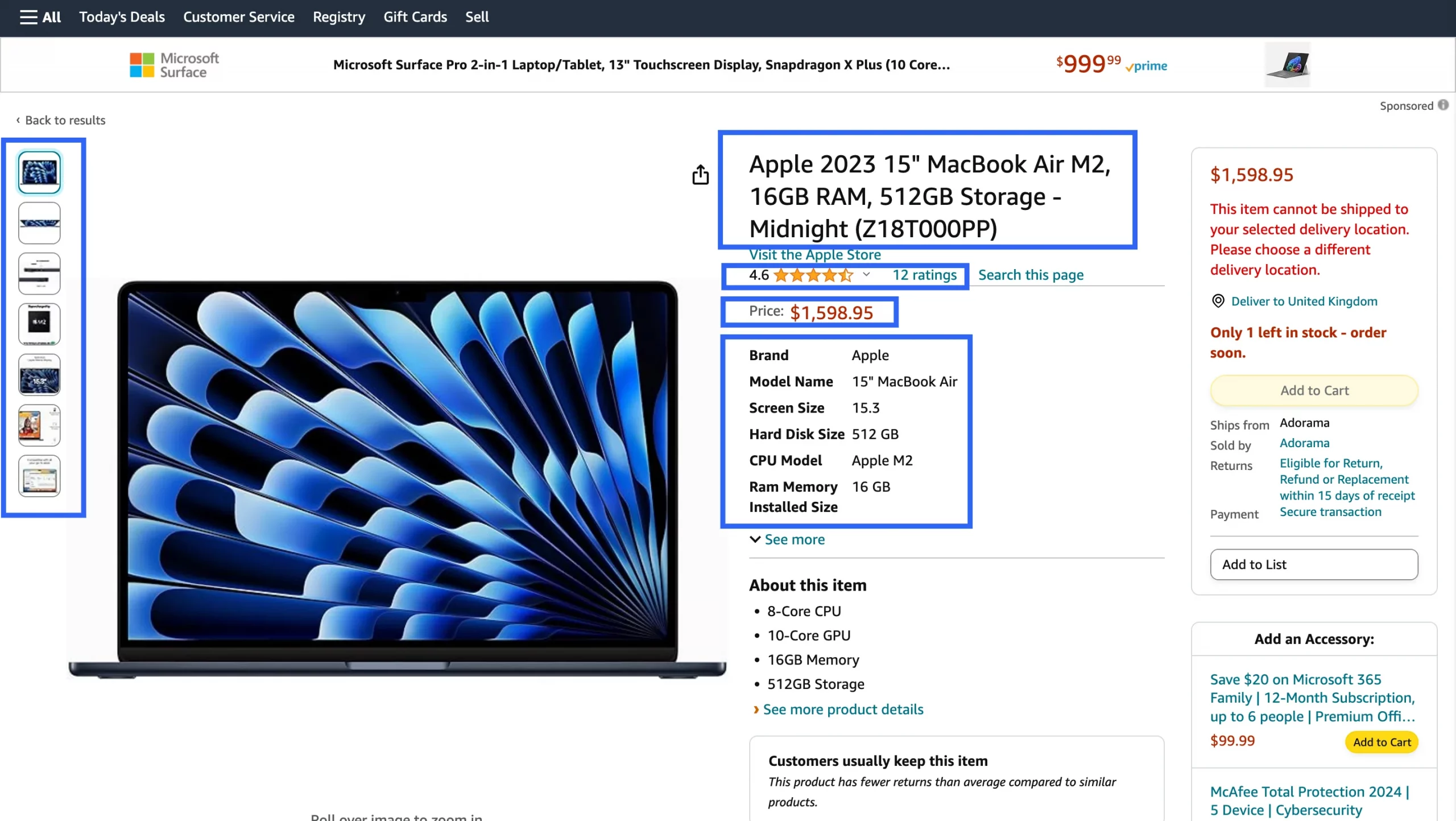

Let’s start the main process. Among all the information available on the Amazon site, we’ll focus on scraping the following elements:

- Name of the product;

- Rating;

- Price;

- Images;

- Description.

For this tutorial, we chose this product: https://www.amazon.com/Apple-2023-MacBook-512GB-Storage/dp/B0CB9BWMPY

Sending a request to the product page

*Before sending a request, we also have to import the libraries that we’ve installed recently:

import requests

from bs4 import BeautifulSoup

import pandas as pd

In this step, we will send an HTTP request to the Amazon product page URL to retrieve its HTML content. With the Requests library, you can simulate a web browser’s request for the page. Include appropriate headers such as User-Agent to mimic a real browser and reduce the chance that the request is rejected. The server’s response, containing the HTML content of the page, is then stored for further processing and data extraction.

import requests

from bs4 import BeautifulSoup

import pandas as pd

url = 'https://www.amazon.com/Apple-2023-MacBook-512GB-Storage/dp/B0CB9BWMPY'

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',

'Accept-Language': 'en-US, en;q=0.5'

}

response = requests.get(url, headers=headers)

soup = BeautifulSoup(response.content, 'lxml')
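The request above has no timeout and does not check the status code before parsing. As a minimal sketch of a more defensive variant, the hypothetical helper fetch_html below (not part of the original script) adds both. The timeout parameter and raise_for_status() are standard Requests features; the optional session argument is there only so the function can be tried without touching the network.

```python
import requests


def fetch_html(url, headers, session=None, timeout=10):
    """Download a page and return its HTML text.

    Raises requests.HTTPError for 4xx/5xx responses and
    requests.Timeout if the server does not answer in time.
    """
    session = session or requests.Session()
    response = session.get(url, headers=headers, timeout=timeout)
    response.raise_for_status()  # stop early on 4xx/5xx status codes
    return response.text


# Possible usage with the url and headers defined above:
# html = fetch_html(url, headers)
# soup = BeautifulSoup(html, 'lxml')
```

Failing early here keeps a blocked or broken response from surfacing later as a confusing parsing error.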

Product Name

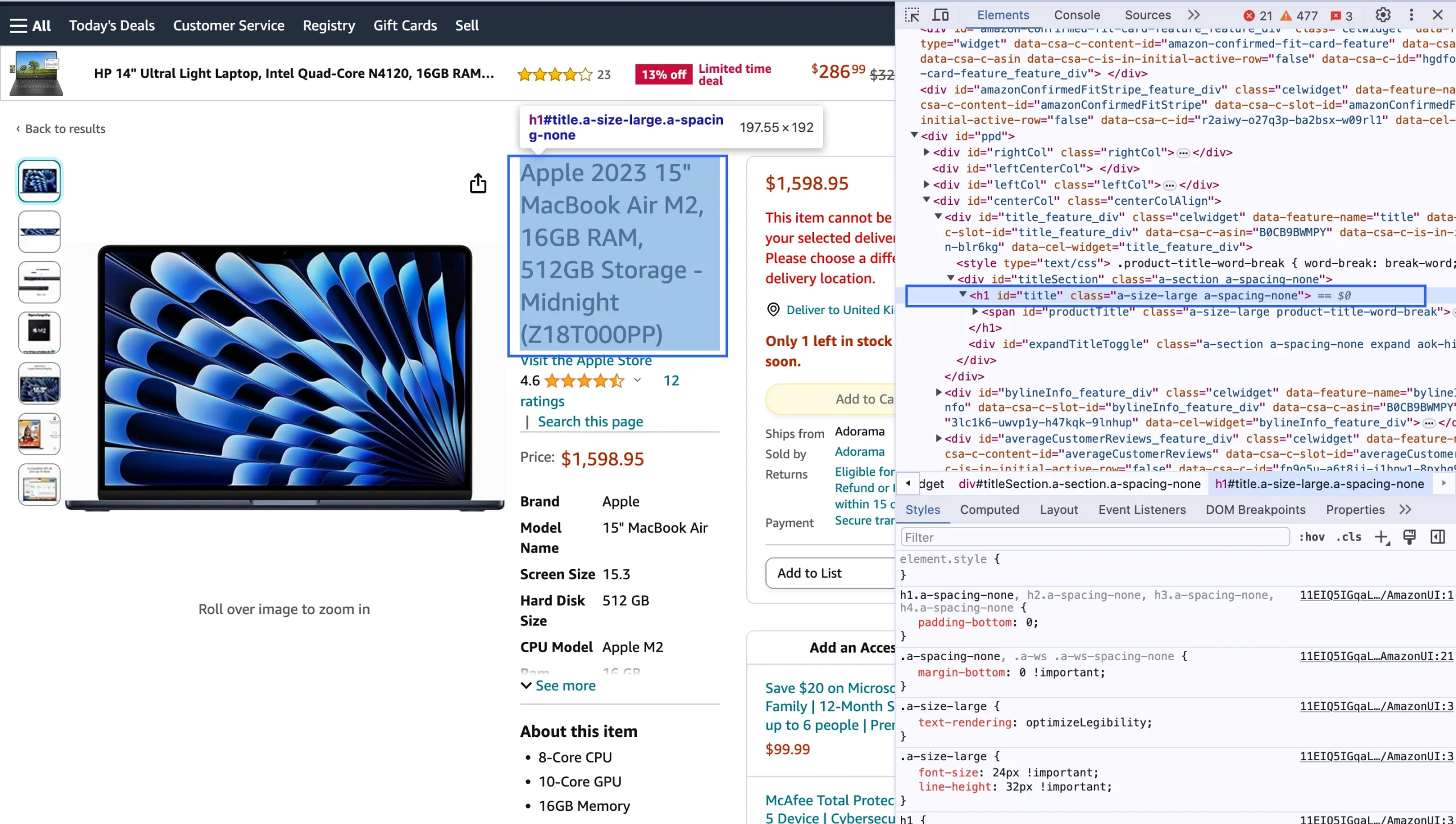

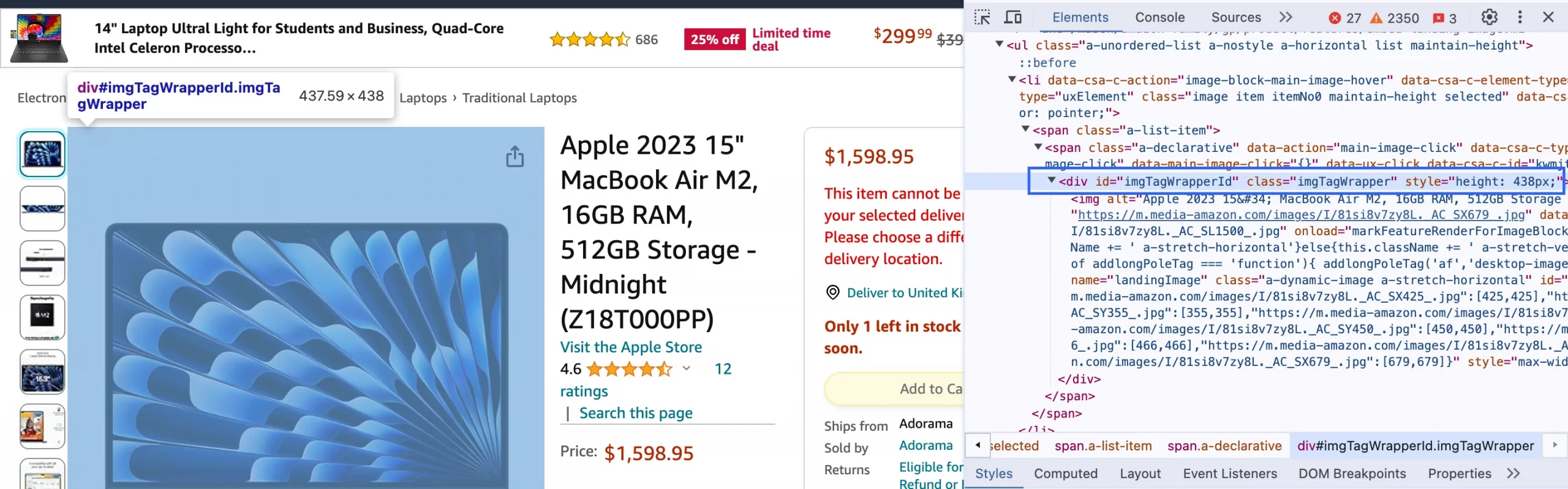

- Location

Once we inspect the title, we find that the title text is located inside the h1 tag with the id title, within a span tag with the id productTitle. Let’s go back to our file and write the code to extract this information from Amazon.

- Scrape Product Name

We will extract the product name from the HTML content of the Amazon product page. Using the BeautifulSoup library, we parse the HTML and locate the element that holds the product name by its tag and attributes, using methods like find() or find_all(), or select() with CSS selectors. (BeautifulSoup itself does not support XPath expressions; those require using lxml directly.) Once the element is found, we extract its text content and format it as needed before the next step.

*The text attribute returns the text content of the element.

product_name_element = soup.find('span', {'id': 'productTitle'})

product_name = product_name_element.text.strip()

print('Product Name:', product_name)

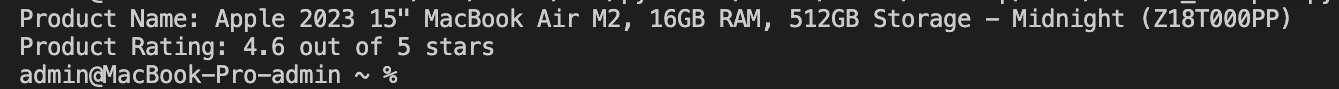

After running this code, we’ll have such result in the Terminal:
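One caveat with the snippet above: find() returns None when the element is missing (for example, when the page layout differs or a verification page is served instead), and calling .text on None raises an AttributeError. A small sketch of a guard follows. The helper name text_or_none and the sample markup are illustrative assumptions, not part of the original script.

```python
from bs4 import BeautifulSoup


def text_or_none(soup, tag, attrs):
    """Return the stripped text of the first matching element, or None."""
    element = soup.find(tag, attrs)
    if element is None:
        return None
    return element.get_text(strip=True)


# Tiny stand-in for a real product page (sample markup, not Amazon's).
sample_html = '<h1 id="title"><span id="productTitle"> Example Laptop </span></h1>'
sample_soup = BeautifulSoup(sample_html, 'html.parser')

print(text_or_none(sample_soup, 'span', {'id': 'productTitle'}))  # Example Laptop
print(text_or_none(sample_soup, 'span', {'id': 'missing'}))       # None
```

The same helper could be reused for the rating, price, and description lookups later in the tutorial.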

Product Images

- Location

To scrape product images from the Amazon product page, you need to locate the HTML elements that contain the image URLs. On Amazon product pages, images are often stored within ‘img’ tags. One common class for dynamic images on Amazon is ‘a-dynamic-image’.

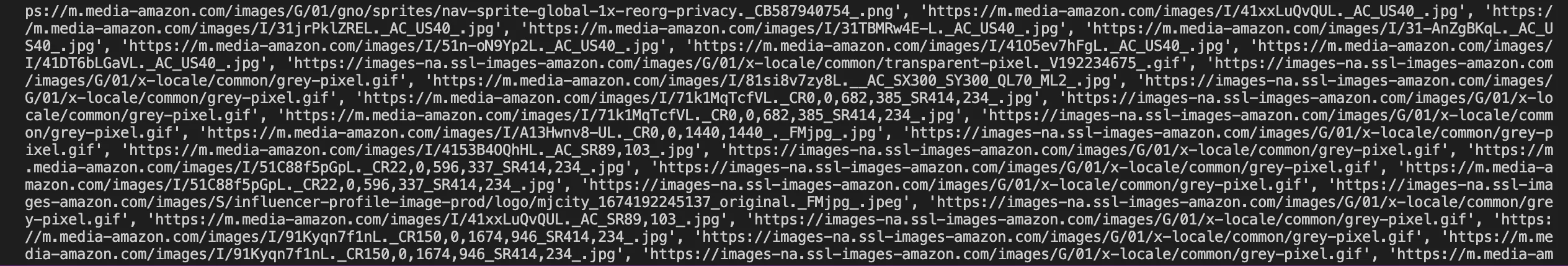

- Scrape Product Images

Use BeautifulSoup to parse the HTML content retrieved from the request. Extract the ‘src’ attribute from each ‘img’ tag, which contains the URL of the image.

image_tags = soup.find_all('img', {'class':'a-dynamic-image'})

product_images = [img['src'] for img in image_tags]

print('Product Images:', product_images)

*Sometimes this code does not return the desired results. If it finds 0 images, inspect the HTML structure and make sure you are using the correct class and attribute. As a fallback, you can try this code, which collects every image on the page:

if response.status_code == 200:

    soup = BeautifulSoup(response.content, 'html.parser')

    image_tags = soup.find_all('img')

    product_images = []

    for img_tag in image_tags:

        image_url = img_tag.get('src')

        if image_url:

            product_images.append(image_url)

    print('Product Images:', product_images)

else:

    print(f"Request failed with status code: {response.status_code}")

This returns the URL of every image on the page in a list, so expect icons and other page graphics alongside the product photos.

* Amazon product pages often include multiple images for a single product. Capture all relevant images by processing all elements in the list.
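The src attribute usually points to only one size of each image. As an assumption worth verifying in the browser inspector, Amazon's a-dynamic-image tags often also carry a data-a-dynamic-image attribute: a JSON object that maps each image URL to its width and height. If that attribute is present, a sketch like the one below can pick the largest variant. The helper name largest_image_url and the sample URLs are made up for illustration.

```python
import json


def largest_image_url(dynamic_image_attr):
    """Pick the URL with the largest pixel area from a JSON size map.

    dynamic_image_attr is expected to look like
    '{"https://.../small.jpg": [300, 200], "https://.../big.jpg": [1500, 1000]}'.
    Returns None if the attribute is empty or not valid JSON.
    """
    if not dynamic_image_attr:
        return None
    try:
        sizes = json.loads(dynamic_image_attr)
    except json.JSONDecodeError:
        return None
    if not isinstance(sizes, dict) or not sizes:
        return None
    return max(sizes, key=lambda url: sizes[url][0] * sizes[url][1])


# Sample value with made-up URLs and sizes.
sample_attr = (
    '{"https://example.com/small.jpg": [300, 200], '
    '"https://example.com/big.jpg": [1500, 1000]}'
)
print(largest_image_url(sample_attr))  # https://example.com/big.jpg
```

With the image_tags list from above, this could be applied as largest_image_url(img.get('data-a-dynamic-image')) for each tag.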

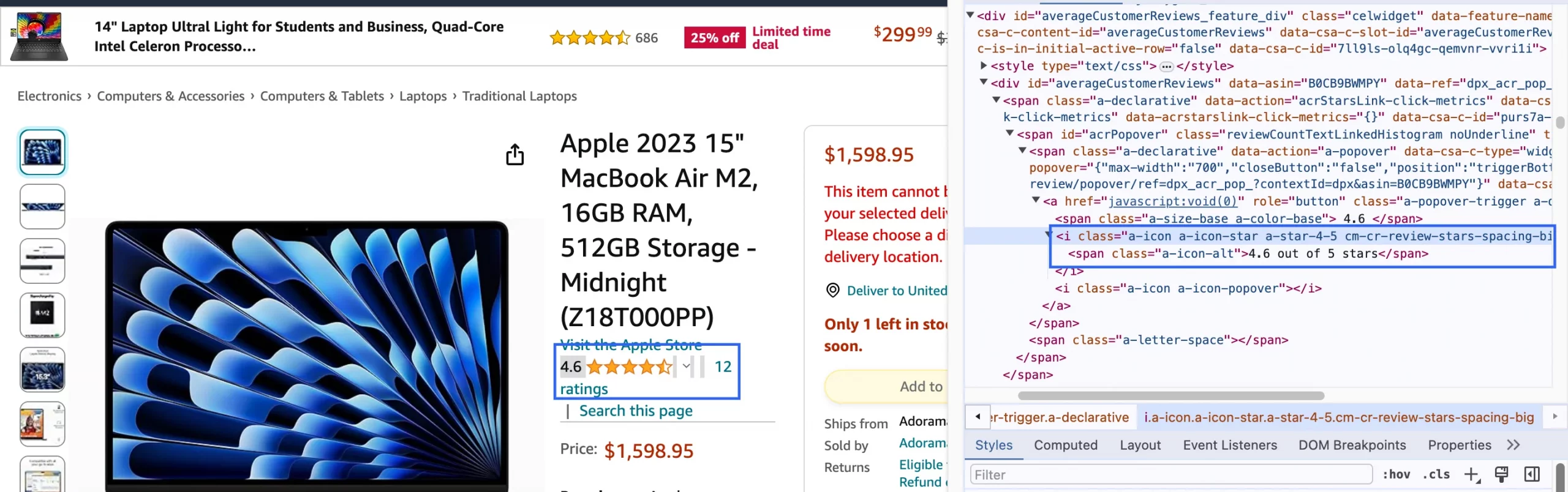

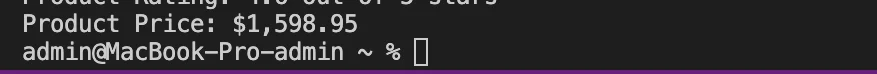

Product Rating

- Location

You can find the rating in the first ‘span’ tag with class ‘a-icon-alt’, which sits inside an ‘i’ tag.

* Make sure to adjust the class name according to the specific HTML structure of the Amazon product page you are scraping. If the class name is different, you’ll need to replace ‘a-icon-alt’ with the correct class name.

- Scrape Product Rating

To extract the product rating, locate the HTML element that represents the rating on the Amazon product page using its ‘class’ attribute, such as ‘a-icon-alt’. Once found, retrieve the text content of the element, which holds the rating value, and clean it for further processing.

product_rating = soup.find('span', {'class': 'a-icon-alt'}).text.strip()

print('Product Rating:', product_rating)

You’ll see this result:

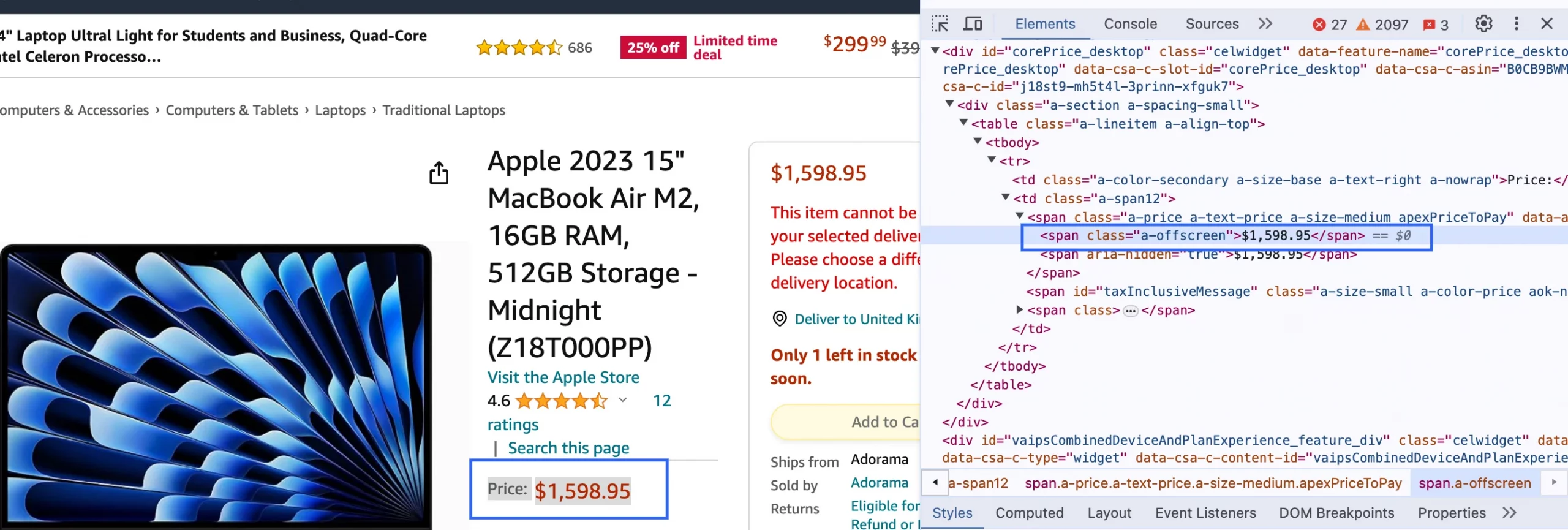
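The rating comes back as text rather than a number. Assuming it has a form like "4.7 out of 5 stars" (check the actual output on your page), a short sketch for turning it into a float could look like this. The function name parse_rating is hypothetical.

```python
import re


def parse_rating(rating_text):
    """Extract the leading number from text such as '4.7 out of 5 stars'."""
    match = re.search(r'\d+(?:[.,]\d+)?', rating_text or '')
    if match is None:
        return None
    # Some locales use a decimal comma; normalize it to a dot.
    return float(match.group().replace(',', '.'))


print(parse_rating('4.7 out of 5 stars'))  # 4.7
print(parse_rating('no rating yet'))       # None
```

A numeric rating is easier to sort and filter once the data is in a DataFrame.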

Product Price

- Location

To extract the product price, we’ll identify the HTML element that displays the price, specifically the one located just below the rating. On the Amazon product page, this price is typically contained within a ‘span’ tag with the class ‘a-price’. Once you have located this ‘span’ tag, you can navigate to its first ‘span’ tag to retrieve the actual price value.

- Scrape Product Price

This code looks up the first ‘span’ tag with the class ‘a-offscreen’ directly. That tag normally sits inside the ‘span’ with the class ‘a-price’ and contains the price as text.

product_price = soup.find('span', {'class': 'a-offscreen'}).text.strip()

print('Product Price:', product_price)

The result will be:

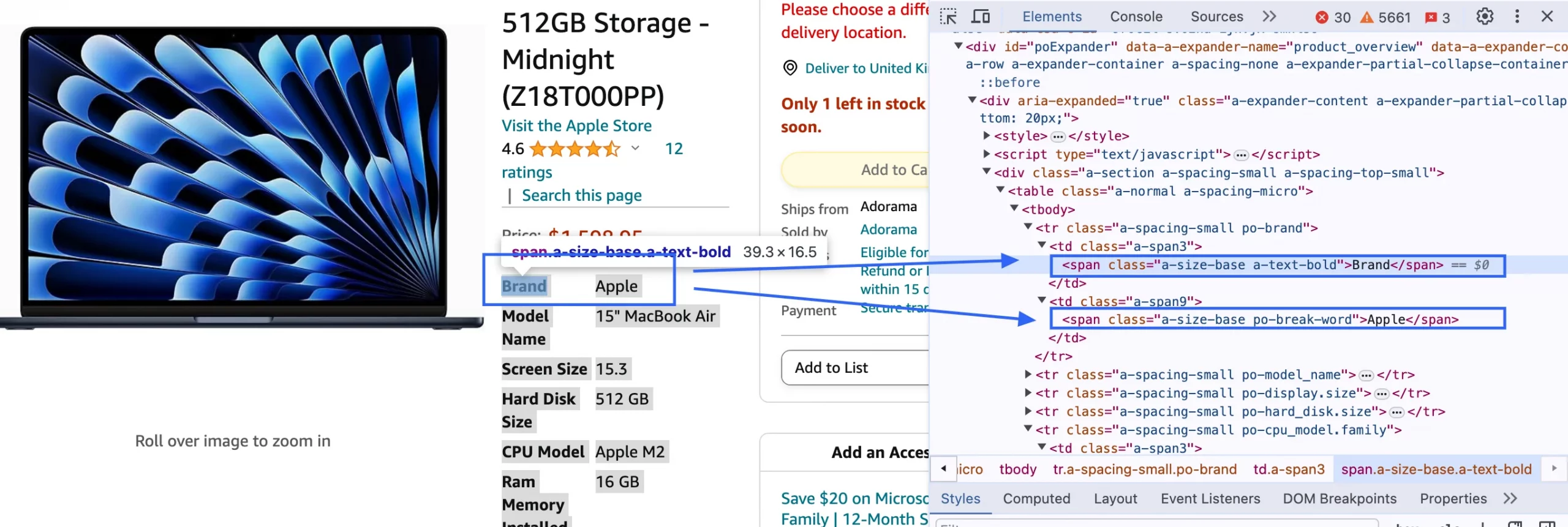
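Like the rating, the price is scraped as text. For price comparisons it helps to convert it to a number. The sketch below assumes US-style formatting such as "$1,299.00" (comma for thousands, dot for decimals); the function name parse_price is made up for this example.

```python
import re
from decimal import Decimal


def parse_price(price_text):
    """Convert text such as '$1,299.00' to a Decimal, or return None."""
    # Keep digits, commas and dots; drop currency symbols and spaces.
    cleaned = re.sub(r'[^\d.,]', '', price_text or '')
    # Assumes US-style formatting: comma = thousands, dot = decimals.
    cleaned = cleaned.replace(',', '')
    if not re.fullmatch(r'\d+(\.\d+)?', cleaned):
        return None
    return Decimal(cleaned)


print(parse_price('$1,299.00'))  # 1299.00
print(parse_price(''))           # None
```

Decimal is used instead of float here to avoid rounding surprises with currency values.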

Product Description

- Location

To scrape the product description from the Amazon product page, you need to locate the HTML elements that contain it. Amazon product descriptions are often found within a ‘div’ or ‘span’ tag with the id ‘productDescription’. If that element is not found, check the ‘ul’ with the class ‘a-unordered-list a-vertical a-spacing-mini’ for bullet-point descriptions.

*The description details are stored inside ‘tr’ tags with class ‘a-spacing-small’. Once you find these rows, read the text from both ‘span’ tags under each one.

-

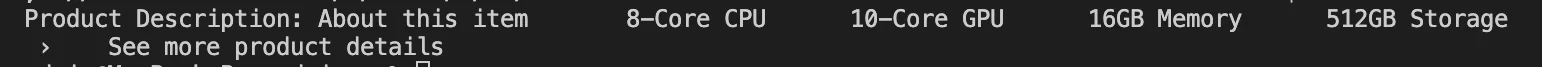

Scrape Product Description

By running this script, you should be able to extract the product description from the ‘feature-bullets’ section of the Amazon product page. This is a common section that contains bullet points describing the product features.

description_section = soup.find('div', {'id': 'feature-bullets'})

product_description = description_section.get_text(separator=' ').strip()

print('Product Description:', product_description)

You’ll get this:

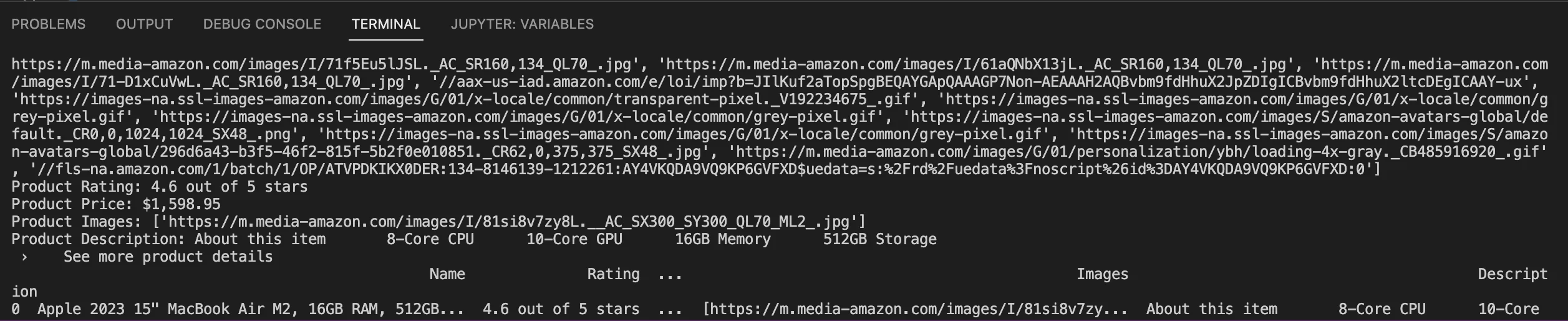
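Text collected with get_text(separator=' ') tends to contain runs of spaces and line breaks left over from the page layout. One simple way to tidy it, sketched here with a hypothetical helper and made-up sample text, is to collapse all whitespace into single spaces.

```python
import re


def normalize_whitespace(text):
    """Collapse runs of spaces, tabs and newlines into single spaces."""
    return re.sub(r'\s+', ' ', text).strip()


raw = '  About this item \n\n  Fast chip   \n  Long battery life  '
print(normalize_whitespace(raw))  # About this item Fast chip Long battery life
```

In the script above, this could be applied as normalize_whitespace(product_description) before storing the value.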

After scraping all the required information, you can store the data in a dictionary. Then, you can convert this dictionary into a pandas DataFrame for easier data manipulation and display.

product_info = {

'Name': product_name,

'Rating': product_rating,

'Price': product_price,

'Images': product_images,

'Description': product_description

}

df = pd.DataFrame([product_info])

print(df)
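Since pandas can export to CSV, the DataFrame can also be saved for later analysis. The sketch below uses made-up sample values in place of the scraped data. The helper product_to_frame is a hypothetical name, and joining the image list into one string is just one possible way to keep the CSV cell readable.

```python
import pandas as pd


def product_to_frame(product_info):
    """Build a one-row DataFrame, joining the image list into one string."""
    row = dict(product_info)
    row['Images'] = ' | '.join(row.get('Images') or [])
    return pd.DataFrame([row])


# Made-up values standing in for the scraped data.
sample_info = {
    'Name': 'Example Laptop',
    'Rating': '4.7 out of 5 stars',
    'Price': '$1,299.00',
    'Images': ['https://example.com/a.jpg', 'https://example.com/b.jpg'],
    'Description': 'Fast chip. Long battery life.',
}

df = product_to_frame(sample_info)

# Without a path, to_csv returns the CSV as a string.
# Pass a file name instead, e.g. df.to_csv('product.csv', index=False), to write to disk.
csv_text = df.to_csv(index=False)
print(csv_text)
```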

Complete Code

You can easily modify the code to collect more data from the Amazon product page, like customer reviews, product details, or seller information. By checking the HTML structure, you can adjust the code to extract these additional elements. It can be useful for personal use or integrating into an app that tracks Amazon prices, helping users find the best deals.

For now, the code looks like this. By following this step-by-step tutorial, you can see how each part of the code works and make further changes to fit your specific needs.

import requests

from bs4 import BeautifulSoup

import pandas as pd

url = 'https://www.amazon.com/Apple-2023-MacBook-512GB-Storage/dp/B0CB9BWMPY'

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',

'Accept-Language': 'en-US, en;q=0.5'

}

response = requests.get(url, headers=headers)

soup = BeautifulSoup(response.content, 'lxml')

product_name_element = soup.find('span', {'id': 'productTitle'})

product_name = product_name_element.text.strip()

print('Product Name:', product_name)

if response.status_code == 200:

    soup = BeautifulSoup(response.content, 'html.parser')

    image_tags = soup.find_all('img')

    product_images = []

    for img_tag in image_tags:

        image_url = img_tag.get('src')

        if image_url:

            product_images.append(image_url)

    print('Product Images:', product_images)

else:

    print(f"Request failed with status code: {response.status_code}")

product_rating = soup.find('span', {'class': 'a-icon-alt'}).text.strip()

print('Product Rating:', product_rating)

product_price = soup.find('span', {'class': 'a-offscreen'}).text.strip()

print('Product Price:', product_price)

# Narrow the result to the main product images (this replaces the fallback list built above)

image_tags = soup.find_all('img', {'class': 'a-dynamic-image'})

product_images = [img['src'] for img in image_tags]

print('Product Images:', product_images)

description_section = soup.find('div', {'id': 'feature-bullets'})

product_description = description_section.get_text(separator=' ').strip()

print('Product Description:', product_description)

product_info = {

'Name': product_name,

'Rating': product_rating,

'Price': product_price,

'Images': product_images,

'Description': product_description

}

df = pd.DataFrame([product_info])

print(df)

When you run the script, the DataFrame is printed directly to your terminal, where you can see the data organized in rows and columns.

Tips to scrape Amazon pages with Python:

- Respect Amazon’s Terms of Service;

- Parse HTML carefully and complete security checks;

- Regularly update your code since the structure of Amazon pages can change;

- Use proper headers such as ‘User-Agent’ and ‘Accept-Language’;

- Use Proxies and IP rotation.
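To illustrate the last tip, Requests accepts a proxies dictionary on each call, so rotation can be as simple as cycling through a list of proxy addresses. The sketch below is a minimal round-robin example; the function name proxy_rotation and the proxy addresses are placeholders, not real endpoints.

```python
from itertools import cycle


def proxy_rotation(proxy_urls):
    """Yield requests-style proxy dicts, cycling through proxy_urls forever."""
    for proxy_url in cycle(proxy_urls):
        yield {'http': proxy_url, 'https': proxy_url}


# Placeholder addresses; replace them with proxies you are allowed to use.
proxies = proxy_rotation([
    'http://user:pass@proxy1.example.com:8000',
    'http://user:pass@proxy2.example.com:8000',
])

print(next(proxies)['https'])
print(next(proxies)['https'])
print(next(proxies)['https'])  # back to the first proxy

# With requests, each call could then use the next proxy:
# requests.get(url, headers=headers, proxies=next(proxies), timeout=10)
```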

Try DataImpulse for scraping Amazon

With proxies, you send requests from different IPs, as if from different devices, locations, and time zones. Sites are far less likely to flag your traffic, show you a captcha, or ban you. With us, you’ll get:

- Up-to-date data;

- IP rotation, no blacklisted IPs;

- Help with fixing verification issues;

- Intuitive APIs that quickly adapt to changes in Amazon’s HTML structure;

- Powerful infrastructure, perfect for big scraping projects;

- 24/7 human support.

Contact us at [email protected] or click the “Try now” button in the top-right corner.

*This tutorial is purely for educational purposes and serves as a demonstration of technical capabilities. It’s important to recognize that scraping data from Amazon raises concerns regarding terms of use and legality. Unauthorized scraping can lead to serious consequences, including legal actions and account suspension. Always proceed with caution and follow ethical guidelines.