
Many people believe that AI tools like ChatGPT or Gemini are doing too much of our work, replacing real human effort, and even changing the way we think and make decisions. This often raises concerns about the future of jobs and creativity. But is AI really a threat, or is the danger overestimated, and is it simply a handy assistant that helps us when used in moderation?

Take web scraping as an example. Traditionally, building scrapers required solid coding knowledge, attention to detail, and debugging skills. With ChatGPT, even beginners can generate ready-to-use scraping scripts in seconds, while professionals can save hours by letting AI handle repetitive coding tasks. Instead of replacing developers, AI works as a powerful productivity tool that reduces routine work.

This kind of scraping can be useful for researchers collecting data, businesses monitoring competitors, or students learning Python. By combining ChatGPT’s ability to write and explain code with Python’s scraping libraries, anyone can build efficient and fast scrapers.

The Key Tools You Need to Get Started

Before we start web scraping with Python, let’s make sure our environment is set up correctly. We will need:

- Visual Studio Code (or any other code editor)

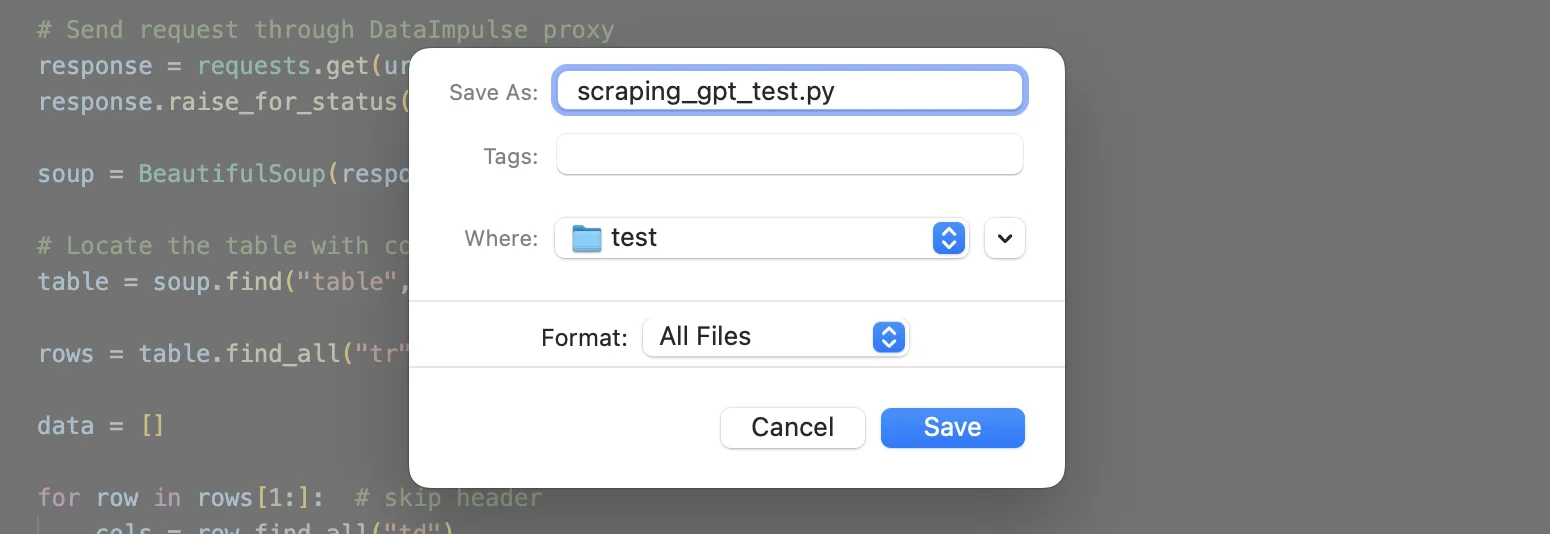

Download VS Code from the official website and follow the installation instructions for your operating system. Then open it and install the Python extension from the Extensions view (the square icon in the sidebar). Open the folder where you want to create your project by selecting File > Open Folder, and create a new Python file for your script, e.g., scraping_gpt_test.py

- Python and its libraries (Requests, BeautifulSoup)

In case you don’t have Python 3.x installed on your computer, you can download it from https://www.python.org/. Check if Python is installed by running one of these commands:

python --version

python3 --version

Some systems still link python to Python 2, so use python3 (and pip3) to be sure you’re running Python 3. To use Python libraries, first install pip with one of these commands:

python -m ensurepip

python3 -m ensurepip

Then open a terminal on your computer and install the Python libraries with one of these commands:

pip install requests beautifulsoup4

pip3 install requests beautifulsoup4

Press Enter, and the output will show whether the libraries were installed successfully.

- ChatGPT account

Create a free or paid OpenAI account to access ChatGPT, which you’ll use for writing, debugging, and improving your scraping scripts.

- DataImpulse proxies

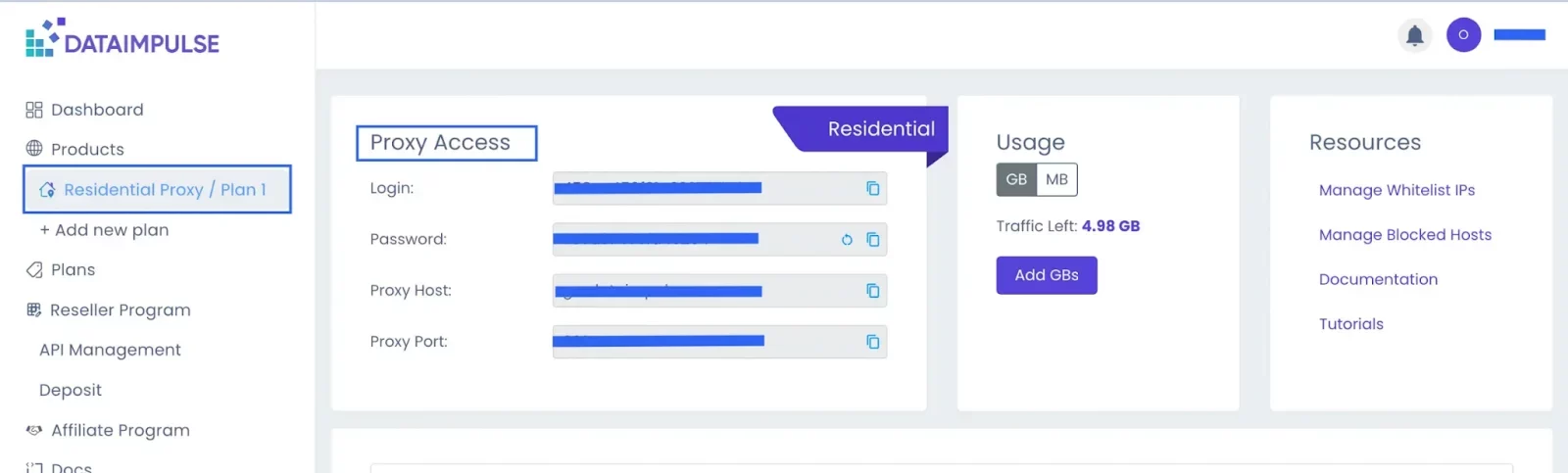

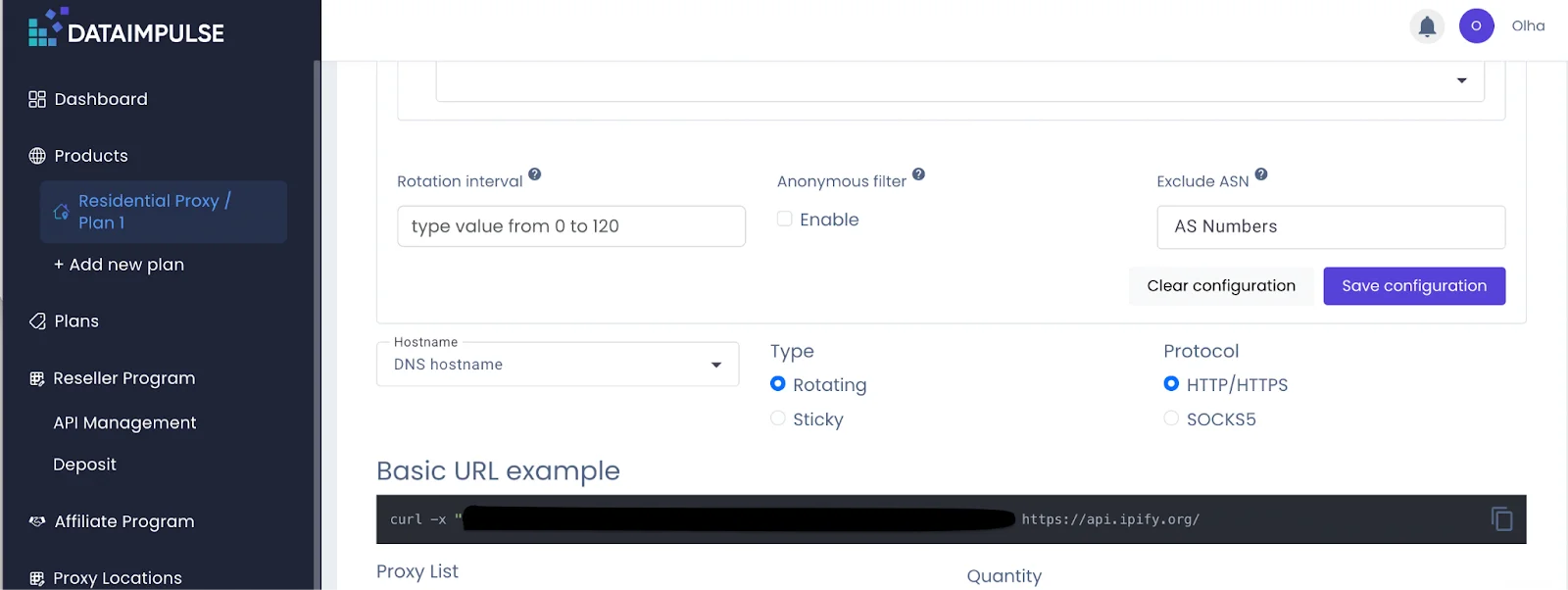

We will integrate proxies by using proxy credentials. You can access all the necessary information in your DataImpulse dashboard, within the details of your selected plan.

You can also copy the right details under the Basic URL example section.

- The website you’d like to scrape

In this tutorial, we’re using Wikipedia and its list of countries by population. Remember to replace it with your target website in the code.

👉 Always follow the rules: check a website’s terms of service and robots.txt file before scraping to stay safe and ethical.
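The robots.txt check mentioned above can be automated with Python's standard library. The snippet below is a minimal sketch, not a complete compliance check: the function name `is_allowed`, the `my-scraper` user agent, and the sample robots.txt content are made up for illustration.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Made-up robots.txt content, used only to illustrate the check.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""

if __name__ == "__main__":
    for url in (
        "https://example.com/wiki/Page",
        "https://example.com/private/data",
    ):
        print(url, is_allowed(SAMPLE_ROBOTS, "my-scraper", url))
```

For a live site, `RobotFileParser` can also download the file itself: call `set_url()` with the site's robots.txt address, then `read()`, then `can_fetch()`. Keep in mind that robots.txt does not replace reading the terms of service.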

Can ChatGPT Generate Python Scraping Code? Let’s Test

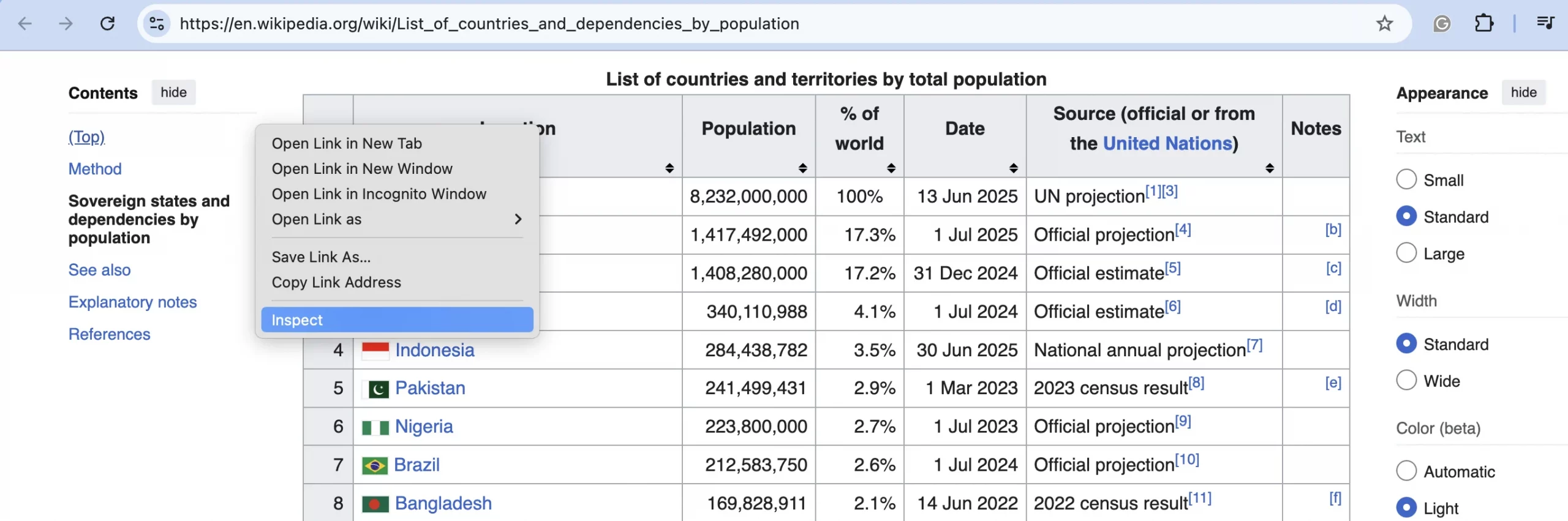

1. Inspect the target page and find the elements for scraping.

Right-click on the element you want (for example, a country name or population number) and choose Inspect in your browser. This will open the Developer Tools panel. Hover over the highlighted HTML to make sure you’ve selected the correct element.

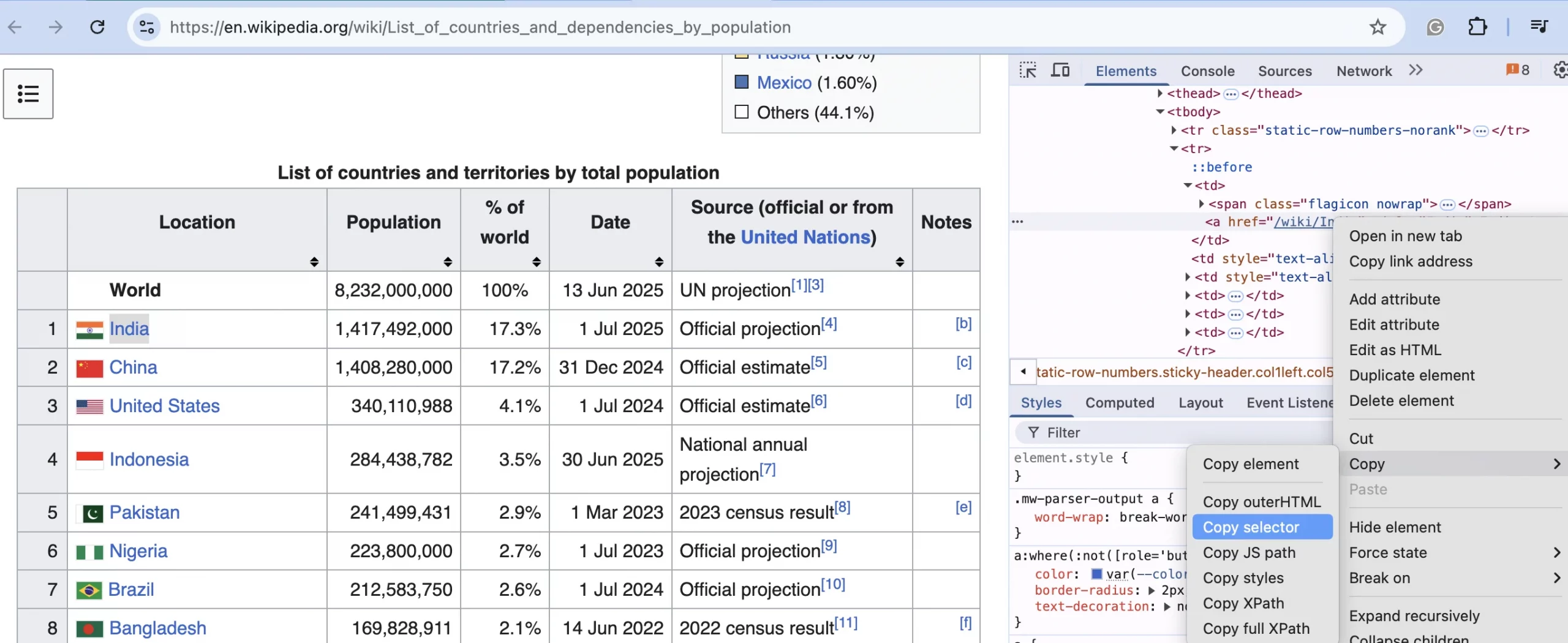

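2. Copy the CSS selectors of those elements.

Once you’ve located the element, right-click on its HTML in the panel and copy the CSS selector (in Chrome, choose Copy > Copy selector). Save the selector for each element you want to scrape.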

3. Make a detailed prompt for ChatGPT.

Write a prompt that includes:

- The target URL;

- The selectors you copied;

- The libraries you want to use (e.g., requests, BeautifulSoup);

Mention proxies and headers, BUT don’t share your credentials with ChatGPT.
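One way to keep credentials out of both the prompt and the script is to read them from environment variables at run time. This is a rough sketch under assumptions: the variable names `DATAIMPULSE_USER`, `DATAIMPULSE_PASS`, `DATAIMPULSE_HOST`, and `DATAIMPULSE_PORT` are hypothetical, and the real values come from your DataImpulse dashboard.

```python
import os
from urllib.parse import quote


def build_proxies(env=os.environ):
    """Build a requests-style proxies dict from environment variables.

    The variable names below are hypothetical; use whatever names you prefer.
    """
    # Percent-encode the credentials so characters like "@" or ":" in a
    # password do not break the proxy URL.
    user = quote(env["DATAIMPULSE_USER"], safe="")
    password = quote(env["DATAIMPULSE_PASS"], safe="")
    host = env["DATAIMPULSE_HOST"]
    port = env["DATAIMPULSE_PORT"]

    proxy_url = f"http://{user}:{password}@{host}:{port}"
    return {"http": proxy_url, "https": proxy_url}


# Usage (after exporting the variables in your shell):
#   proxies = build_proxies()
#   requests.get(url, proxies=proxies, timeout=15)
```

With this approach, the generated script can contain placeholder credentials, and the real ones never leave your machine.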

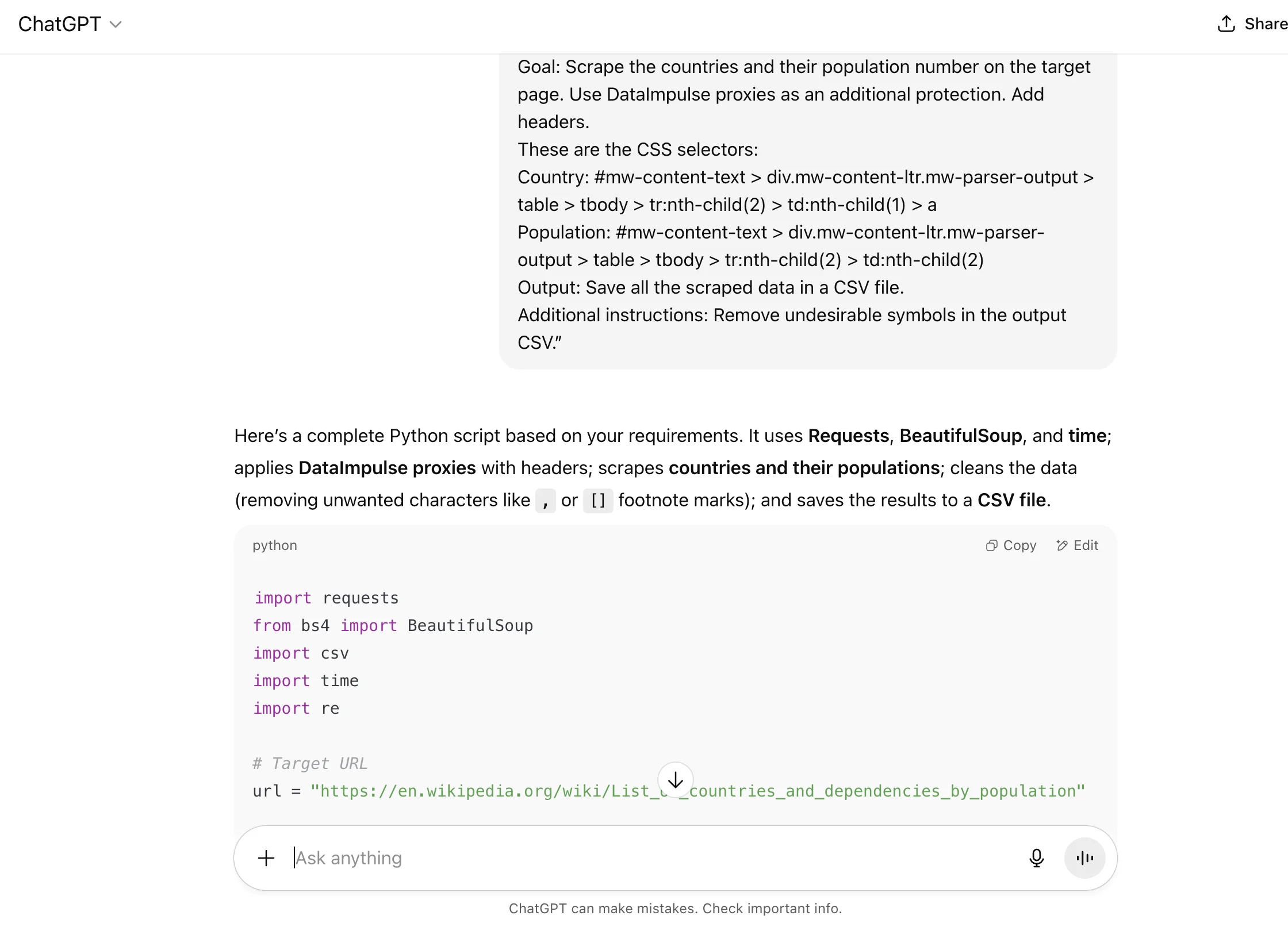

Paste your prompt into ChatGPT. Our prompt:

“Create a web scraper using Python, Beautiful Soup, and Requests libraries. Import time. Target website:

https://en.wikipedia.org/wiki/List_of_countries_and_dependencies_by_population

Goal: Scrape the countries and their population number on the target page. Use DataImpulse proxies as an additional protection. Add headers.

These are the CSS selectors:

- Country: #mw-content-text > div.mw-content-ltr.mw-parser-output > table > tbody > tr:nth-child(2) > td:nth-child(1) > a

- Population: #mw-content-text > div.mw-content-ltr.mw-parser-output > table > tbody > tr:nth-child(2) > td:nth-child(2)

Output: Save all the scraped data in a CSV file.

Additional instructions: Remove undesirable symbols in the output CSV.”

Here is the response of ChatGPT:

4. Review and run the final code.

Copy the script into your editor and run it. Some debugging may be necessary if the selectors or the page formatting differ. If you’re using proxies, always include a User-Agent header to support consistent access. Importing time lets you add delays between requests with time.sleep(), which helps balance the request load. Here is the code:

import requests
from bs4 import BeautifulSoup
import csv
import re
import time

# DataImpulse proxy configuration.
# Replace username, password, and PROXY_HOST with the values from your
# DataImpulse dashboard (the host was obscured in the original listing).
PROXY = "http://username:password@PROXY_HOST:8000"

proxies = {
    "http": PROXY,
    "https": PROXY
}

url = "https://en.wikipedia.org/wiki/List_of_countries_and_dependencies_by_population"

headers = {
    "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) "
                  "AppleWebKit/537.36 (KHTML, like Gecko) "
                  "Chrome/119.0.0.0 Safari/537.36",
    "Accept-Language": "en-US,en;q=0.9",
}

# Try up to three times. RequestException also covers timeouts and
# connection errors, not only HTTP error status codes.
for attempt in range(3):
    try:
        response = requests.get(url, headers=headers, proxies=proxies, timeout=15)
        response.raise_for_status()
        break
    except requests.exceptions.RequestException as e:
        print(f"Attempt {attempt + 1}: request failed ({e}). Retrying...")
        time.sleep(2)
else:
    # Runs only if the loop never hit `break`, i.e. every attempt failed
    raise SystemExit("Failed to fetch the page after retries.")

soup = BeautifulSoup(response.text, "html.parser")

# Locate the first wikitable
table = soup.find("table", {"class": "wikitable"})
if table is None:
    raise SystemExit("No table with class 'wikitable' found on the page.")

rows = table.find_all("tr")

data = []
for row in rows[1:]:  # skip header row
    cols = row.find_all("td")
    if len(cols) >= 2:
        country = cols[0].get_text(strip=True)
        population = cols[1].get_text(strip=True)

        # Clean unwanted symbols
        population = re.sub(r"\[.*?\]", "", population)  # remove [1], [note] etc.
        population = population.replace(",", "").replace("\xa0", " ").strip()

        data.append([country, population])

# Save results to CSV
with open("countries_population.csv", "w", newline="", encoding="utf-8") as f:
    writer = csv.writer(f)
    writer.writerow(["Country", "Population"])
    writer.writerows(data)

print("Scraping completed. Data saved to countries_population.csv")

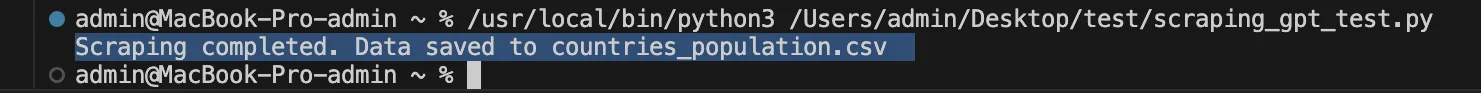

Once the script runs, your VS Code terminal will show:

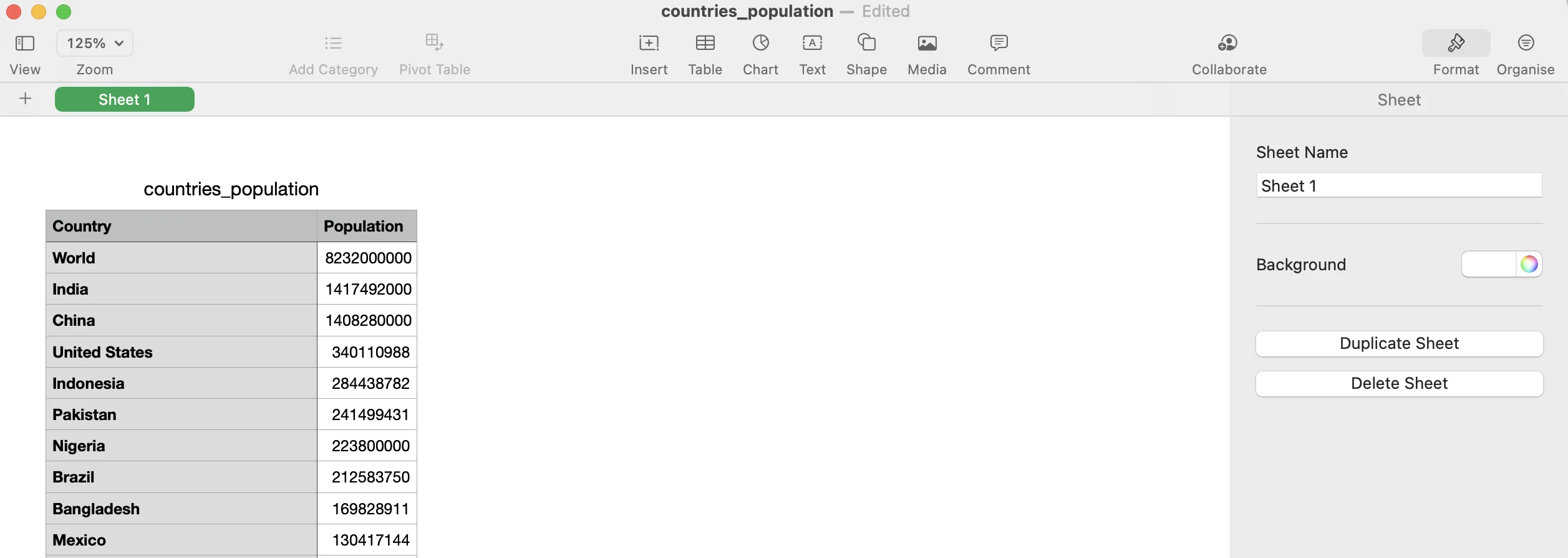

Now open the countries_population.csv file. Inside, you’ll find a structured sheet containing countries and their populations. Congrats on completing the task!
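If you prefer to check the result from Python rather than by eye, a short sanity check can count the rows and flag population values that are not plain digits. This is only a sketch: the function name `summarize_population_csv` is made up, and it assumes the `Country` and `Population` column headers written by the script above.

```python
import csv


def summarize_population_csv(lines):
    """Return (row_count, bad_rows) for CSV text with Country/Population columns.

    `lines` can be an open file or any iterable of CSV lines.
    """
    row_count = 0
    bad_rows = []
    for row in csv.DictReader(lines):
        row_count += 1
        # After cleaning, a population value should contain digits only.
        if not row["Population"].isdigit():
            bad_rows.append(row)
    return row_count, bad_rows


# Usage with the file produced by the scraper:
#   with open("countries_population.csv", newline="", encoding="utf-8") as f:
#       total, bad = summarize_population_csv(f)
#   print(f"{total} rows, {len(bad)} rows need another look")
```

Rows that end up in `bad_rows` usually point to formatting the cleaning step did not anticipate.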

Here Come the Issues

When scraping, things don’t always work the way we expect. Here are some common problems:

- 403 Client Error: Some websites block requests that don’t look like they come from a real browser. Always include headers like User-Agent, especially when you use proxies.

- Blocked IP or Rate Limiting: Many requests from the same IP address in a short time are easy to detect, and websites may deny access or present you with verification tasks. Use residential proxies (fast and reliable, from real home devices) or mobile proxies (dynamic and hard to detect, from mobile carriers) to reduce verification interruptions.

- Timeouts or Slow Responses: The website or your connection may be slow, causing requests to fail. Add time.sleep() between requests and implement retry logic.

- Broken CSS Selectors: Websites often change their layout, so your saved selectors might stop working. Re-inspect the elements in the browser and update the selectors in your script.

- Messy Data: HTML may contain extra symbols, empty values, or unexpected formatting. Clean the data in Python before saving to CSV, e.g., remove special characters or trim whitespace.
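The retry and cleanup advice above can be sketched with two small helpers that use only the standard library. The names `retry` and `clean_cell` are hypothetical, and the doubling delay is one possible choice (the script earlier in this article uses a fixed two-second pause instead).

```python
import re
import time


def retry(func, attempts=3, base_delay=1.0, exceptions=(Exception,), sleep=time.sleep):
    """Call func() up to `attempts` times, doubling the delay after each failure."""
    for attempt in range(attempts):
        try:
            return func()
        except exceptions:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** attempt)  # 1s, 2s, 4s, ...


def clean_cell(text):
    """Strip footnote markers, non-breaking spaces, and thousands separators."""
    text = re.sub(r"\[.*?\]", "", text)  # drop [1], [note] and similar
    text = text.replace("\xa0", " ")     # non-breaking space -> regular space
    return text.replace(",", "").strip()


# Usage with requests (assuming `url`, `headers`, and `proxies` are defined):
#   response = retry(
#       lambda: requests.get(url, headers=headers, proxies=proxies, timeout=15),
#       exceptions=(requests.exceptions.RequestException,),
#   )
```

Passing the exception types explicitly keeps the helper from silently retrying on programming errors, and the `sleep` parameter makes the delay easy to replace when testing.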

Is There a Future for AI-Assisted Web Scraping?

A simple answer: yes. AI tools like ChatGPT are not replacing developers; they’re augmenting them. Such tools handle repetitive coding tasks, generate scraping scripts quickly, and suggest solutions for common errors. AI-assisted scraping will help professionals work faster, stay efficient, and focus on higher-level analysis instead of manual coding. Still, balance matters: generated code may need review and debugging before it works as intended.

Even with AI, it’s important to scrape responsibly. Use reliable proxies, respect rate limits, and follow a website’s terms of service.

*This tutorial is intended strictly for educational purposes. It demonstrates web scraping techniques. It is not meant to encourage or instruct anyone to scrape websites in violation of their terms of service or applicable laws. Always ensure that your scraping activities are ethical, legal, and respectful of website rules.