
CAPTCHA systems are built to spot the patterns that separate bots from humans, but they can still be tricked. Adding unpredictability and human-like actions to your scraper makes it harder to classify, which helps you avoid being blocked. Selenium is a browser automation framework with a user-friendly Python API for controlling browsers. By default, Selenium runs with a visible browser window, but it can also run in headless mode. Some plugins help mask automation, so the browser looks more like a real user’s and the risk of detection drops.

In this tutorial, we’ll explore four ways of bypassing CAPTCHAs: Undetected ChromeDriver, proxy rotation, human behavior simulation, and external services (CapSolver). We’ll look at each of them so that you can decide which one suits you best.

Using Undetected ChromeDriver

Undetected ChromeDriver: what is it?

Developers often use browser automation tools such as Selenium, usually in headless mode, to get around websites’ anti-bot mechanisms. Undetected ChromeDriver is a patched version of ChromeDriver designed to avoid detection. The standard ChromeDriver reveals information that anti-bot systems use to identify bots; Undetected ChromeDriver addresses these leaks, reducing the likelihood that systems such as DataDome, Imperva, and BotProtect.io will block your bot.
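One widely known example of such a leak is the navigator.webdriver property, which page JavaScript can read and which is normally true in a browser driven by the standard ChromeDriver. The sketch below is a minimal check for that single signal. The helper names automation_flag and describe_flag are made up for illustration, and real anti-bot systems look at many more signals than this one.

```python
def automation_flag(driver):
    """Return what page JavaScript sees in navigator.webdriver.

    `driver` is any Selenium-style WebDriver, i.e. an object that
    provides an execute_script() method.
    """
    return driver.execute_script("return navigator.webdriver")


def describe_flag(value):
    """Turn the raw flag value into a short, readable verdict."""
    if value is True:
        return "automation flag exposed"
    return "automation flag not exposed"
```

Calling describe_flag(automation_flag(driver)) with a standard Selenium Chrome session would be expected to report the flag as exposed. With Undetected ChromeDriver the flag is usually hidden, although that can vary between versions.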

Preparation:

- Make sure to use Python 3.6 or a newer version and install Selenium.

- Download and install Chrome.

Key steps:

1. Install Selenium if you haven’t done it yet.

pip3 install selenium

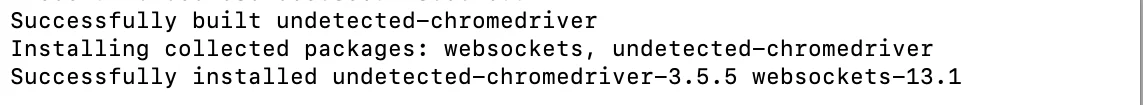

2. Next, install the undetected-chromedriver module by running the following command in the terminal:

pip3 install undetected-chromedriver

You’ll see this message if it is successful:

3. We use the VS Code editor in this tutorial. Import the Undetected ChromeDriver library, then choose a webpage you’d like to scrape. Taking a screenshot of the target page lets you check that it loaded correctly, without a CAPTCHA. Run the following script:

import time

import undetected_chromedriver as uc

# Initialize Chrome with undetected ChromeDriver
browser = uc.Chrome(headless=True, use_subprocess=True)

# Navigate to the target page
browser.get("https://example.com")

# Wait a few seconds to ensure the page loads fully
time.sleep(3)

# Save a screenshot to verify page loading
browser.save_screenshot("screenshot.png")
print("Screenshot taken and saved as 'screenshot.png'")

# Close the browser after the task
browser.quit()
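A screenshot has to be inspected by hand, so for repeated runs it can help to also check the page source automatically. The sketch below is a rough heuristic, not a reliable detector: the marker list is an illustrative assumption based on the class names commonly used by reCAPTCHA, hCaptcha, and Cloudflare Turnstile widgets, and find_captcha_markers is a hypothetical helper name.

```python
# Substrings that commonly appear in the HTML of CAPTCHA pages.
# This list is an illustrative assumption, not an exhaustive catalogue.
CAPTCHA_MARKERS = (
    "g-recaptcha",   # reCAPTCHA widget class
    "h-captcha",     # hCaptcha widget class
    "cf-turnstile",  # Cloudflare Turnstile widget class
    "captcha",       # generic fallback
)


def find_captcha_markers(page_source):
    """Return the markers found in the HTML, in the order listed above."""
    html = page_source.lower()
    return [marker for marker in CAPTCHA_MARKERS if marker in html]
```

In the script above, you could call find_captcha_markers(browser.page_source) before browser.quit(). An empty list suggests that no CAPTCHA widget was rendered, though a page can still block you in other ways.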

You’ve done it! If the screenshot shows the page without a CAPTCHA, Undetected ChromeDriver got through and you can start scraping the website. This method works best for small projects. It is less effective for large-scale scraping because it does not hide your IP address, so many requests from the same address can still get you blocked. The next strategy addresses that.

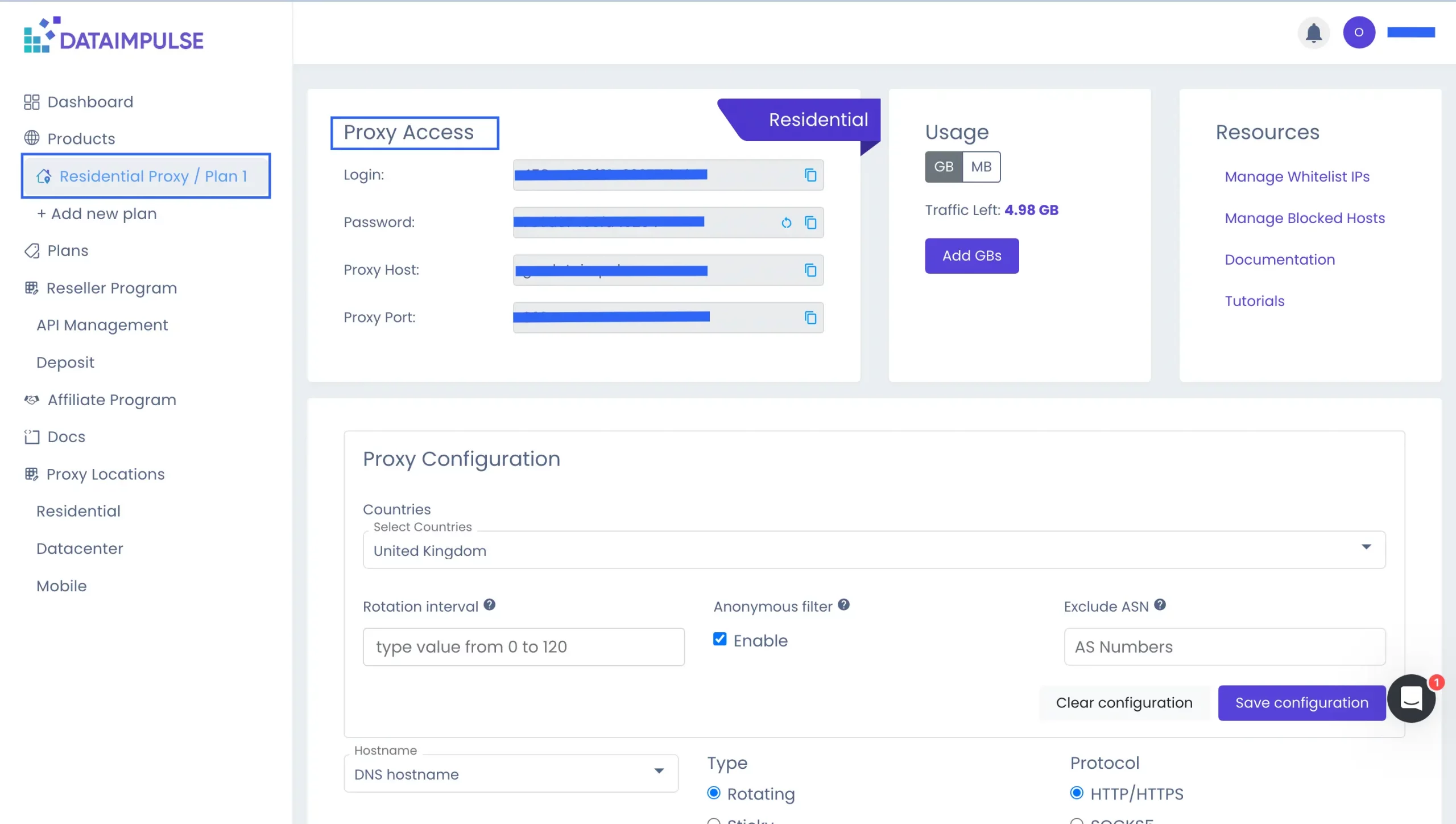

Using Proxy Rotation

Websites may implement a hidden CAPTCHA to spot bot activity. If many requests come from one IP address, websites treat it as bot behavior and block access. Rotating IP addresses helps solve this issue. In our tutorial, we are using residential proxies from DataImpulse. If you’d like to give them a try, just sign up and choose your preferred plan. All essential information is available on your Dashboard, and you can find your DataImpulse credentials within your plan under the Proxy Access section.

Moreover, in our comprehensive guide, you can find information about how to join our community, along with an overview of our user-friendly dashboard and the sections it includes.

You can create a pool of proxies or use a single proxy. For a single proxy, put just one entry, "your_single_proxy:port", in the proxy list, replacing it with your host and port.

The example below requests https://httpbin.io/ip, which echoes the IP address the request arrived from, so you can confirm that each proxy is in use. To scrape your own target, pass the desired URL to driver.get(). After installing all the necessary libraries, you can try this code:

from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.common.by import By

# List of DataImpulse proxies
proxy_list = [
    "proxy1:port",
    "proxy2:port",
    "proxy3:port",
    # Add more proxies from your DataImpulse pool
]


def start_selenium_with_proxy(proxy):
    # Set up Chrome options
    chrome_options = Options()
    chrome_options.add_argument("--headless=new")
    # Add proxy to Chrome options
    chrome_options.add_argument(f"--proxy-server=http://{proxy}")
    # Initialize the Selenium browser with the proxy settings
    driver = webdriver.Chrome(options=chrome_options)
    return driver


# Example scraping function using rotating proxies
def scrape_website():
    for proxy in proxy_list:
        driver = start_selenium_with_proxy(proxy)
        try:
            # Navigate to the target website
            driver.get("https://httpbin.io/ip")
            print(driver.find_element(By.TAG_NAME, "body").text)
        finally:
            driver.quit()


# Run the scraping function
scrape_website()
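The loop above visits one URL once per proxy, which is handy for verifying the pool. In an actual scraper you would more likely have many URLs and want each one to go out through the next proxy in turn. Here is a minimal round-robin sketch of that idea using only the standard library. The helper names proxy_argument, rotating_proxies, and assign_proxies are hypothetical, and the sample hosts and ports are placeholders.

```python
import itertools


def proxy_argument(proxy, scheme="http"):
    """Build the Chrome command-line flag for one 'host:port' proxy."""
    return f"--proxy-server={scheme}://{proxy}"


def rotating_proxies(proxies):
    """Return an endless round-robin iterator over the proxy pool."""
    if not proxies:
        raise ValueError("proxy list is empty")
    return itertools.cycle(proxies)


def assign_proxies(urls, proxies):
    """Pair each URL with the next proxy in the rotation."""
    rotation = rotating_proxies(proxies)
    return [(url, next(rotation)) for url in urls]
```

Each (url, proxy) pair can then be handed to start_selenium_with_proxy(proxy) followed by driver.get(url). Picking proxies at random instead of round-robin is an equally reasonable choice.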

Free proxies generally offer weak protection and may even pose a risk: slow connections, phishing, and already-blocked IP addresses are just some of the potential dangers. That leaves cost as the main drawback of proxy rotation, since reliable proxies come from paid providers. Research the options and choose a provider that is reliable, fits your budget, and includes the features you need.


Using Human Behavior Simulation

By simulating human behavior, your headless browser can interact with pages more convincingly. Here are some of the main ways to imitate real user actions:

- Mouse movements – They create natural curves, varying speeds, and accelerations, imitating how real users move a mouse.

- Random clicks – Clicks are randomized in timing and slight positional shifts to mimic genuine user behavior.

- Keystrokes – Typing is adjusted to reflect natural typing speeds, pauses, and occasional typos for a human-like rhythm.

Add small delays between actions to simulate human behavior, randomize the mouse’s path, and adjust click locations. The simplest of these techniques is a random delay between requests. Let’s use three pages of https://www.scrapingcourse.com/ecommerce as the targets. Here’s an example of the code:

import random
import time

import requests


# make a GET request to the given URL and print the status code
def make_request(url):
    response = requests.get(url)
    print(f"Request to {url} returned status code: {response.status_code}")


# list of URLs to request
urls = [
    "https://www.scrapingcourse.com/ecommerce/page/1/",
    "https://www.scrapingcourse.com/ecommerce/page/2/",
    "https://www.scrapingcourse.com/ecommerce/page/3/",
]

# range for random wait time (in seconds)
min_wait = 1
max_wait = 5

# iterate through each URL in the list
for url in urls:
    make_request(url)
    wait_time = random.uniform(min_wait, max_wait)
    print(f"Waiting for {wait_time:.2f} seconds before the next request...")
    time.sleep(wait_time)

print("All requests completed.")

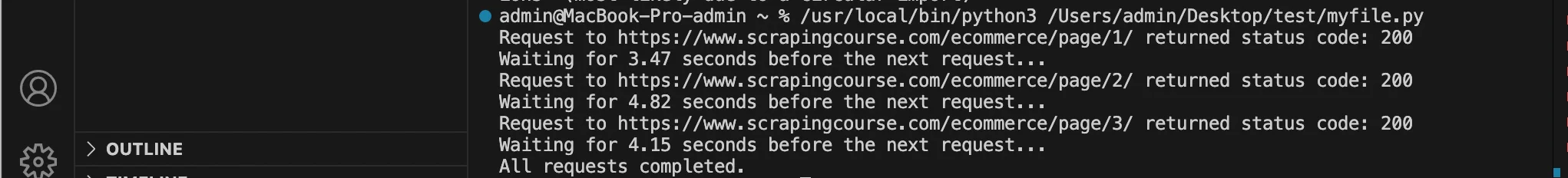

You’ll be able to see a similar response if it was done correctly:

Human behavior simulation adds realism to automated scripts. However, the randomized actions and delays increase complexity and slow down overall scraping speed.
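The mouse-movement and keystroke ideas from the list earlier in this section can be sketched without any browser at all. The code below generates a curved, slightly jittered path between two points using a quadratic Bezier curve, plus one random pause per typed character. The function names, the Bezier approach, and all default values are assumptions chosen for illustration, not a method prescribed by any library.

```python
import random


def curved_path(start, end, steps=20, jitter=2.0, rng=random):
    """Return integer points along a quadratic Bezier curve from start to end.

    A random control point bends the path, and small jitter is added to
    the intermediate points so that no two paths are identical.
    """
    (x0, y0), (x2, y2) = start, end
    # Control point: the midpoint pushed sideways by a random offset.
    cx = (x0 + x2) / 2 + rng.uniform(-100, 100)
    cy = (y0 + y2) / 2 + rng.uniform(-100, 100)
    points = []
    for i in range(steps + 1):
        t = i / steps
        x = (1 - t) ** 2 * x0 + 2 * (1 - t) * t * cx + t ** 2 * x2
        y = (1 - t) ** 2 * y0 + 2 * (1 - t) * t * cy + t ** 2 * y2
        if 0 < i < steps:  # keep the endpoints exact
            x += rng.uniform(-jitter, jitter)
            y += rng.uniform(-jitter, jitter)
        points.append((round(x), round(y)))
    return points


def typing_delays(text, min_delay=0.05, max_delay=0.3, rng=random):
    """Return one random pause (in seconds) per character of text."""
    return [rng.uniform(min_delay, max_delay) for _ in text]
```

With Selenium, the difference between consecutive points could be passed to ActionChains.move_by_offset(), and each delay to time.sleep() between single-character send_keys() calls. Points that fall outside the browser viewport would need to be clamped first.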

Using External Services (CapSolver)

There are many additional services that can help you with handling different types of CAPTCHAs. In this tutorial, we chose to mention the CapSolver service. CapSolver integrates with different proxy types so users can manage CAPTCHA-solving across several IP addresses. Using proxies makes requests appear as if they’re coming from multiple devices and locations, enhancing anonymity and lowering the risk of detection. To learn more about key stages in the integration of DataImpulse with CapSolver you can read a short guide.

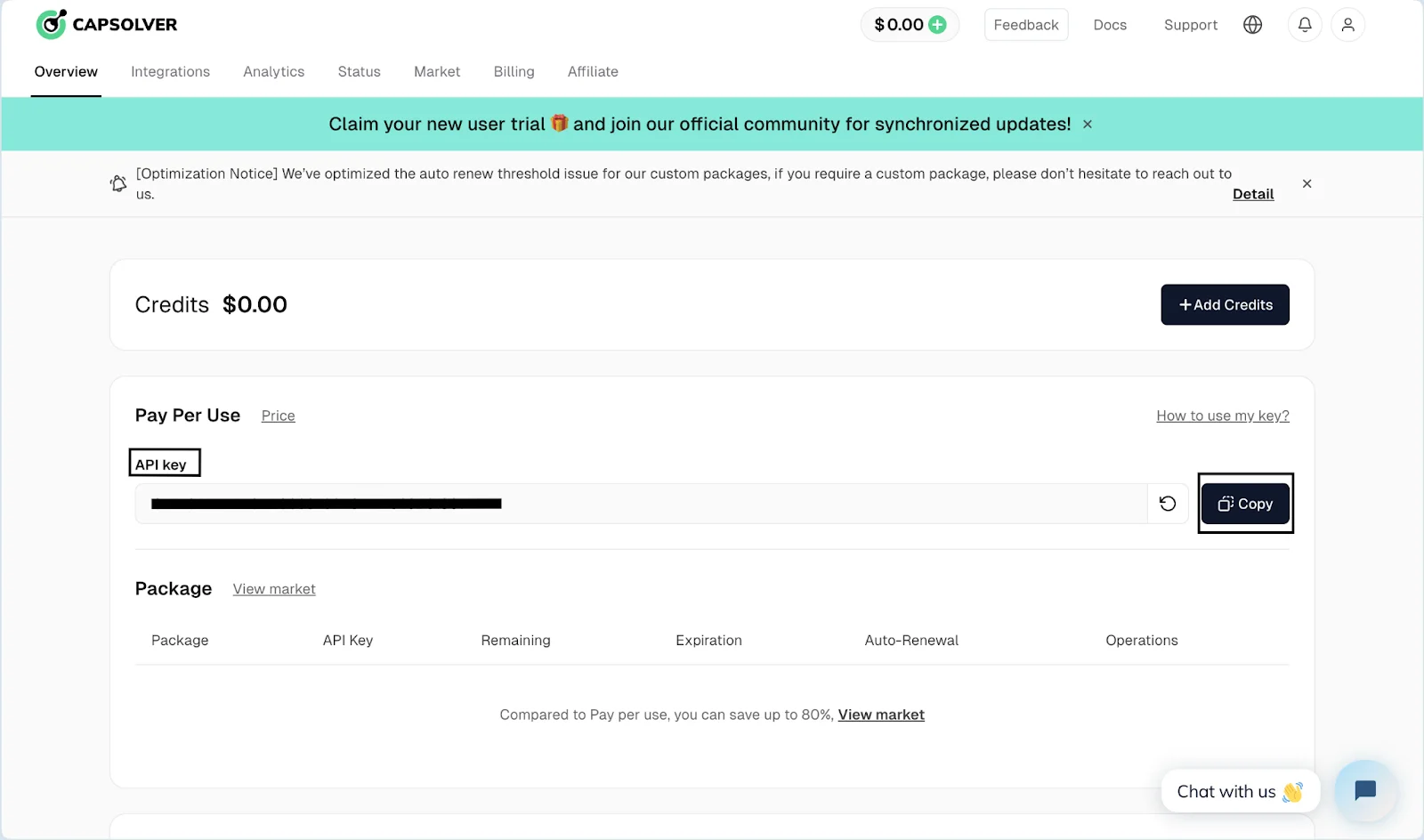

We’ll need an API key which you can find on your CapSolver dashboard.

Below is a Python example demonstrating how to utilize CapSolver’s API to handle CAPTCHAs. Before running the code, replace YOUR_API_KEY, XXX, and site_url with actual values.

# pip3 install requests
import time

import requests

# TODO: Set your configuration
api_key = "YOUR_API_KEY"  # Your CapSolver API key
site_key = "XXX"  # The site key for the target CAPTCHA
site_url = ""  # URL of the page with the CAPTCHA


def capsolver():
    payload = {
        "clientKey": api_key,
        "task": {
            "type": "ReCaptchaV2TaskProxyLess",
            "websiteKey": site_key,
            "websiteURL": site_url,
        },
    }
    response = requests.post("https://api.capsolver.com/createTask", json=payload)
    result = response.json()
    task_id = result.get("taskId")
    if not task_id:
        print("Failed to create task:", response.text)
        return
    print(f"Task ID received: {task_id} / Fetching result...")

    while True:
        time.sleep(3)  # Delay between checks
        payload = {"clientKey": api_key, "taskId": task_id}
        response = requests.post("https://api.capsolver.com/getTaskResult", json=payload)
        result = response.json()
        status = result.get("status")
        if status == "ready":
            return result.get("solution", {}).get("gRecaptchaResponse")
        if status == "failed" or result.get("errorId"):
            print("Solution retrieval failed! Response:", response.text)
            return


token = capsolver()
print(token)
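The polling loop above keeps running until the service reports "ready" or "failed", so a task that never leaves the processing state would block the script indefinitely. One possible refinement is to bound the wait. The sketch below assumes only the response fields already used above (status, solution, errorId). The function name poll_until_ready and its parameters are hypothetical, and the fetch step is passed in as a callable so the logic can be tried without network access.

```python
import time


def poll_until_ready(fetch_result, interval=3.0, timeout=120.0,
                     sleep=time.sleep, clock=time.monotonic):
    """Call fetch_result() until the task is ready, failed, or timed out.

    fetch_result is any zero-argument callable that returns the decoded
    JSON of a getTaskResult response (a dict with a "status" key).
    """
    deadline = clock() + timeout
    while clock() < deadline:
        result = fetch_result()
        status = result.get("status")
        if status == "ready":
            return result.get("solution", {})
        if status == "failed" or result.get("errorId"):
            raise RuntimeError(f"CAPTCHA task failed: {result}")
        sleep(interval)  # wait before asking again
    raise TimeoutError(f"no solution within {timeout} seconds")
```

In the script above, fetch_result could be a small lambda that wraps the requests.post(...).json() call to getTaskResult, and the token would then be read from the returned solution under the gRecaptchaResponse key.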

External services offer efficiency, scalability, and a higher success rate on complex CAPTCHAs, which frees developers to focus on other project needs. The disadvantages are cost, dependence on a third party’s reliability, and privacy concerns due to limited control over your data.

To sum up

Python is well known for its simplicity and extensive library support, making web automation and data scraping straightforward. With libraries like Selenium and Playwright, you can automate browser tasks and, with the right tools, bypass CAPTCHAs. Whichever method you choose, integrating your web scraper with proxies helps bypass IP-based restrictions, maintain anonymity, and reduce the risk of bans.

Get in touch with us today to learn more about the multiple scraping tools and our custom solutions.