
Python is quickly becoming a must-have skill for anyone who works remotely and wants to grow professionally. It's flexible, beginner-friendly, and has a simple syntax. Some projects involve a mountain of data, and the outcome often depends on how well you prioritize the work. Scraping web data with Python saves a lot of time and nerves.

In our previous tutorials, we covered web scraping for Amazon, eBay, and ChatGPT. This guide is dedicated to scraping video content from YouTube: we'll explain the main techniques and share the complete code with our readers.

What content is allowed to be scraped?

Retrieving data from the web is still considered a legal “grey area”. Websites impose strict policies on web scraping because it can compromise their security. When you scrape and analyze web data, you have to be careful: even research-driven findings can accidentally expose people's private information or lead to biased and unfair conclusions. The risk isn't limited to individuals; data can also reveal sensitive details about the organizations behind the websites. And if data is misused, it can damage a platform's reputation and its relationship with its audience.

Not everything is available: just as users have the right to privacy, companies have the right to keep certain aspects of their business confidential. If there is a red line, do not cross it. In general, public-domain content and publicly accessible metadata may be scraped, while private or DRM-protected content may not.

This tutorial focuses on public metadata, or in simple terms, “data about data”. When you scroll through YouTube videos on your device, you see details such as the channel name, the upload date, a short description under the video, the subscriber count, and comments. All of this is metadata. YouTube's terms of service do not permit automated data collection in general, but the platform does allow some non-commercial use cases, for example, academic research. One of the rules is to use the official YouTube Data API, so make sure you have enabled it. Now, let's move on to the key process.
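To make the “official API” route concrete, here is a minimal sketch that asks the YouTube Data API v3 `videos` endpoint for one video's public snippet and statistics. It assumes you already have an API key (the steps are described in Code 5) and uses only the standard library; `fetch_video_metadata` and `summarize_video` are our own hypothetical helper names, not part of any Google client library.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

API_URL = "https://www.googleapis.com/youtube/v3/videos"


def fetch_video_metadata(video_id: str, api_key: str) -> dict:
    """Hypothetical helper: request snippet and statistics for one video (videos.list)."""
    query = urllib.parse.urlencode(
        {"part": "snippet,statistics", "id": video_id, "key": api_key}
    )
    with urllib.request.urlopen(f"{API_URL}?{query}", timeout=30) as resp:
        return json.load(resp)


def summarize_video(response: dict) -> Optional[dict]:
    """Hypothetical helper: pick a few public fields out of a videos.list response."""
    items = response.get("items", [])
    if not items:
        return None  # unknown ID, private video, or deleted video
    item = items[0]
    snippet = item.get("snippet", {})
    stats = item.get("statistics", {})
    return {
        "title": snippet.get("title"),
        "channel": snippet.get("channelTitle"),
        "published_at": snippet.get("publishedAt"),
        # The API returns counts as strings, and likeCount can be absent
        "views": int(stats.get("viewCount", 0)),
        "likes": int(stats.get("likeCount", 0)),
    }
```

For example, `summarize_video(fetch_video_metadata("S-9_AHRq-LI", "YOUR_API_KEY"))` should return the title, channel, publish time, and counts for the video used throughout this tutorial, provided the key is valid.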

*This tutorial is intended for educational purposes only. We do not encourage or endorse any actions that violate platform policies, terms of service, or applicable laws. Users should always act responsibly and at their own risk.

Install Python and a few extras

Download Python 3.x from https://www.python.org/ and complete the installation on your computer. Open a terminal and install Requests using pip:

```bash
pip3 install requests
```

To run all the necessary commands, we use the terminal in Visual Studio Code (VS Code). Just download it from the official website and follow the installation instructions for your operating system.

To keep our environment safe and private, we route requests through DataImpulse proxies, so the target site sees the proxy's IP address instead of ours. Sign up, go to the Proxy section, and choose the plan you need. Note the proxy credentials (login, password, host, port) that DataImpulse provides; we'll need them in the next steps.
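Rather than pasting credentials into every script, you can keep them in environment variables and build the proxy URL in one place. The sketch below assumes variable names `DI_PROXY_USER`, `DI_PROXY_PASS`, and `DI_PROXY_PORT` (our own hypothetical names, not something DataImpulse defines) and percent-encodes the login and password so characters such as `@` or `:` do not break the URL.

```python
import os
from urllib.parse import quote

# Host taken from this tutorial; the environment variable names below are hypothetical
PROXY_HOST = "gw.dataimpulse.com"


def build_proxy_url(user: str, password: str, host: str, port: int) -> str:
    """Return http://user:password@host:port with the credentials percent-encoded."""
    return f"http://{quote(user, safe='')}:{quote(password, safe='')}@{host}:{port}"


def proxy_from_env() -> str:
    """Build the proxy URL from environment variables (KeyError if one is missing)."""
    return build_proxy_url(
        os.environ["DI_PROXY_USER"],
        os.environ["DI_PROXY_PASS"],
        PROXY_HOST,
        int(os.environ["DI_PROXY_PORT"]),
    )
```

The resulting string can be used as the `"proxy"` value in the yt-dlp options below or in a `requests` `proxies` dictionary; requests decodes percent-encoded proxy credentials before sending them.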

Lastly, install the yt-dlp library. It is both a command-line program and a Python library, widely used to download videos from YouTube and other video platforms. Use this command:

```bash
pip install yt-dlp requests
```
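If `pip` and `python` point at different interpreters, imports fail later in confusing ways. A quick check like the one below (our own hypothetical helper, built on the standard `importlib.metadata` module) reports which versions the current interpreter can see; `None` means the package is missing there.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if it is missing."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    for name, ver in installed_versions(["requests", "yt-dlp"]).items():
        print(f"{name}: {ver or 'NOT INSTALLED'}")
```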

Code 1. Downloading videos

The download() method belongs to the YoutubeDL class, the core of yt-dlp, a free, open-source tool that fetches the video and audio streams of a clip. In this tutorial, we'll use the video about the proxy rotator from our YouTube channel.

Make sure to replace user, password, and port with your actual DataImpulse proxy credentials. The host is gw.dataimpulse.com.

Here is the code you need:

```python
from yt_dlp import YoutubeDL

video_url = "https://youtu.be/S-9_AHRq-LI?si=07PJYpRm23m5uHCl"

opts = {
    # Proxy format: "http://user:password@host:port" or "http://host:port"
    "proxy": "http://user:password@gw.dataimpulse.com:port",
    "no_warnings": True,
}

with YoutubeDL(opts) as yt:
    yt.download([video_url])
```
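If you want your own progress messages instead of yt-dlp's built-in bar, yt-dlp accepts a `progress_hooks` list: each function receives a dictionary with a `status` key (`downloading`, `finished`, or `error`) plus byte counters while downloading. The sketch below is a minimal example under that assumption; `describe_progress` and `print_progress` are hypothetical names, and the `outtmpl` value is just one possible file-naming pattern.

```python
def describe_progress(d: dict) -> str:
    """Hypothetical helper: turn one yt-dlp progress-hook dictionary into a status line."""
    status = d.get("status")
    if status == "finished":
        return f"Done: {d.get('filename')}"
    if status == "downloading":
        done = d.get("downloaded_bytes") or 0
        # total_bytes is not always known, so fall back to yt-dlp's estimate
        total = d.get("total_bytes") or d.get("total_bytes_estimate")
        if total:
            return f"{done / total:.0%} of {total / 1_048_576:.1f} MiB"
        return f"{done / 1_048_576:.1f} MiB downloaded"
    return f"Status: {status}"


def print_progress(d: dict) -> None:
    # yt-dlp calls every function in "progress_hooks" with a status dictionary
    end = "\r" if d.get("status") == "downloading" else "\n"
    print(describe_progress(d), end=end)


opts = {
    "proxy": "http://user:password@gw.dataimpulse.com:port",
    "no_warnings": True,
    "noprogress": True,  # hide yt-dlp's built-in progress bar
    "progress_hooks": [print_progress],
    "outtmpl": "%(title)s [%(id)s].%(ext)s",  # name files after title and video ID
}
```

Pass `opts` to `YoutubeDL(opts)` exactly as in the code above.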

Code 2. Scraping video subtitles

Subtitles contain structured text that represents what's actually being said in a video, so you don't need to process the audio. They are useful in many cases, for example, training language models or searching videos by keyword. The code below scrapes subtitles and saves them to a .vtt file.

```python
import yt_dlp

VIDEO_URL = "https://www.youtube.com/watch?v=S-9_AHRq-LI"
PROXY = "http://username:password@gw.dataimpulse.com:port"  # or None to connect directly
LANGS = ["en"]  # subtitle languages (or ["all"])


def download_subtitles():
    ydl_opts = {
        "writesubtitles": True,      # subtitles uploaded by the channel
        "writeautomaticsub": True,   # YouTube's auto-generated captions
        "subtitleslangs": LANGS,
        "subtitlesformat": "vtt",
        "no_warnings": True,
        "skip_download": True,       # save subtitle files only, not the video
        "quiet": True,
    }
    if PROXY:
        ydl_opts["proxy"] = PROXY

    print(f"Downloading subtitles for: {VIDEO_URL}")
    with yt_dlp.YoutubeDL(ydl_opts) as ydl:
        # download=True is what triggers writing the .vtt files
        info = ydl.extract_info(VIDEO_URL, download=True)

    vid = info.get("id", "unknown")
    title = info.get("title", "Untitled")
    print(f"Subtitles downloaded for: {title} ({vid})")

    subs = info.get("subtitles") or {}
    auto = info.get("automatic_captions") or {}
    if subs or auto:
        print("Available subtitle languages:")
        for lang in sorted(set(subs) | set(auto)):
            print(" -", lang)
    else:
        print("No subtitles found.")

    print("Files saved successfully.")


if __name__ == "__main__":
    download_subtitles()
```
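The .vtt files contain cue timings and, for auto-generated captions, inline word-timing tags and lines that repeat as the captions scroll. For keyword search or model training you usually want plain text, so here is a rough cleanup sketch. It assumes YouTube-style WebVTT without numeric cue identifiers; `vtt_to_text` is a hypothetical helper, not part of yt-dlp.

```python
import html
import re
from pathlib import Path

TAG = re.compile(r"<[^>]+>")  # inline tags such as <c> or <00:00:01.200>


def vtt_to_text(vtt: str) -> str:
    """Hypothetical helper: strip header, timings, and tags; drop consecutive repeats."""
    lines = []
    for raw in vtt.splitlines():
        line = raw.strip()
        # Skip blank lines, the WEBVTT signature, and cue timing lines
        if not line or line.startswith("WEBVTT") or "-->" in line:
            continue
        # Skip header metadata and comments
        if line.startswith(("Kind:", "Language:", "NOTE")):
            continue
        text = html.unescape(TAG.sub("", line)).strip()
        # Auto-generated captions repeat the previous line as the next cue scrolls in
        if text and (not lines or lines[-1] != text):
            lines.append(text)
    return "\n".join(lines)


if __name__ == "__main__":
    # yt-dlp names subtitle files like "<title> [<id>].en.vtt" by default
    for path in Path(".").glob("*.vtt"):
        print(vtt_to_text(path.read_text(encoding="utf-8")))
```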

Code 3. Getting video data

Under each YouTube video, you can find its title, duration, language, view count, and other details that help you decide whether the video is relevant to you. The next piece of code fetches basic metadata about a specific YouTube video. Save it for later:

```python
from __future__ import annotations  # lets "str | None" hints work before Python 3.10

from yt_dlp import YoutubeDL

VIDEO_URL = "https://www.youtube.com/watch?v=S-9_AHRq-LI"
PROXY = "http://username:password@gw.dataimpulse.com:port"  # your proxy (or None)


def fetch_video_info(url: str, proxy: str | None = None) -> dict:
    """Fetch basic metadata about a YouTube video using yt-dlp (with proxy support)."""
    opts = {
        "quiet": True,          # suppress yt-dlp internal output
        "no_warnings": True,
        "skip_download": True,  # do not download the video
        "proxy": proxy,         # proxy configuration
    }
    with YoutubeDL(opts) as ydl:
        info = ydl.extract_info(url, download=False)

    video_data = {
        "title": info.get("title", "Unknown"),
        "uploader": info.get("uploader", "Unknown"),
        "duration_sec": info.get("duration", 0),
        "width": info.get("width"),
        "height": info.get("height"),
        "language": info.get("language", "Unknown"),
        "view_count": info.get("view_count", 0),
        "like_count": info.get("like_count", 0),
        "upload_date": info.get("upload_date"),  # YYYYMMDD string
    }
    return video_data


if __name__ == "__main__":
    print(f"Fetching video info via proxy: {PROXY or 'No proxy'}")
    info = fetch_video_info(VIDEO_URL, PROXY)
    print("\nVideo Information:")
    for key, value in info.items():
        print(f"{key:>15}: {value}")
```
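The raw fields become more useful with a little post-processing. A minimal sketch, assuming the dictionary shape returned by `fetch_video_info()`: yt-dlp reports `upload_date` as a `YYYYMMDD` string, and the “likes per 1,000 views” ratio is our own illustrative metric, not something YouTube or yt-dlp provides. All function names here are hypothetical.

```python
from datetime import date, datetime
from typing import Optional


def parse_upload_date(value: Optional[str]) -> Optional[date]:
    """Convert yt-dlp's YYYYMMDD upload_date string into a date object."""
    return datetime.strptime(value, "%Y%m%d").date() if value else None


def likes_per_thousand_views(views: Optional[int], likes: Optional[int]) -> Optional[float]:
    """Illustrative engagement ratio; None when views are missing/zero or likes are hidden."""
    if not views or likes is None:
        return None
    return round(likes * 1000 / views, 1)


def enrich(video_data: dict) -> dict:
    """Return a copy of fetch_video_info()'s result with derived fields added."""
    out = dict(video_data)
    out["upload_date"] = parse_upload_date(video_data.get("upload_date"))
    out["likes_per_1k_views"] = likes_per_thousand_views(
        video_data.get("view_count"), video_data.get("like_count")
    )
    return out
```

For example, `print(enrich(fetch_video_info(VIDEO_URL, PROXY)))` prints the metadata with a real date and the extra ratio.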

Code 4. Collecting video comments

Want to understand how the audience feels about the content, collect training data for AI, or spot viral ideas? Scrape the video comments. Set the getcomments option to True, then call the extract_info() method; the script below prints the first five comments from the YouTube video. Try this one:

```python
from __future__ import annotations  # lets "str | None" hints work before Python 3.10

from yt_dlp import YoutubeDL

VIDEO_URL = "https://www.youtube.com/watch?v=S-9_AHRq-LI"
PROXY = "http://username:password@gw.dataimpulse.com:10000"  # your proxy (or None)


def fetch_comments(url: str, proxy: str | None = None) -> list:
    opts = {
        "quiet": True,
        "no_warnings": True,
        "skip_download": True,
        "extract_flat": False,
        "getcomments": True,  # collect comments (can be slow on popular videos)
        "proxy": proxy,
    }

    print(f"Fetching comments for: {url}")
    with YoutubeDL(opts) as ydl:
        info = ydl.extract_info(url, download=False)

    comments = info.get("comments") or []
    # comment_count can be missing or None, so fall back to the number fetched
    comment_count = info.get("comment_count") or len(comments)
    print(f"\nTotal comments found: {comment_count}")

    if not comments:
        print("No comments available or comments disabled.")
        return []

    print("\nShowing first 5 comments:\n")
    for i, comm in enumerate(comments[:5], 1):
        author = comm.get("author") or "Anonymous"
        text = (comm.get("text") or "").replace("\n", " ")
        print(f"{i:02d}. {author}: {text}")

    return comments


if __name__ == "__main__":
    fetch_comments(VIDEO_URL, PROXY)
```

The fetch_comments function asks yt-dlp to request and extract the video's comment data. Note that yt-dlp reads comments from YouTube's web endpoints, not from the official YouTube Data API.
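Popular videos can have thousands of comments, and fetching all of them takes a while. yt-dlp's YouTube extractor documents `max_comments` and `comment_sort` extractor arguments for this. The options below pass them through the Python API's `extractor_args` dictionary (check the README of your yt-dlp version if they are rejected). The `top_comments` helper is hypothetical and assumes yt-dlp marks top-level comments with `parent == "root"`.

```python
# Merge into the options of fetch_comments(). In yt-dlp's Python API,
# extractor_args maps an extractor name to {argument: [string values]}.
COMMENT_OPTS = {
    "getcomments": True,
    "skip_download": True,
    "extractor_args": {
        "youtube": {
            "max_comments": ["200"],  # stop after about 200 comments in total
            "comment_sort": ["top"],  # "top" or "new"
        }
    },
}


def top_comments(comments: list, n: int = 5) -> list:
    """Hypothetical helper: the n most-liked top-level comments (replies are skipped)."""
    roots = [c for c in comments if c.get("parent", "root") == "root"]
    return sorted(roots, key=lambda c: c.get("like_count") or 0, reverse=True)[:n]
```

After merging `COMMENT_OPTS` into `opts`, call `top_comments(fetch_comments(VIDEO_URL, PROXY))` to rank what was collected.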

Code 5. Scraping channel information

Channel data is a useful starting point for SEO research: which topics rank well, which videos drive traffic, and how the channel performs in search. Before moving to the code, get an API key from Google Cloud Console.

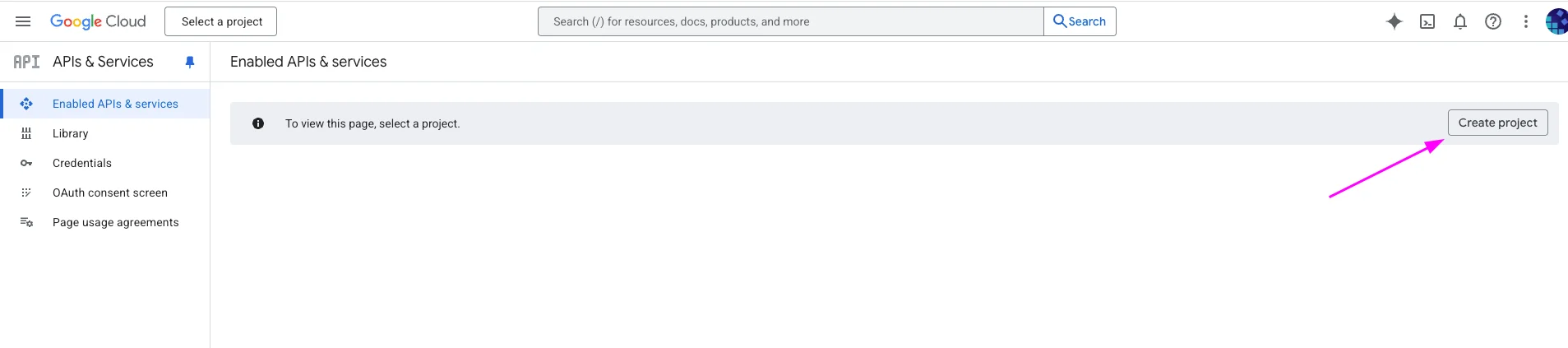

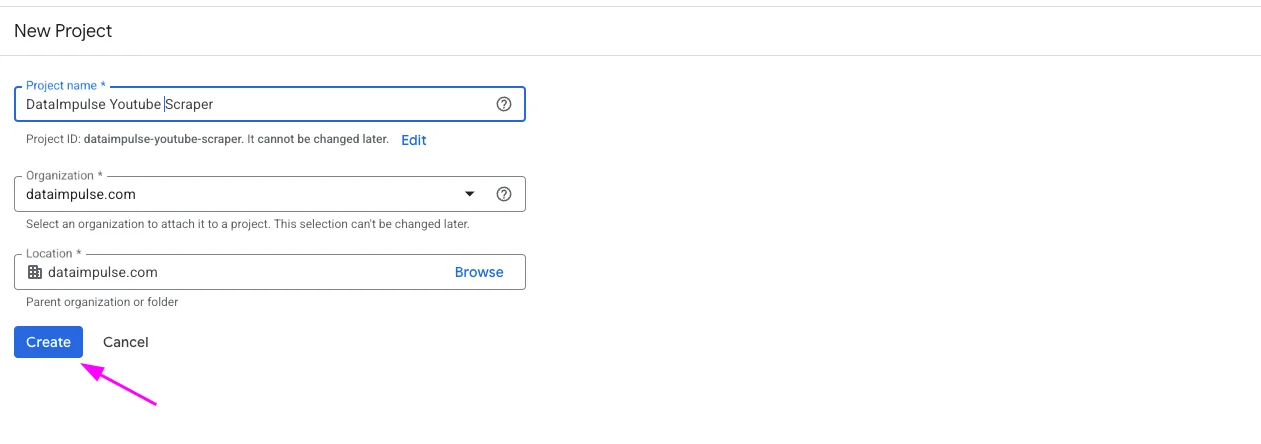

1. Go to Google Cloud Console and create a new project. Enter a project name, organization, and location, then click Create.

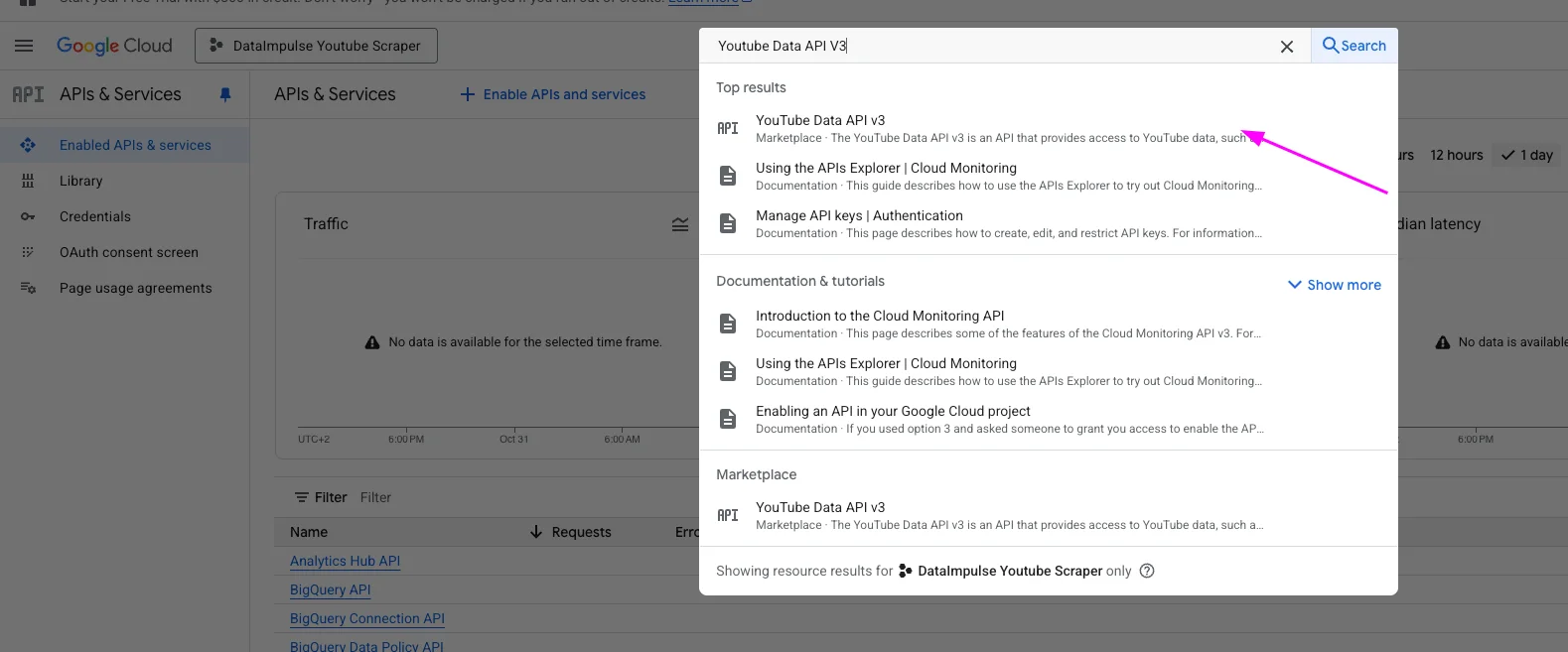

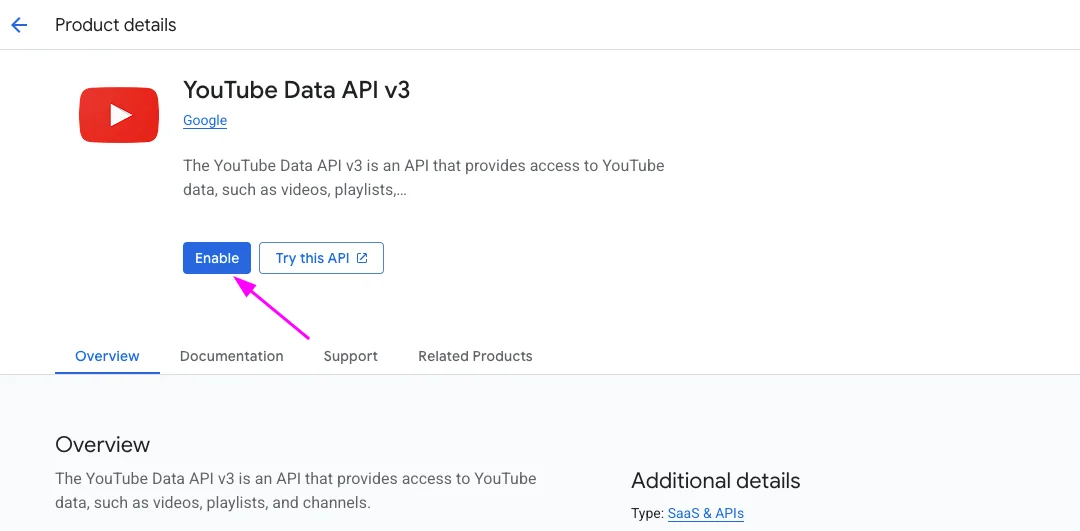

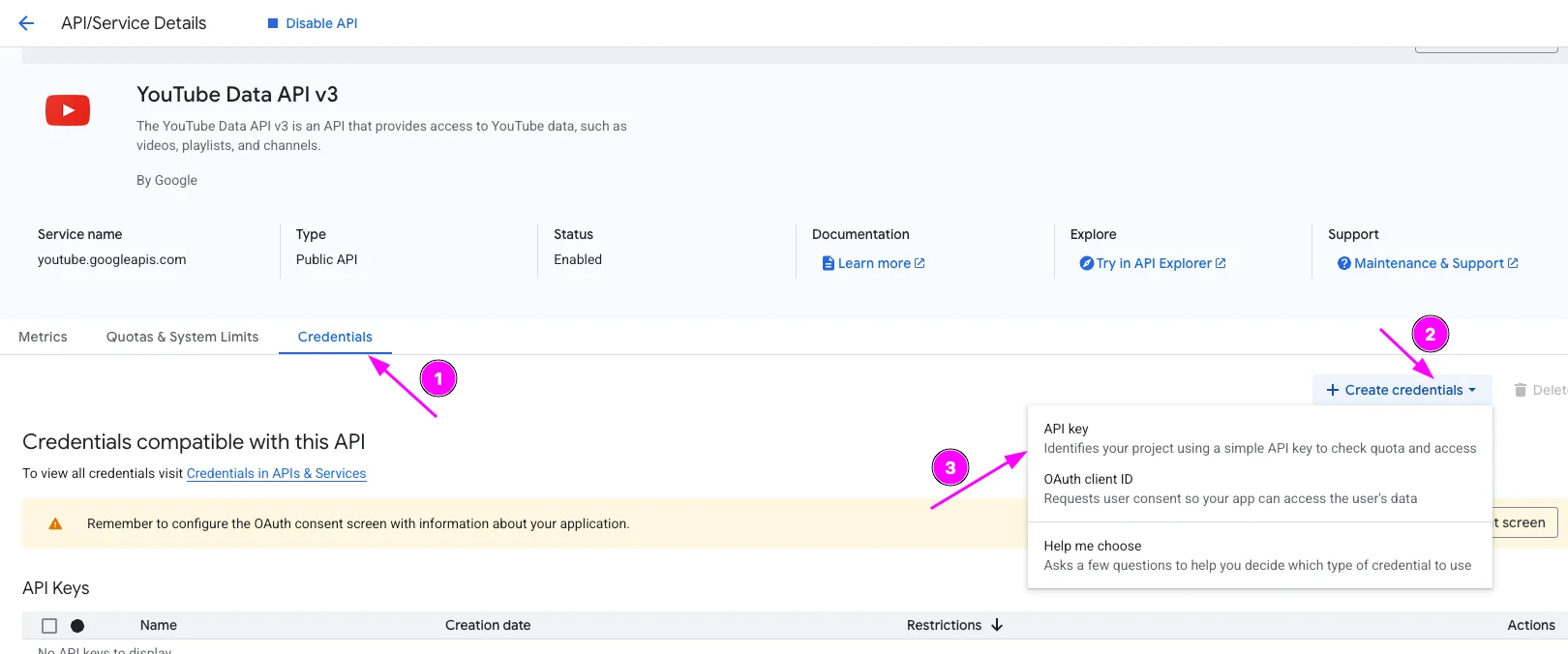

2. Open Enable APIs and Services, search for YouTube Data API v3, and enable it.

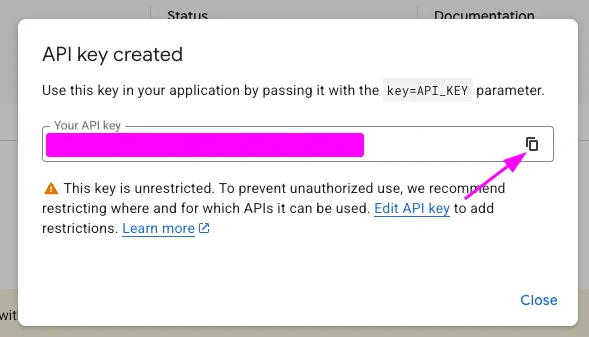

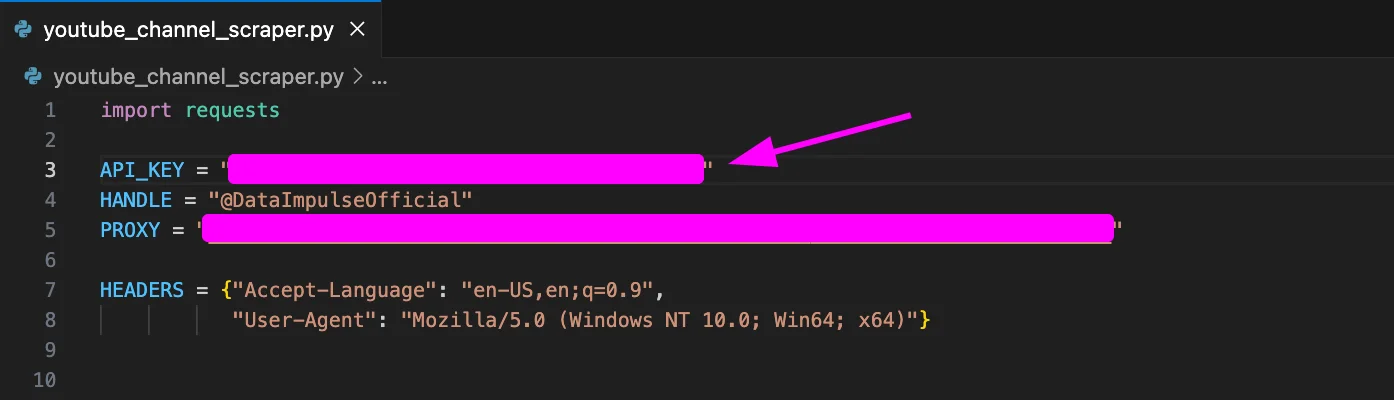

3. Go to Credentials, create an API key, and copy it into your code.

Now, with the YouTube Data API v3, you can retrieve the channel's name, description, and subscriber count. Here is the code:

```python
import requests

API_KEY = "Your API key"
HANDLE = "@DataImpulseOfficial"
# requests tunnels HTTPS through an HTTP proxy, so the proxy URL starts with http://
PROXY = "http://username:password@gw.dataimpulse.com:port"

HEADERS = {
    "Accept-Language": "en-US,en;q=0.9",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
}
PROXIES = {"http": PROXY, "https": PROXY} if PROXY else None
API_URL = "https://www.googleapis.com/youtube/v3/channels"


def get_channel_id_by_handle(handle):
    """Resolve a handle such as @DataImpulseOfficial to a channel ID."""
    params = {"part": "id", "forHandle": handle, "key": API_KEY}
    r = requests.get(API_URL, params=params, headers=HEADERS, proxies=PROXIES, timeout=30)
    r.raise_for_status()
    items = r.json().get("items", [])
    if not items:
        raise SystemExit(f"No channel found for handle {handle}")
    return items[0]["id"]


def get_channel_data(channel_id):
    """Fetch the channel's snippet (name, description) and statistics (subscribers)."""
    params = {"part": "snippet,statistics", "id": channel_id, "key": API_KEY}
    r = requests.get(API_URL, params=params, headers=HEADERS, proxies=PROXIES, timeout=30)
    r.raise_for_status()
    return r.json()["items"][0]


def main():
    channel_id = get_channel_id_by_handle(HANDLE)
    data = get_channel_data(channel_id)

    name = data["snippet"]["title"]
    desc = data["snippet"].get("description", "")
    # subscriberCount is a string and may be absent if the channel hides it
    subs = int(data["statistics"].get("subscriberCount", 0))

    print("Channel:", name)
    print("URL:", f"https://www.youtube.com/channel/{channel_id}")
    print("Description: " + (desc or "(no description)"))
    print(f"Subscribers: {subs:,}")


if __name__ == "__main__":
    main()
```
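The `statistics` object requested in `get_channel_data()` also carries total views and the number of public videos, which gives a rough sense of channel performance. A minimal sketch, assuming the field names of the Data API `channels` resource (`viewCount`, `videoCount`, `subscriberCount` returned as strings, plus the boolean `hiddenSubscriberCount`); `summarize_statistics` and the average-views figure are our own illustrative additions.

```python
def summarize_statistics(statistics: dict) -> dict:
    """Hypothetical helper: derive simple figures from a channels.list statistics object."""
    views = int(statistics.get("viewCount", 0))
    videos = int(statistics.get("videoCount", 0))
    hidden = statistics.get("hiddenSubscriberCount", False)
    return {
        "total_views": views,
        "videos": videos,
        # Report None rather than a misleading 0 when the channel hides its subscribers
        "subscribers": None if hidden else int(statistics.get("subscriberCount", 0)),
        "avg_views_per_video": round(views / videos) if videos else None,
    }
```

Inside `main()`, `print(summarize_statistics(data["statistics"]))` shows the derived figures next to the existing output.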

Code 6. Scraping search results

In this example, the keyword is “DataImpulse” and the script returns the first five results (MAX_RESULTS = 5). A few settings skip playlists and suppress warnings and unnecessary output. For each video found, the script prints the title, the channel name, the view count, the duration, and links to both the video and the channel.

Save the code:

```python
from yt_dlp import YoutubeDL

MAX_RESULTS = 5
QUERY = "DataImpulse"
PROXY = "http://username:password@gw.dataimpulse.com:port"

opts = {
    "proxy": PROXY,
    "default_search": "ytsearch",
    "noplaylist": True,
    "no_warnings": True,
    "extract_flat": True,  # list search hits without resolving every video page
    "quiet": True,
}


def format_duration(seconds):
    """Convert seconds to mm:ss, or hh:mm:ss for videos an hour or longer."""
    if not seconds:
        return "00:00"
    seconds = int(seconds)
    h, rem = divmod(seconds, 3600)
    m, s = divmod(rem, 60)
    if h:
        return f"{h:02d}:{m:02d}:{s:02d}"
    return f"{m:02d}:{s:02d}"


def main():
    with YoutubeDL(opts) as yt:
        # "ytsearch5:DataImpulse" asks yt-dlp for the first 5 search results
        search_query = f"ytsearch{MAX_RESULTS}:{QUERY}"
        info = yt.extract_info(search_query, download=False)

    entries = info.get("entries") or []
    data = []
    for video in entries:
        data.append({
            "title": video.get("title"),
            "channel": video.get("channel"),
            "view_count": video.get("view_count"),
            "duration": video.get("duration"),
            "url": video.get("url"),
            "channel_url": video.get("channel_url"),
        })

    print(f"Showing {len(data)} video results:\n")
    for item in data:
        # Flat entries can lack fields; f-strings avoid "str + None" TypeErrors
        print(f"Title: {item['title']}")
        print(f"Channel: {item['channel']}")
        print(f"Views: {item['view_count']}")
        print(f"Duration: {format_duration(item['duration'])}")
        print(f"Video URL: {item['url']}")
        print(f"Channel URL: {item['channel_url']}")
        print("-" * 60)


if __name__ == "__main__":
    main()
```
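Printing to the console is fine for a quick look, but to compare results over time you will probably want them in a file. A minimal sketch using the standard `csv` module; the column list mirrors the dictionaries built in `main()`, and `write_results_csv` and `results_to_csv_string` are hypothetical helper names.

```python
import csv
import io

# Keys match the dictionaries built in main() of the search script
FIELDS = ["title", "channel", "view_count", "duration", "url", "channel_url"]


def write_results_csv(rows, fileobj) -> int:
    """Write search-result dictionaries to an open text file; return the row count."""
    writer = csv.DictWriter(fileobj, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    count = 0
    for row in rows:
        writer.writerow(row)  # None values become empty cells
        count += 1
    return count


def results_to_csv_string(rows) -> str:
    """Return the CSV text instead of writing a file (handy for quick checks)."""
    buf = io.StringIO()
    write_results_csv(rows, buf)
    return buf.getvalue()
```

In `main()`, after building `data`, you could add `with open("search_results.csv", "w", newline="", encoding="utf-8") as f: write_results_csv(data, f)`; the file name is arbitrary.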

Key Highlights:

- Web scraping is still in a legal and ethical “grey area”. Platforms have strict policies because scraping can affect security, privacy, or copyrighted content.

- Not everything can be scraped. Public domain and publicly accessible metadata may be allowed, but private or DRM-protected content is prohibited.

- You need the yt-dlp library (its YoutubeDL class does the work) to download videos and extract video data.

- To collect info about the channel, get an API key from Google Cloud Console and enable YouTube Data API v3.

- With DataImpulse proxies, you are less likely to run into access denials or unsafe environments. We protect your privacy, keep your requests secure, and help you access the data you need.