Every online activity leaves a trace; sometimes that trace endangers your safety or gets your web scraper blocked. Proxies help you stay under the radar of anti-bot systems without slowing down your tasks. In this article, we show you how to integrate DataImpulse proxies in C# using .NET so you can stop worrying about hitting rate limits or leaving an opening for malicious actors.

Getting started

First, you need to make sure that you have several necessary tools installed on your device:

- Visual Studio Code (Visual Studio 2022 or any other IDE that supports .NET is also fine)

- .NET 8

- HtmlAgilityPack (this tutorial uses version 1.6.11)

As we use proxies in this tutorial, you need a DataImpulse account and a plan that suits your needs. We have a step-by-step guide on how to do that.

In this tutorial, we scrape our website, DataImpulse.com, as an example. However, you can easily adjust the code according to your tasks and scrape whatever sources you need. Of course, you should always ensure that you never violate the terms of service of websites you collect data from.

Creating an HTTP client

First, create an HTTP client that routes your traffic via a designated proxy server. It is a simple console app that sends a request to a URL and retrieves the response, all via the proxy. You can copy the code below.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;

class HttpClientApp
{
    public static async Task Main(string[] args)
    {
        await DownloadPageAsync();

        Console.WriteLine("Press Enter to exit.");
        Console.ReadLine(); // Keep the console open to display the result
    }

    private static async Task DownloadPageAsync()
    {
        string page = "https://api.ipify.org/"; // Example API that returns the caller's public IP address

        // Configure the proxy
        var proxy = new WebProxy("gw.dataimpulse.com:823")
        {
            UseDefaultCredentials = false,
            Credentials = new NetworkCredential("your login", "your password")
        };

        // Set up the HTTP client handler with the proxy
        var httpClientHandler = new HttpClientHandler
        {
            Proxy = proxy,
            UseProxy = true
        };

        using (var client = new HttpClient(handler: httpClientHandler, disposeHandler: true))
        {
            try
            {
                // Send the HTTP GET request
                var response = await client.GetAsync(page);

                // Throw an HttpRequestException if the status code is not a success code
                response.EnsureSuccessStatusCode();

                // Read the content of the response
                string result = await response.Content.ReadAsStringAsync();
                Console.WriteLine("Response received: ");
                Console.WriteLine(result);
            }
            catch (HttpRequestException e)
            {
                Console.WriteLine($"Request error: {e.Message}");
            }
            catch (Exception ex)
            {
                Console.WriteLine($"Unexpected error: {ex.Message}");
            }
        }
    }
}
```

To run this and the following apps, type the following command in the terminal under the root directory of your app.

dotnet run --project NameOfProject

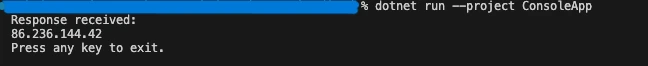

The output should look like this:
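The client above hard-codes the login and password, which is easy to leak once the code lands in a repository. As a minimal sketch, the proxy setup could be moved into a helper that reads the credentials from environment variables. The class name `ProxyFactory` and the variable names `DATAIMPULSE_LOGIN` and `DATAIMPULSE_PASSWORD` are assumptions made for this example; they are not defined by DataImpulse or .NET.

```csharp
using System;
using System.Net;

static class ProxyFactory
{
    // Builds a WebProxy for the given "host:port" endpoint.
    // The environment variable names are hypothetical; pick any names you like.
    public static WebProxy FromEnvironment(string hostAndPort)
    {
        var login = Environment.GetEnvironmentVariable("DATAIMPULSE_LOGIN");
        var password = Environment.GetEnvironmentVariable("DATAIMPULSE_PASSWORD");

        if (string.IsNullOrEmpty(login) || string.IsNullOrEmpty(password))
        {
            throw new InvalidOperationException(
                "Set DATAIMPULSE_LOGIN and DATAIMPULSE_PASSWORD before running the app.");
        }

        // WebProxy prepends "http://" when the address has no scheme
        return new WebProxy(hostAndPort)
        {
            Credentials = new NetworkCredential(login, password)
        };
    }
}
```

With this helper in the project, `var proxy = ProxyFactory.FromEnvironment("gw.dataimpulse.com:823");` would replace the inline `new WebProxy(...)` initializer.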

Implementing proxy rotation

It’s not enough to route your traffic via a single proxy server. To avoid rate limits and blocks, you should spread your requests across different IP addresses, ideally using a new one for every request. So, the next step is to build a proxy rotator that sends each request through a different address from our pool.

```csharp
using System;
using System.Collections.Generic;
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;

class ProxyRotator
{
    // List of proxies to rotate through
    private static readonly List<WebProxy> proxies = new List<WebProxy>
    {
        new WebProxy("http://gw.dataimpulse.com:10000"),
        new WebProxy("http://gw.dataimpulse.com:10001"),
        new WebProxy("http://gw.dataimpulse.com:10002")
    };

    public static async Task Main(string[] args)
    {
        await MakeRequestsWithRotation();
    }

    private static async Task MakeRequestsWithRotation()
    {
        // Example URL to request
        string url = "https://api.ipify.org/";

        for (int i = 0; i < proxies.Count; i++)
        {
            // Send one request through each proxy in turn
            Console.WriteLine($"Using proxy: {proxies[i].Address}");
            try
            {
                var response = await SendRequestWithProxy(url, proxies[i]);
                if (response.IsSuccessStatusCode)
                {
                    Console.WriteLine($"Request succeeded with proxy {proxies[i].Address}");
                    string responseBody = await response.Content.ReadAsStringAsync();
                    Console.WriteLine($"IP address from API website: {responseBody}"); // Print or process the response as needed
                }
                else
                {
                    Console.WriteLine($"Request failed with proxy {proxies[i].Address}, Status Code: {response.StatusCode}");
                }
            }
            catch (Exception ex)
            {
                Console.WriteLine($"Exception with proxy {proxies[i].Address}: {ex.Message}");
            }
        }
    }

    // Sends a GET request through a specific proxy
    private static async Task<HttpResponseMessage> SendRequestWithProxy(string url, WebProxy proxy)
    {
        HttpClientHandler handler = new HttpClientHandler
        {
            Proxy = proxy,
            UseProxy = true
        };

        using (HttpClient client = new HttpClient(handler))
        {
            // GetAsync buffers the response body by default, so it can still be read after the client is disposed
            return await client.GetAsync(url);
        }
    }
}
```

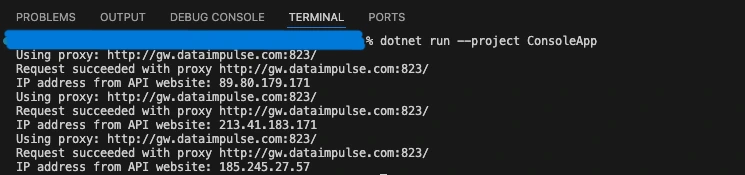

In the example above, we provide a list of proxies to rotate through (3 proxies in our case) and check the rotating IP addresses by the http://api.ipify.org/ URL. The result should look like this:
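The rotator above walks the pool in a fixed order. If you would rather pick an address at random for each request, a minimal sketch could look like the helper below. `ProxyPool` and `PickRandom` are hypothetical names introduced for this example; `Random.Shared` is the thread-safe generator available since .NET 6.

```csharp
using System;
using System.Collections.Generic;
using System.Net;

static class ProxyPool
{
    // Returns one proxy chosen uniformly at random from a non-empty pool.
    public static WebProxy PickRandom(IReadOnlyList<WebProxy> pool)
    {
        if (pool == null || pool.Count == 0)
        {
            throw new ArgumentException("The proxy pool is empty.", nameof(pool));
        }

        // Next(n) returns an index in the range [0, n)
        return pool[Random.Shared.Next(pool.Count)];
    }
}
```

Inside the request loop, `ProxyPool.PickRandom(proxies)` would then take the place of the indexed access `proxies[i]`. Random selection can pick the same address twice in a row, so it suits larger pools better than a list of three.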

Building a proxy checker

To make sure the proxies work, we set up a proxy checker. It sends an HTTP request through each proxy and reports whether that proxy is working. We use https://api.ipify.org/ as the test URL, but any other URL will do.

```csharp
using System;
using System.Collections.Generic;
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;

class ProxyChecker
{
    public static async Task Main(string[] args)
    {
        string proxyAddress = "gw.dataimpulse.com"; // Replace with your proxy address

        // Replace with your proxy ports (the end port is exclusive)
        var proxyPortStart = 10000;
        var proxyPortEnd = 10100;

        var tasks = new List<Task>();
        for (var proxyPort = proxyPortStart; proxyPort < proxyPortEnd; proxyPort++)
        {
            tasks.Add(CheckProxy(proxyAddress, proxyPort));
        }

        // Wait until every check has finished
        await Task.WhenAll(tasks);
    }

    public static async Task CheckProxy(string proxyAddress, int proxyPort)
    {
        var proxy = new WebProxy(proxyAddress, proxyPort)
        {
            // Remove this line if the proxy does not require credentials
            Credentials = new NetworkCredential("your login", "your password"),
            BypassProxyOnLocal = false
        };

        var httpClientHandler = new HttpClientHandler
        {
            Proxy = proxy,
            UseProxy = true
        };

        using (var client = new HttpClient(httpClientHandler))
        {
            try
            {
                client.Timeout = TimeSpan.FromSeconds(10); // Set a timeout
                var response = await client.GetAsync("https://api.ipify.org/"); // You can use any URL for testing
                if (response.IsSuccessStatusCode)
                {
                    Console.WriteLine("Proxy is working: " + proxyAddress + ":" + proxyPort);
                }
                else
                {
                    Console.WriteLine("Proxy is not working (Invalid response): " + proxyAddress + ":" + proxyPort);
                }
            }
            catch (Exception ex)
            {
                Console.WriteLine("Proxy is not working (Exception): " + proxyAddress + ":" + proxyPort + " - " + ex.Message);
            }
        }
    }
}
```

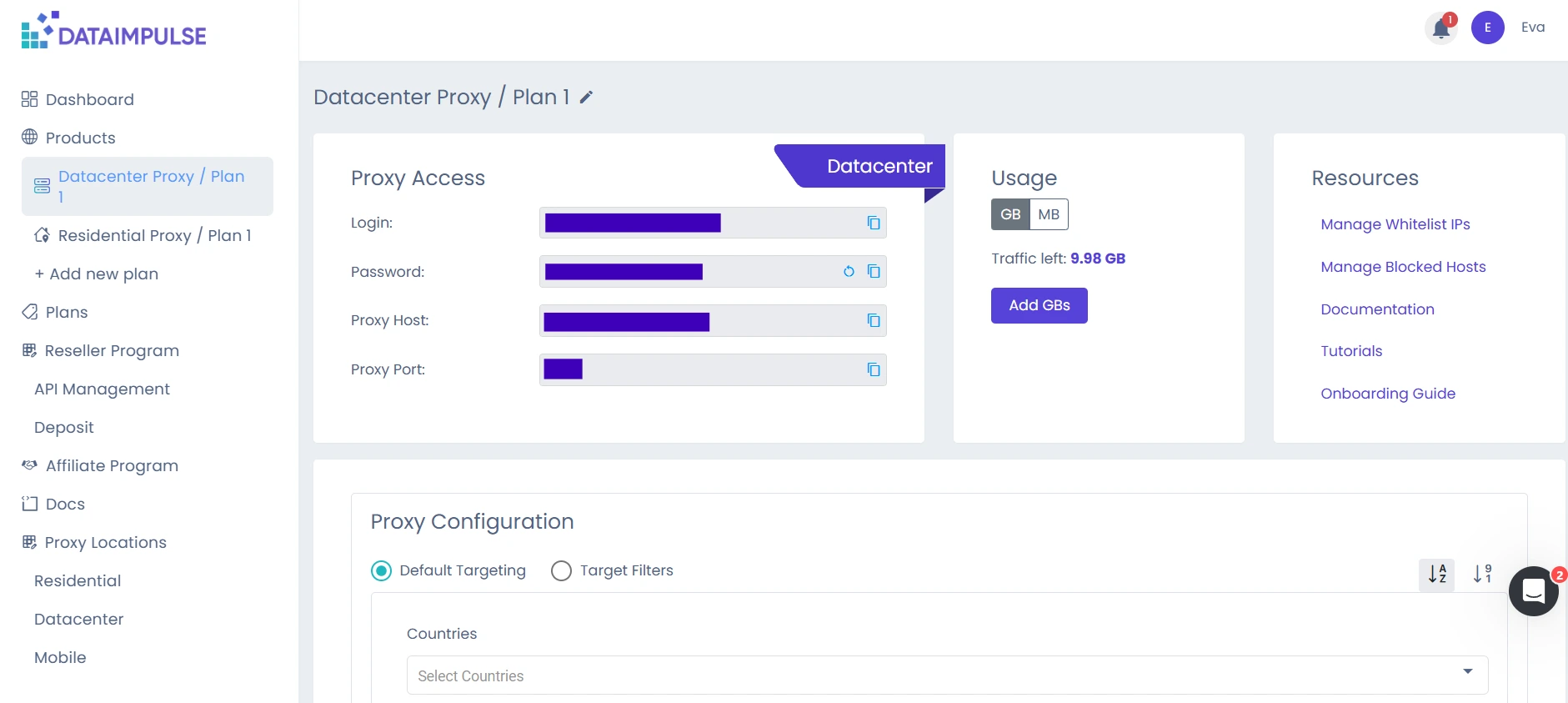

Here, you must specify the hostname, port, and credentials. You can find them on your DI dashboard, on a necessary plan tab. You also need to set a timeout in seconds.

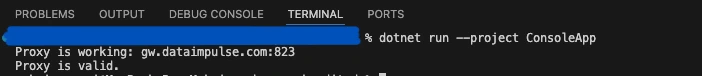

Here is an example of the result you get:
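The checker above starts a request for every port in the range at the same moment, which is 100 simultaneous connections with the values shown. If that turns out to be too aggressive for your machine or plan, one option is to cap the number of checks in flight with a `SemaphoreSlim`. The sketch below is only an illustration: `ThrottledChecker`, `CheckPortsAsync`, and the `checkPort` delegate are hypothetical names, and the delegate stands in for a variant of `CheckProxy` that returns `true` or `false` instead of printing.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

static class ThrottledChecker
{
    // Runs checkPort for every port with at most maxParallel checks in flight.
    // Returns a map from port to "is working".
    public static async Task<IReadOnlyDictionary<int, bool>> CheckPortsAsync(
        IEnumerable<int> ports, Func<int, Task<bool>> checkPort, int maxParallel)
    {
        if (maxParallel < 1)
        {
            throw new ArgumentOutOfRangeException(nameof(maxParallel));
        }

        var results = new ConcurrentDictionary<int, bool>();
        using var gate = new SemaphoreSlim(maxParallel);

        var tasks = ports.Select(async port =>
        {
            await gate.WaitAsync(); // Wait for a free slot
            try
            {
                results[port] = await checkPort(port);
            }
            catch (Exception)
            {
                results[port] = false; // Treat any failure as "not working"
            }
            finally
            {
                gate.Release(); // Free the slot for the next check
            }
        }).ToList();

        await Task.WhenAll(tasks);
        return results;
    }
}
```

A call such as `await ThrottledChecker.CheckPortsAsync(Enumerable.Range(10000, 100), port => IsWorkingAsync("gw.dataimpulse.com", port), 10)` would check the same range ten ports at a time, where `IsWorkingAsync` is the assumed boolean variant of `CheckProxy`.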

Scraping data

The heart of our project is a web scraper. It sends an HTTP request through the proxy and collects HTML data from the DataImpulse home page.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;
using HtmlAgilityPack;

class WebScraper
{
    public static async Task Main(string[] args)
    {
        await Scrape();
    }

    private static async Task Scrape()
    {
        // Define the proxy details
        string proxyAddress = "http://gw.dataimpulse.com:823"; // Replace with your proxy address
        string proxyUsername = "your login"; // Replace with your proxy username (if needed)
        string proxyPassword = "your password"; // Replace with your proxy password (if needed)

        // Create a handler that routes requests through the proxy
        var httpClientHandler = new HttpClientHandler
        {
            Proxy = new WebProxy(proxyAddress)
            {
                Credentials = new NetworkCredential(proxyUsername, proxyPassword)
            },
            UseProxy = true
        };

        // Create an HttpClient using the handler with the proxy
        using (var client = new HttpClient(httpClientHandler))
        {
            // Define the target URL to scrape
            string url = "https://dataimpulse.com/";
            var baseUri = new Uri(url);

            try
            {
                // Send GET request
                var response = await client.GetAsync(url);
                if (response.IsSuccessStatusCode)
                {
                    var content = await response.Content.ReadAsStringAsync();

                    // Load the HTML content into an HtmlDocument
                    HtmlDocument doc = new();
                    doc.LoadHtml(content);

                    // Use XPath to find all <a> tags that are direct children of <li>, <p>, or <td>
                    var nodes = doc.DocumentNode.SelectNodes("//li/a[@href] | //p/a[@href] | //td/a[@href]");
                    if (nodes != null)
                    {
                        foreach (var node in nodes)
                        {
                            string hrefValue = node.GetAttributeValue("href", string.Empty);
                            string title = node.InnerText.Trim(); // The text content of the <a> tag, which is usually the title

                            // Resolve relative links against the page URL
                            var fullUri = new Uri(baseUri, hrefValue);
                            Console.WriteLine($"Title: {title}, Link: {fullUri.AbsoluteUri}");
                            // You can process each title and link as required
                        }
                    }
                    else
                    {
                        Console.WriteLine("No matching nodes found.");
                    }
                }
                else
                {
                    Console.WriteLine($"Failed to scrape. Status code: {response.StatusCode}");
                }
            }
            catch (Exception ex)
            {
                Console.WriteLine($"An error occurred: {ex.Message}");
            }
        }
    }
}
```

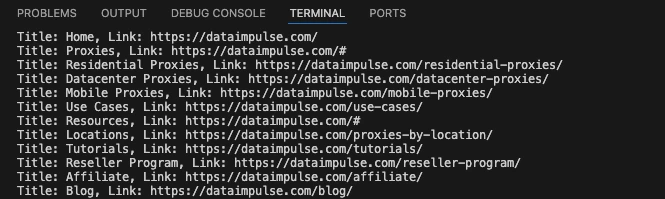

To build a web scraper, you need to provide your proxy address and credentials and specify the target URL. You also need to specify what content you want to collect. In our example, we retrieve all the titles and links. Our response looks like this:

However, you can modify that and get the data you need.
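One adjustment you may want early on is cleaning up the collected links, since a page usually mixes relative paths, duplicates, and non-web links such as `mailto:`. The sketch below shows one possible way to do that with the standard `Uri` class only. `LinkNormalizer` and `Normalize` are hypothetical names for this example, and the choice to drop `#fragment` parts and keep only http and https links is an assumption about what a typical crawl needs.

```csharp
using System;
using System.Collections.Generic;

static class LinkNormalizer
{
    // Resolves hrefs against baseUri and returns distinct http/https links,
    // in the order they were first seen.
    public static List<Uri> Normalize(Uri baseUri, IEnumerable<string> hrefs)
    {
        var seen = new HashSet<string>(StringComparer.Ordinal);
        var links = new List<Uri>();

        foreach (var href in hrefs)
        {
            if (string.IsNullOrWhiteSpace(href))
            {
                continue;
            }

            // TryCreate returns false for malformed values instead of throwing
            if (!Uri.TryCreate(baseUri, href.Trim(), out var absolute))
            {
                continue;
            }

            // Skip mailto:, tel:, javascript: and other non-web schemes
            if (absolute.Scheme != Uri.UriSchemeHttp && absolute.Scheme != Uri.UriSchemeHttps)
            {
                continue;
            }

            // Drop the #fragment so in-page anchors do not count as separate pages
            string key = absolute.GetLeftPart(UriPartial.Query);
            if (seen.Add(key))
            {
                links.Add(new Uri(key));
            }
        }

        return links;
    }
}
```

In the scraper, you could collect each `hrefValue` into a list inside the `foreach` loop and pass that list to `LinkNormalizer.Normalize(baseUri, hrefs)` once the loop finishes.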

Why DataImpulse?

You may be tempted to use public proxies for web scraping projects, as it seems an excellent way to save money. However, free proxies pose numerous risks, from failed or slow connections to personal data leaks, as you never know who manages those servers. If you’d like to read about all the reasons for avoiding free proxies, check out these articles: “Why shouldn’t you use fake IPs and free proxies” and “From low speed to security threats: why you should avoid free proxies”.

That’s why you should turn to trustworthy providers. DataImpulse offers legally obtained IP addresses that aren’t associated with malicious activities and provides budget-friendly plans. For example, you can buy residential proxies for $1 per 1 GB. We operate on a pay-as-you-go pricing model, so you don’t have to worry that your traffic will expire. Click on the “Try now” button in the top right corner of the screen or write to us at [email protected].