
Java is a platform-independent language with robust libraries, multithreading capabilities, and effective error-handling mechanisms, which makes it a good choice for web scraping. In this detailed tutorial, we explain which tools you need and which steps to take to streamline scraping in Java.

Web scraping with Java: key points

While simpler languages, like Python, are more popular for web scraping, Java also has its strong points, for example:

- Libraries like JSoup or HtmlUnit simplify HTML parsing

- Performance, speed, and efficient memory management make Java good for large-scale projects

- Being a strongly typed language, Java allows for compile-time error-catching

Java is also a sensible choice if your infrastructure already relies on it or you are planning a long-term project. At the same time, web scraping often comes with challenges like IP bans, geo-based limitations, and security concerns. Backing up your Java project with proxies helps you overcome those obstacles and get the necessary data.

Before we head into coding, let’s see what tools you are going to need:

- Visual Studio Code (any other IDE that supports Java, such as IntelliJ IDEA or Eclipse, works too)

- The Coding Pack for Java

- Extension Pack for Java

- Apache Maven

If you’re new to Visual Studio Code or Java, here is documentation with detailed explanations on how to install the Coding Pack and Extension Pack. You may also appreciate this tutorial on installing Apache Maven.

Note: The code in this tutorial connects to the proxy in the “hostname:port” format only, without a username and password, so you need to whitelist your IP address before executing it. You can do this in your DataImpulse account: go to the necessary proxy plan, select “Manage Whitelist IPs” from the top-right menu, and whitelist your address. If you need help or face problems, please see our detailed guide on managing your DataImpulse account.

Getting started with HttpClientApp

You must create an HTTP client to route traffic via a proxy server. This app sends requests to the designated URL and receives responses. You may use the code below:

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import java.net.*;

public class HttpClientApp {

public static final String proxyHost = "gw.dataimpulse.com";

public static final int proxyPort = 823;

public static void main(String[] args) {

System.out.println("Performing request through proxy...");

try {

String response = request("https://api.ipify.org");

System.out.println("Your IP address: " + response);

} catch (IOException e) {

System.err.println("Request error: " + e.getMessage());

}

}

public static String request(String url) throws IOException {

// Configure the proxy without authentication

Proxy proxy = new Proxy(Proxy.Type.HTTP, new InetSocketAddress(proxyHost, proxyPort));

// Create and configure the connection

HttpURLConnection connection = (HttpURLConnection) new URL(url).openConnection(proxy);

connection.setRequestMethod("GET");

connection.setRequestProperty("User-Agent", "JavaHttpURLConnection");

// Handle the response

int responseCode = connection.getResponseCode();

String responseMessage = connection.getResponseMessage();

if (responseCode == 200) {

// Successful response

try (BufferedReader reader = new BufferedReader(

new InputStreamReader(connection.getInputStream()))) {

StringBuilder response = new StringBuilder();

String line;

while ((line = reader.readLine()) != null) {

response.append(line);

}

return response.toString();

}

} else {

// Error response with detailed output

StringBuilder errorResponse = new StringBuilder();

if (connection.getErrorStream() != null) {

try (BufferedReader reader = new BufferedReader(

new InputStreamReader(connection.getErrorStream()))) {

String line;

while ((line = reader.readLine()) != null) {

errorResponse.append(line);

}

}

}

throw new IOException("HTTP error: " + responseCode + " " + responseMessage +

(errorResponse.length() > 0 ? "\nError details: " + errorResponse : ""));

}

}

}

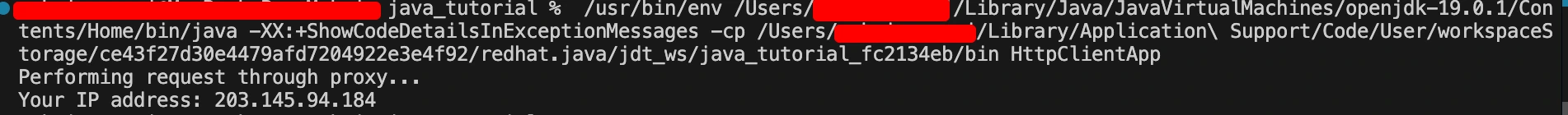

The output should look like this:
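Because the proxy is addressed in plain “hostname:port” form, it can be convenient to keep that value in a single string (for example, in a config file) and turn it into a `Proxy` object at startup. The sketch below is one possible way to do that. The `ProxyAddressParser` class and its method names are hypothetical helpers introduced for illustration; they are not part of the tutorial's code or of any library.

```java
import java.net.InetSocketAddress;
import java.net.Proxy;

// Hypothetical helper (an assumption for illustration, not part of HttpClientApp).
class ProxyAddressParser {

    // Splits "hostname:port" at the last colon and validates the port.
    static InetSocketAddress parse(String hostPort) {
        int colon = hostPort.lastIndexOf(':');
        if (colon <= 0 || colon == hostPort.length() - 1) {
            throw new IllegalArgumentException("Expected hostname:port, got: " + hostPort);
        }
        String host = hostPort.substring(0, colon);
        int port;
        try {
            port = Integer.parseInt(hostPort.substring(colon + 1));
        } catch (NumberFormatException e) {
            throw new IllegalArgumentException("Port is not a number: " + hostPort, e);
        }
        if (port < 1 || port > 65535) {
            throw new IllegalArgumentException("Port out of range: " + port);
        }
        // createUnresolved performs no DNS lookup when the address is constructed.
        return InetSocketAddress.createUnresolved(host, port);
    }

    // Wraps the parsed address in an HTTP proxy, as the tutorial code does.
    static Proxy toHttpProxy(String hostPort) {
        return new Proxy(Proxy.Type.HTTP, parse(hostPort));
    }

    public static void main(String[] args) {
        Proxy proxy = toHttpProxy("gw.dataimpulse.com:823");
        System.out.println("Proxy type: " + proxy.type());
        System.out.println("Proxy address: " + proxy.address());
    }
}
```

The returned `Proxy` can be passed to `openConnection(proxy)` in the same way as the one built inside `request`. Malformed input fails early with an `IllegalArgumentException` rather than at request time.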

Building a proxy rotator

Routing traffic via a proxy server isn’t enough. To enhance privacy, you should use a new IP for every request. That’s why you need a proxy rotator, which cycles through a list of proxy endpoints. Here is an example of code you can use:

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import java.net.*;

import java.util.Arrays;

import java.util.List;

public class ProxyRotator {

// List of proxies to rotate through

private static final List<Proxy> proxies = Arrays.asList(

new Proxy(Proxy.Type.HTTP, new InetSocketAddress("gw.dataimpulse.com", 10000)),

new Proxy(Proxy.Type.HTTP, new InetSocketAddress("gw.dataimpulse.com", 10001)),

new Proxy(Proxy.Type.HTTP, new InetSocketAddress("gw.dataimpulse.com", 10002))

);

public static void main(String[] args) {

// URL to request

String url = "https://api.ipify.org";

for (Proxy proxy : proxies) {

System.out.println("Using proxy: " + proxy.address());

try {

String response = sendRequestWithProxy(url, proxy);

System.out.println("Request succeeded with proxy " + proxy.address());

System.out.println("IP address from API website: " + response);

} catch (IOException e) {

System.err.println("Request failed with proxy " + proxy.address() + ": " + e.getMessage());

}

System.out.println("------------------------------------------------");

}

}

// Function to send request using a specific proxy

private static String sendRequestWithProxy(String url, Proxy proxy) throws IOException {

HttpURLConnection connection = (HttpURLConnection) new URL(url).openConnection(proxy);

connection.setRequestMethod("GET");

connection.setRequestProperty("User-Agent", "JavaHttpURLConnection");

int responseCode = connection.getResponseCode();

if (responseCode == 200) {

try (BufferedReader reader = new BufferedReader(new InputStreamReader(connection.getInputStream()))) {

StringBuilder response = new StringBuilder();

String line;

while ((line = reader.readLine()) != null) {

response.append(line);

}

return response.toString();

}

} else {

throw new IOException("HTTP error code: " + responseCode + " " + connection.getResponseMessage());

}

}

}

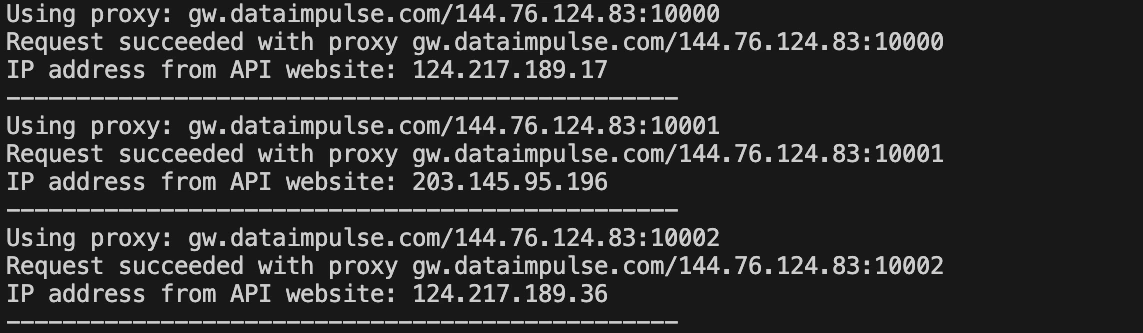

You should get a result like this:

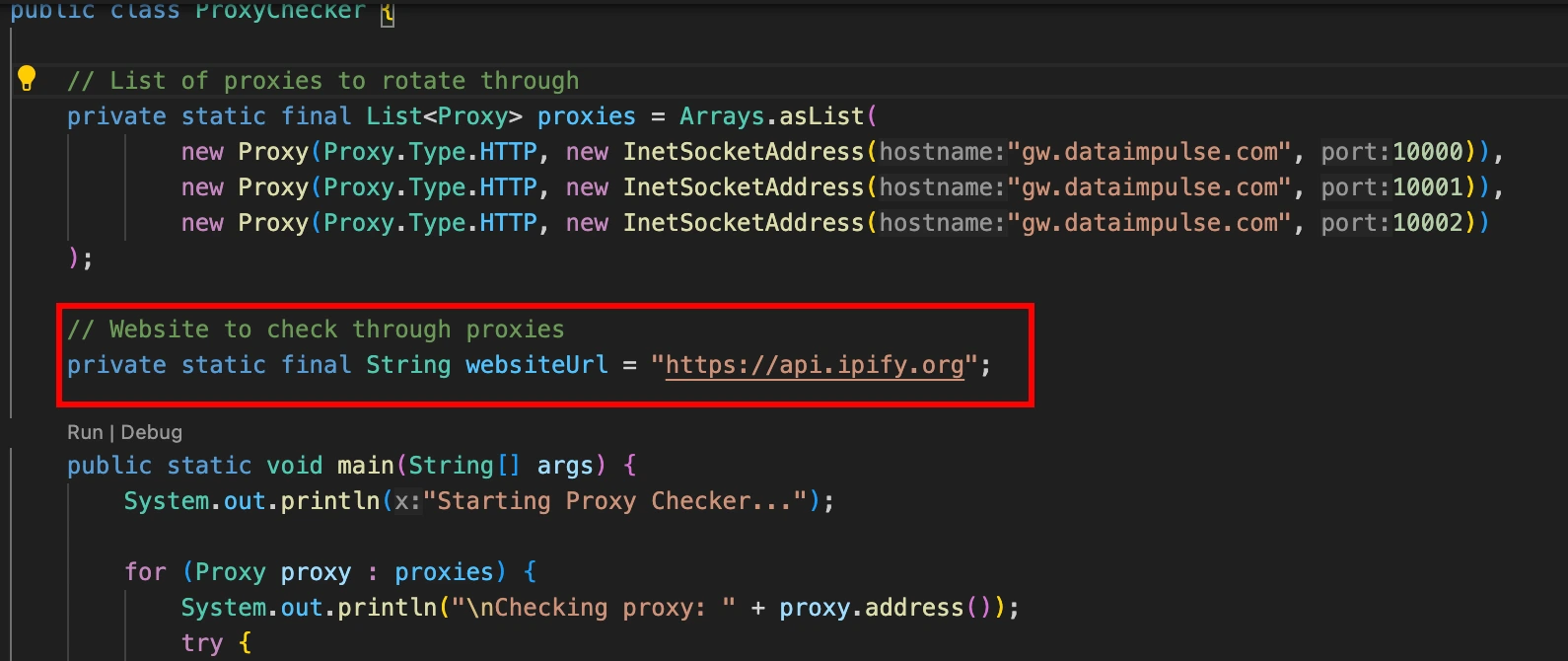
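The rotator above visits each proxy exactly once. A scraper that sends many requests usually needs to keep cycling through the list instead. The sketch below shows one common way to do this, a round-robin selector. The `RoundRobinProxies` class is a hypothetical helper introduced here, and the ports are simply the ones used in the example above.

```java
import java.net.InetSocketAddress;
import java.net.Proxy;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical helper (an assumption for illustration, not part of ProxyRotator).
class RoundRobinProxies {

    private final List<Proxy> proxies;
    private final AtomicInteger counter = new AtomicInteger();

    RoundRobinProxies(List<Proxy> proxies) {
        if (proxies.isEmpty()) {
            throw new IllegalArgumentException("Proxy list must not be empty");
        }
        this.proxies = new ArrayList<>(proxies); // defensive copy
    }

    // Returns the next proxy in order and wraps around at the end of the list.
    // floorMod keeps the index non-negative even if the counter overflows.
    Proxy next() {
        int index = Math.floorMod(counter.getAndIncrement(), proxies.size());
        return proxies.get(index);
    }

    // Builds an HTTP proxy for the gateway host used in this tutorial.
    static Proxy httpProxy(int port) {
        return new Proxy(Proxy.Type.HTTP,
                InetSocketAddress.createUnresolved("gw.dataimpulse.com", port));
    }

    public static void main(String[] args) {
        RoundRobinProxies rotator = new RoundRobinProxies(Arrays.asList(
                httpProxy(10000), httpProxy(10001), httpProxy(10002)));
        // No request is sent here; the loop only shows which proxy would be picked.
        for (int i = 0; i < 5; i++) {
            InetSocketAddress address = (InetSocketAddress) rotator.next().address();
            System.out.println("Request " + i + " would use port " + address.getPort());
        }
    }
}
```

Each call to `next()` could supply the proxy for one `sendRequestWithProxy(url, proxy)` call. The `AtomicInteger` keeps the selection consistent if several threads share the same rotator.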

Compiling a proxy checker

It’s time to ensure our proxies work with a defined website. For this, you have to create a proxy checker app and provide a URL to check. Here is code you can try:

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import java.net.*;

import java.util.Arrays;

import java.util.List;

public class ProxyChecker {

// List of proxies to rotate through

private static final List<Proxy> proxies = Arrays.asList(

new Proxy(Proxy.Type.HTTP, new InetSocketAddress("gw.dataimpulse.com", 10000)),

new Proxy(Proxy.Type.HTTP, new InetSocketAddress("gw.dataimpulse.com", 10001)),

new Proxy(Proxy.Type.HTTP, new InetSocketAddress("gw.dataimpulse.com", 10002))

);

// Website to check through proxies

private static final String websiteUrl = "https://api.ipify.org";

public static void main(String[] args) {

System.out.println("Starting Proxy Checker...");

for (Proxy proxy : proxies) {

System.out.println("\nChecking proxy: " + proxy.address());

try {

boolean isWorking = checkProxy(proxy, websiteUrl);

if (isWorking) {

System.out.println("✅ Proxy " + proxy.address() + " works with " + websiteUrl);

} else {

System.out.println("❌ Proxy " + proxy.address() + " does not work with " + websiteUrl);

}

} catch (IOException e) {

System.out.println("❌ Proxy " + proxy.address() + " failed: " + e.getMessage());

}

System.out.println("------------------------------------------------");

}

}

// Function to check if proxy works with the given website

private static boolean checkProxy(Proxy proxy, String url) throws IOException {

HttpURLConnection connection = (HttpURLConnection) new URL(url).openConnection(proxy);

connection.setRequestMethod("GET");

connection.setRequestProperty("User-Agent", "JavaHttpURLConnection");

connection.setConnectTimeout(5000); // 5 seconds timeout

connection.setReadTimeout(5000); // 5 seconds timeout

int responseCode = connection.getResponseCode();

String responseMessage = connection.getResponseMessage();

System.out.println("Response: " + responseCode + " " + responseMessage);

if (responseCode == 200) {

try (BufferedReader reader = new BufferedReader(

new InputStreamReader(connection.getInputStream()))) {

StringBuilder response = new StringBuilder();

String line;

while ((line = reader.readLine()) != null) {

response.append(line);

}

System.out.println("IP Returned: " + response);

}

return true;

} else {

// getErrorStream() returns null when the server sends no error body

if (connection.getErrorStream() != null) {

try (BufferedReader reader = new BufferedReader(

new InputStreamReader(connection.getErrorStream()))) {

StringBuilder errorResponse = new StringBuilder();

String line;

while ((line = reader.readLine()) != null) {

errorResponse.append(line);

}

System.out.println("Error details: " + errorResponse);

}

}

return false;

}

}

}

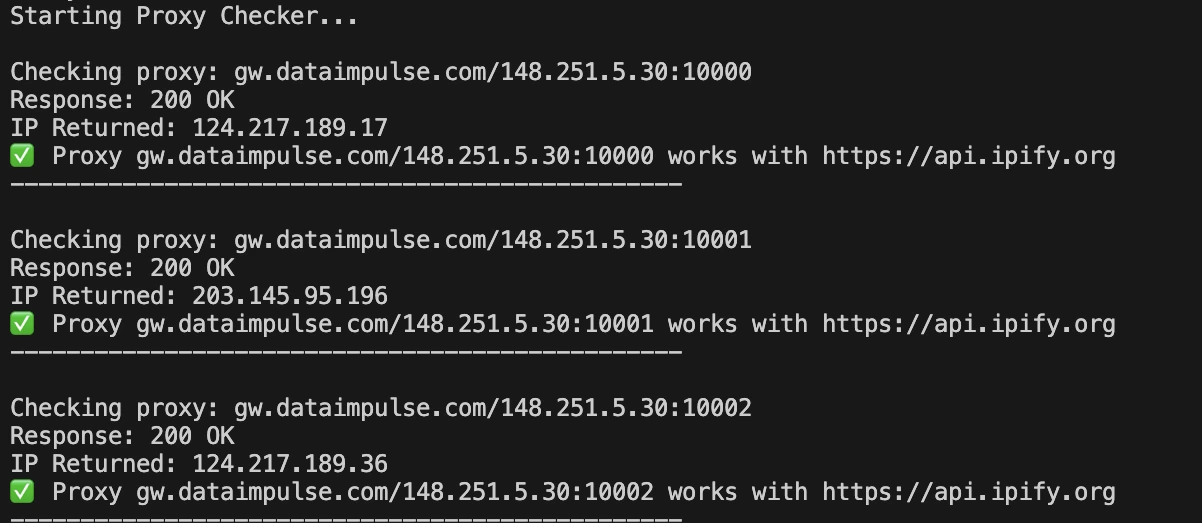

The results you see should look like this:
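The checker only prints its verdicts. In a real scraper you would probably want to keep the proxies that passed and drop the rest. The sketch below shows that filtering step on its own. `WorkingProxyFilter` and its `probe` parameter are hypothetical names introduced here, and the probe in `main` is a stub that stands in for a real network check.

```java
import java.net.InetSocketAddress;
import java.net.Proxy;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical helper (an assumption for illustration, not part of ProxyChecker).
class WorkingProxyFilter {

    // Returns the proxies for which the probe reports success, in original order.
    static List<Proxy> keepWorking(List<Proxy> candidates, Predicate<Proxy> probe) {
        List<Proxy> working = new ArrayList<>();
        for (Proxy proxy : candidates) {
            try {
                if (probe.test(proxy)) {
                    working.add(proxy);
                }
            } catch (RuntimeException e) {
                // A probe that throws is treated the same as a failed check.
            }
        }
        return working;
    }

    static Proxy httpProxy(int port) {
        return new Proxy(Proxy.Type.HTTP,
                InetSocketAddress.createUnresolved("gw.dataimpulse.com", port));
    }

    public static void main(String[] args) {
        List<Proxy> candidates = Arrays.asList(
                httpProxy(10000), httpProxy(10001), httpProxy(10002));
        // Stub probe: pretends that the proxy on port 10001 is down.
        Predicate<Proxy> stubProbe =
                proxy -> ((InetSocketAddress) proxy.address()).getPort() != 10001;
        List<Proxy> working = keepWorking(candidates, stubProbe);
        System.out.println("Working proxies: " + working.size() + " of " + candidates.size());
    }
}
```

To use it with the checker above, the probe could wrap `checkProxy(proxy, websiteUrl)` in a lambda that catches `IOException` and returns `false`, since a `Predicate` cannot throw checked exceptions.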

Getting to web scraping itself

Finally, it’s time to build a web scraping app. It will extract all the needed data, so you must provide the target URL to scrape and specify what data you want the program to retrieve. In our case, we will scrape links from our homepage. You can use this code as a reference:

package com.dataimpulse;

import org.jsoup.Jsoup;

import org.jsoup.nodes.Document;

import org.jsoup.nodes.Element;

import org.jsoup.select.Elements;

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import java.net.*;

import java.nio.charset.StandardCharsets;

public class ProxyWebScraper {

// Proxy configuration

private static final String PROXY_HOST = "gw.dataimpulse.com";

private static final int PROXY_PORT = 823;

// Target website to scrape

private static final String TARGET_URL = "https://dataimpulse.com/";

public static void main(String[] args) {

System.out.println("Starting Proxy Web Scraper...");

try {

// Perform the web scraping through the proxy

String htmlContent = getHtmlContent(TARGET_URL);

if (htmlContent != null) {

// Parse and extract links from the HTML content

parseHtml(htmlContent);

} else {

System.out.println("Failed to retrieve HTML content.");

}

} catch (IOException e) {

System.err.println("Error during scraping: " + e.getMessage());

}

}

// Method to get HTML content through the proxy

private static String getHtmlContent(String url) throws IOException {

// Configure proxy

Proxy proxy = new Proxy(Proxy.Type.HTTP, new InetSocketAddress(PROXY_HOST, PROXY_PORT));

// Create and configure the connection

HttpURLConnection connection = (HttpURLConnection) new URL(url).openConnection(proxy);

connection.setRequestMethod("GET");

connection.setRequestProperty("User-Agent", "JavaWebScraper/1.0");

connection.setConnectTimeout(10000);

connection.setReadTimeout(10000);

int responseCode = connection.getResponseCode();

if (responseCode == 200) {

// Read and return the HTML content

try (BufferedReader reader = new BufferedReader(

new InputStreamReader(connection.getInputStream(), StandardCharsets.UTF_8))) {

StringBuilder content = new StringBuilder();

String line;

while ((line = reader.readLine()) != null) {

content.append(line);

}

return content.toString();

}

} else {

System.err.println("Failed to fetch HTML. HTTP error code: " + responseCode);

return null;

}

}

// Method to parse HTML and extract links using jsoup

private static void parseHtml(String htmlContent) {

// Pass the page URL as the base URI so that "abs:href" can resolve relative links

Document doc = Jsoup.parse(htmlContent, TARGET_URL);

Elements links = doc.select("a[href]");

if (links.isEmpty()) {

System.out.println("No links found on the page.");

} else {

System.out.println("Links found:");

for (Element link : links) {

String href = link.attr("abs:href");

String title = link.text();

System.out.println("Title: " + title + ", Link: " + href);

}

}

}

}
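A homepage typically repeats the same link several times and also points to external sites, so the list printed by `parseHtml` may need cleaning before further use. The sketch below shows one possible post-processing step that relies only on the standard library: it keeps unique links whose host matches a given one. `LinkFilter` is a hypothetical helper, and the URLs in `main` are made-up sample values rather than real scraping output.

```java
import java.net.URI;
import java.net.URISyntaxException;
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Hypothetical helper (an assumption for illustration, not part of ProxyWebScraper).
class LinkFilter {

    // Keeps each link once, in first-seen order, if its host equals the given host.
    static Set<String> internalLinks(List<String> hrefs, String host) {
        Set<String> result = new LinkedHashSet<>();
        for (String href : hrefs) {
            try {
                URI uri = new URI(href);
                // getHost() is null for empty, relative, or mailto: values,
                // so those never match and are skipped.
                if (host.equalsIgnoreCase(uri.getHost())) {
                    result.add(href);
                }
            } catch (URISyntaxException e) {
                // Skip values that are not valid URIs.
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Made-up sample input for illustration only.
        List<String> hrefs = Arrays.asList(
                "https://dataimpulse.com/a",
                "https://example.com/",
                "https://dataimpulse.com/a",
                "https://dataimpulse.com/b",
                "");
        for (String link : internalLinks(hrefs, "dataimpulse.com")) {
            System.out.println(link);
        }
    }
}
```

The host comparison is exact apart from letter case, so a subdomain such as `www.` would count as a different host. Whether that is desirable depends on the site you scrape.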

To make the scraper compile and run, you also need to add the JSoup library to the project using Maven. Here is a step-by-step explanation of how to do it:

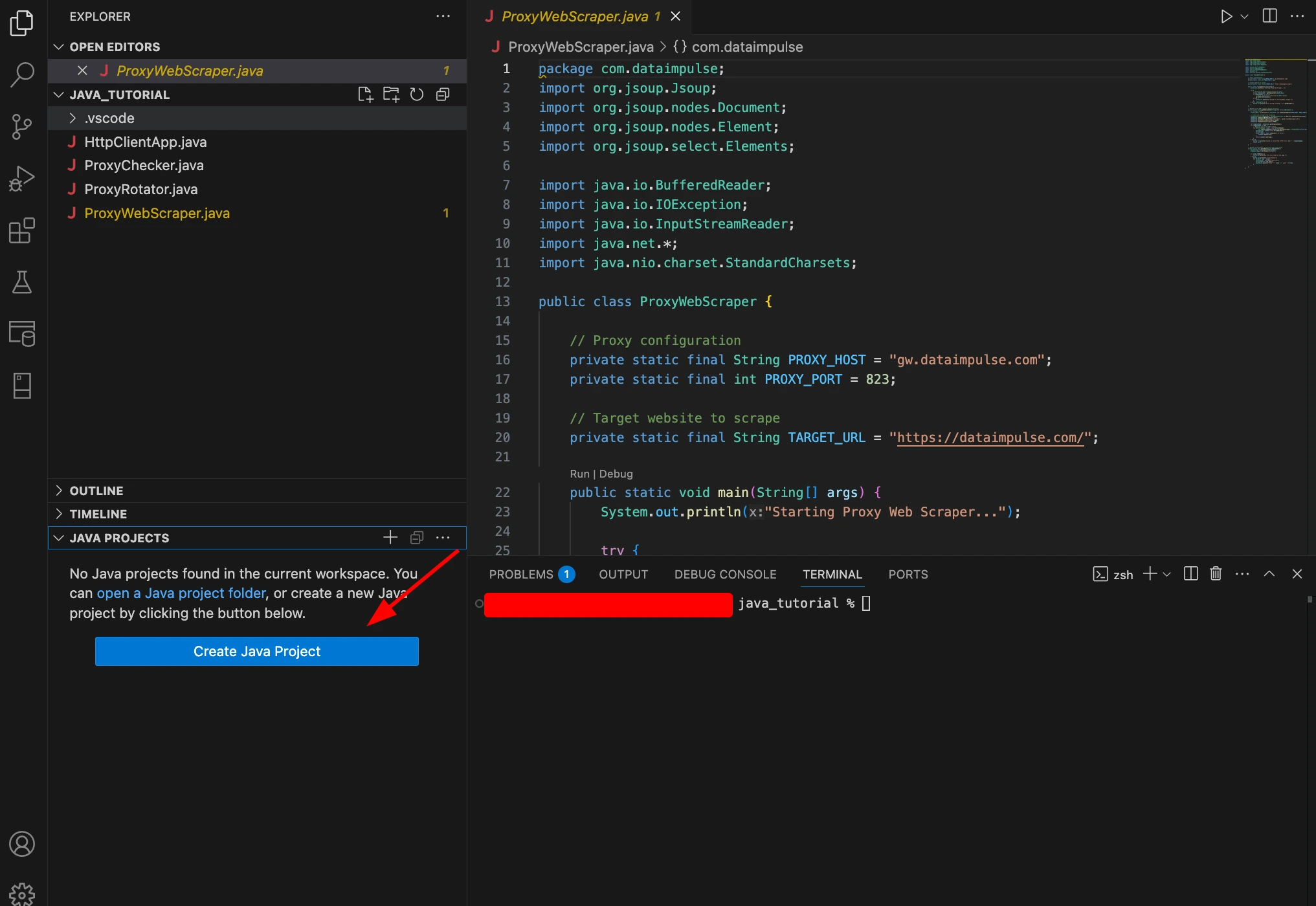

- Create a Java project by using the corresponding button.

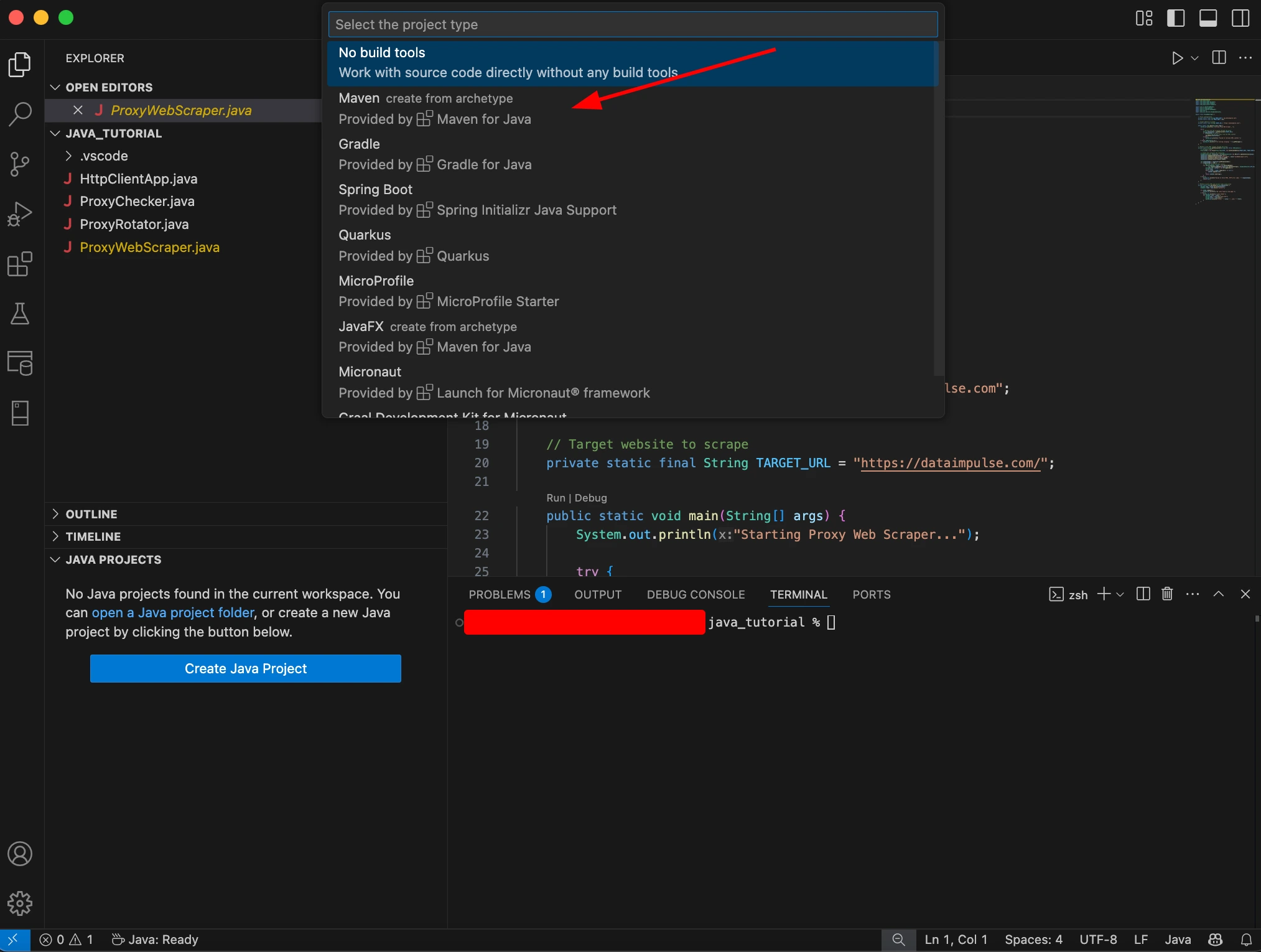

- Select Maven as the project type.

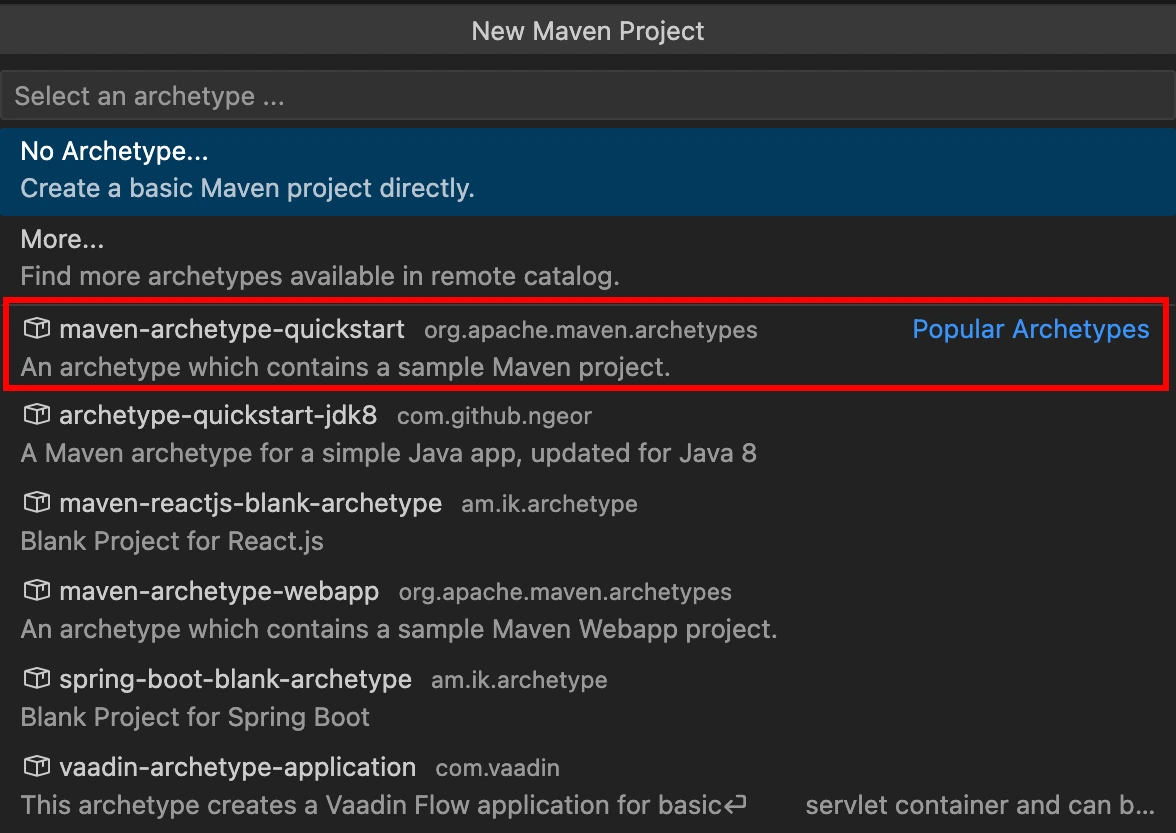

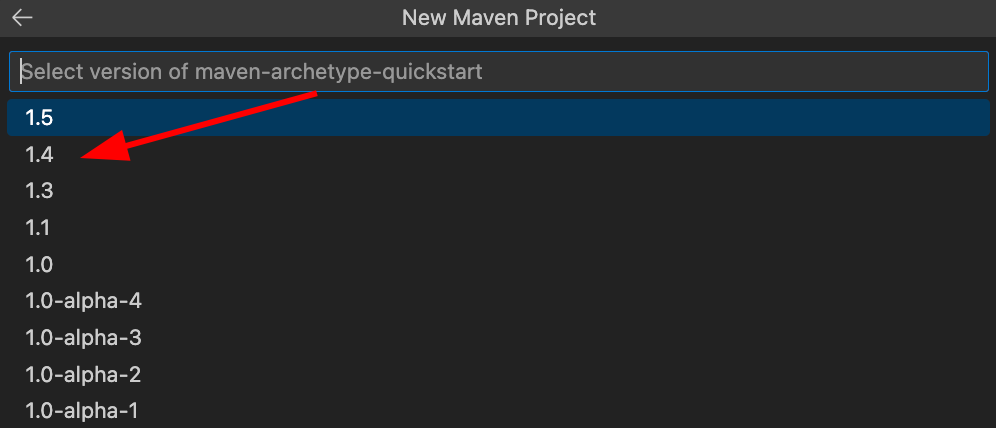

- From the list that appeared, choose “maven-archetype-quickstart”:

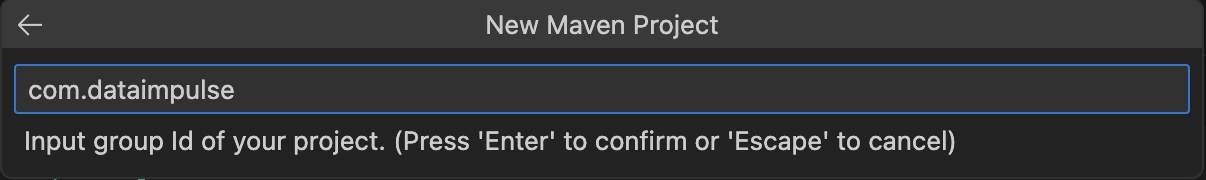

- Enter “com.dataimpulse” as group id.

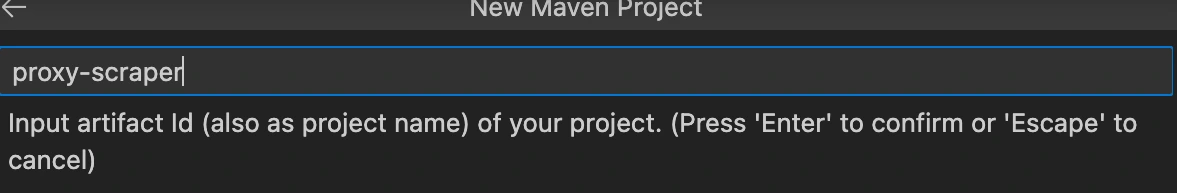

- Input “proxy-scraper” in the “artifact Id” field.

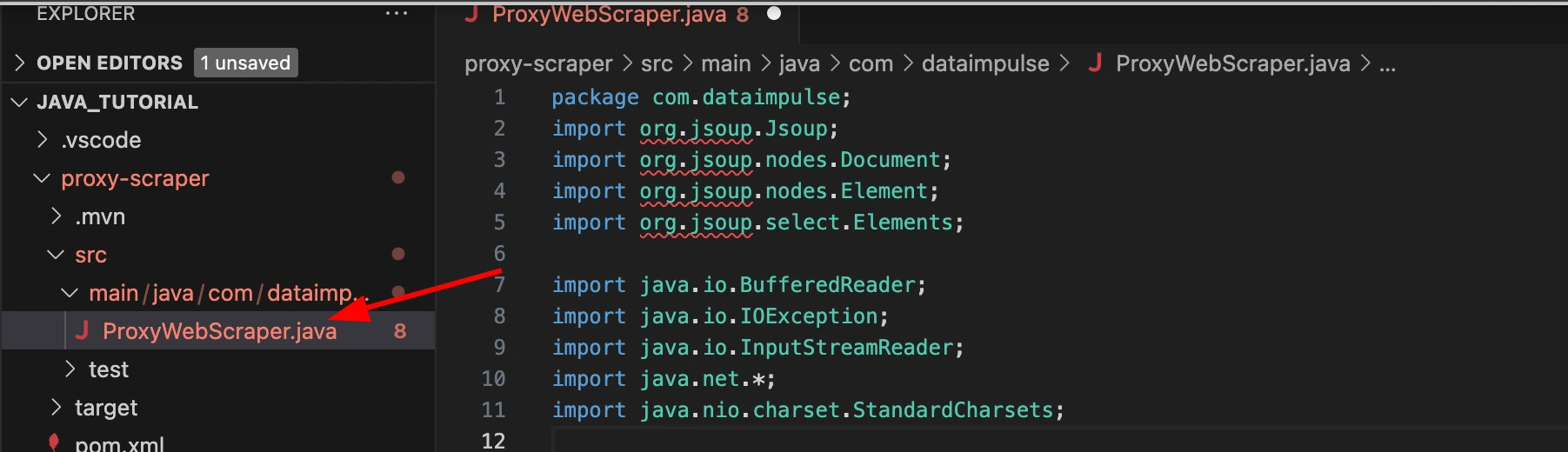

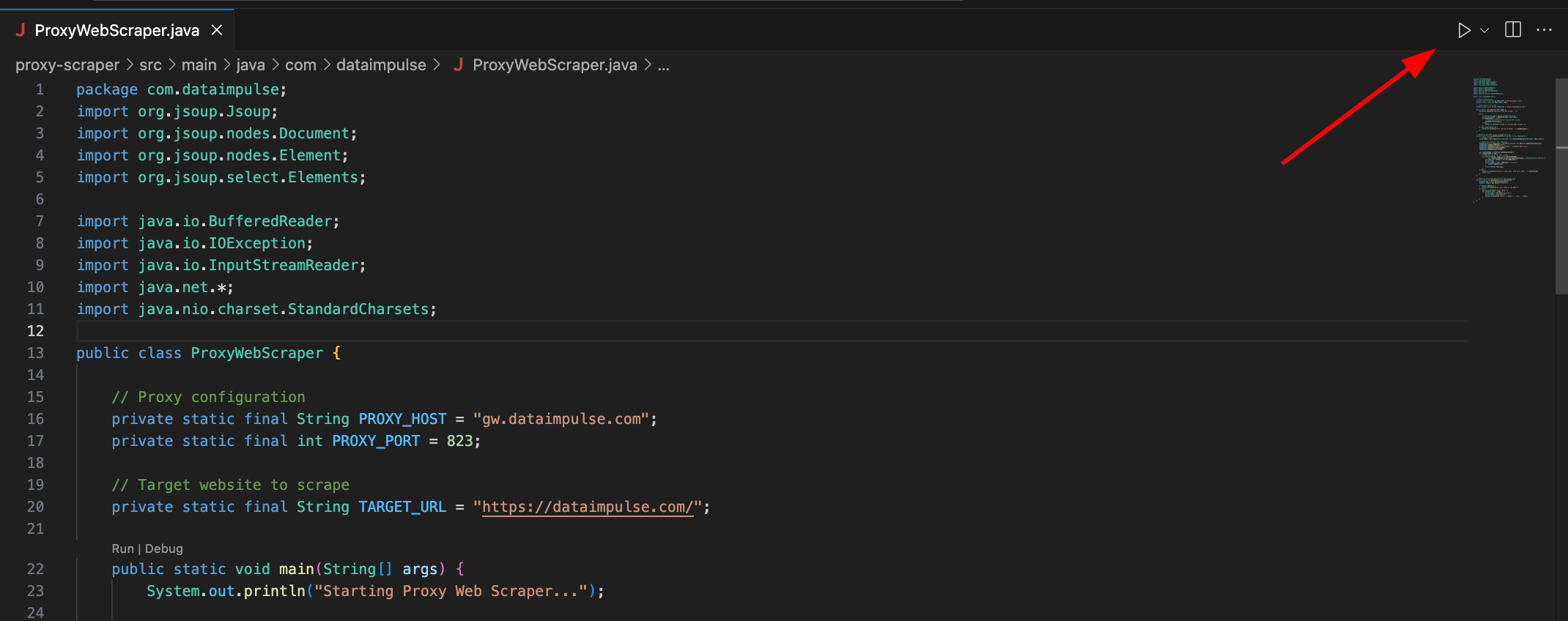

- Choose a folder to store the library and the ProxyWebScraper app itself. After that, the proxy-scraper folder should appear. Now, move the code to proxy-scraper/src/main/java/com/dataimpulse:

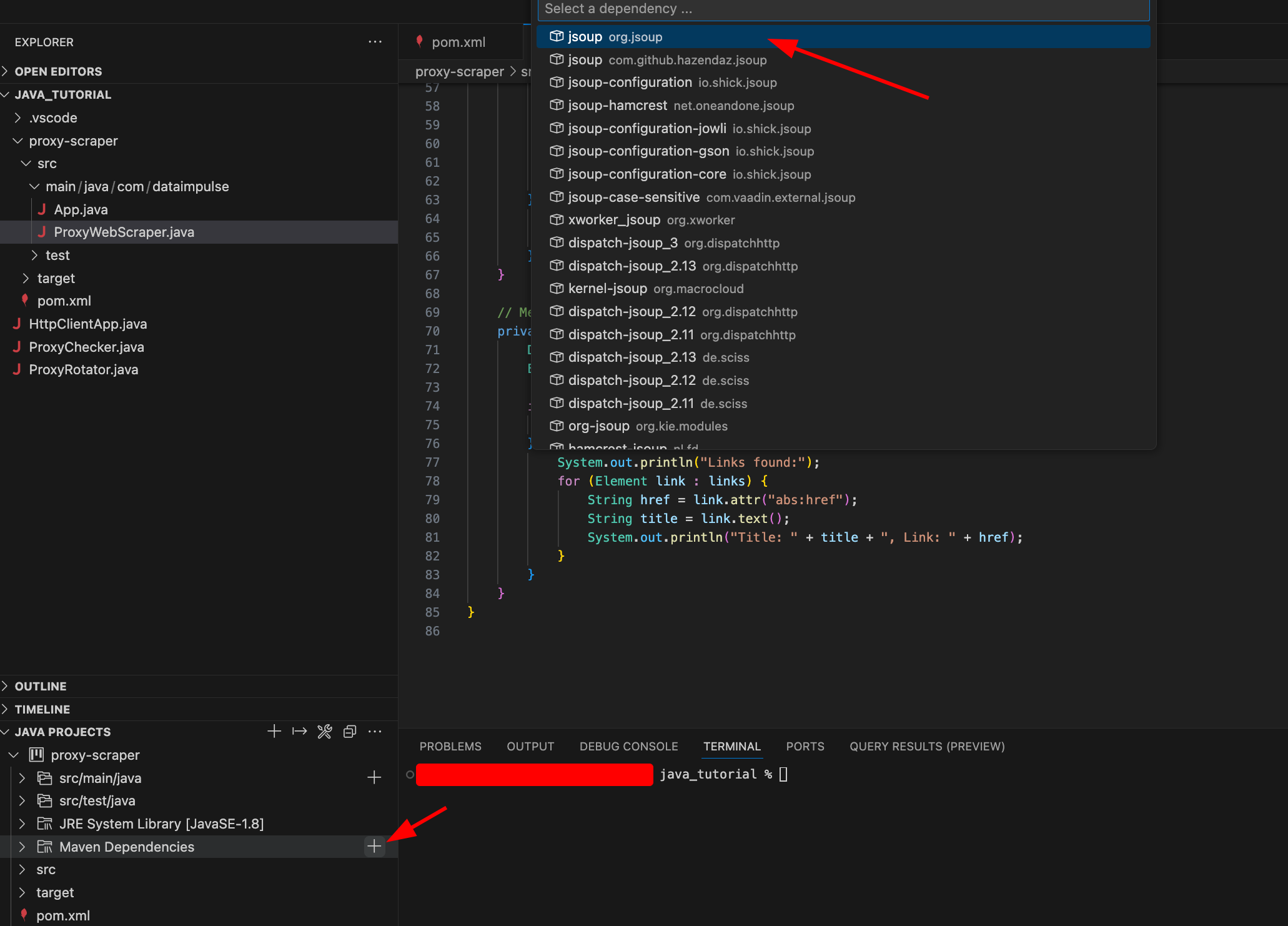

- Add the JSoup library (group ID “org.jsoup”, artifact ID “jsoup”) via Apache Maven, as shown in the screenshot below. If you do it right, the library will appear as a dependency in the “pom.xml” file.

- In the “pom.xml” file, change the “maven.compiler.source” and “maven.compiler.target” parameters to 1.8. Save the file using Ctrl+S on Windows or Cmd+S on Mac.

- Now, your code should work just fine. To execute it, use the button in the top-right corner of the screen.

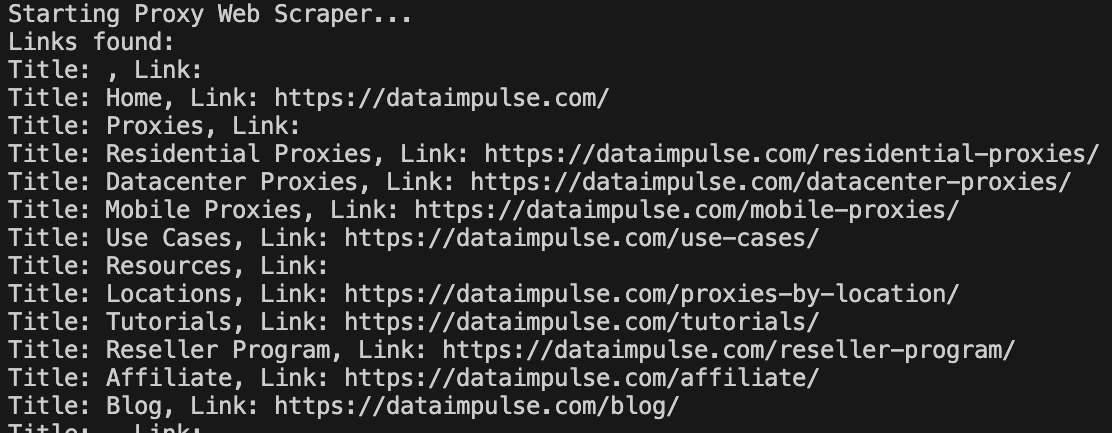

Here is the final result of our scraping project:

As you can see, we now have all the data we wanted. By modifying the code, you can extract any other HTML data you need. Choose your proxies carefully, too, as such results are impossible without legally sourced IPs. At DataImpulse, you can get 15 million ethically obtained addresses that are not associated with law-breaking activities like spreading malware. You can also adjust targeting to align with territorial access policies. If you have any difficulties, our human support team is at your service 24/7. At the same time, our proxies won’t eat up your budget: we operate on a pay-as-you-go pricing model and offer an affordable price of $1 per 1GB. Start with us at [email protected] or use the “Try now” button in the top-right corner of the screen.