Some call it web harvesting or content scraping, but whatever the name, web scraping is a popular method for collecting data from websites. Small jobs can be done by hand, but once you automate scraping, its success depends heavily on the programming language you choose.

When discussing the best language, we should consider multiple factors like development speed, ease of debugging, community support, overall performance, and more.

This article outlines the features of the main programming languages and helps you decide which one is the right fit for your web scraping tasks.

Top Five Programming Languages for Web Scraping

As a multipurpose tool, web scraping is used in many industries to collect and analyze large datasets. Yet despite its widespread application, only a few programming languages are considered top choices for scraping projects. The ones highlighted in this article are Python, Node.js, Ruby, PHP, and C++, which are among the most popular options for web scraping among IT professionals. Let’s find out why.

-

Python

Key Features of Python:

- Free and Open source;

- High-level language;

- Easy to write and debug;

- Large standard library;

- Supports Multiple Programming Paradigms.

Python stands out for its simple yet powerful object-oriented approach. Developers value its reliable standard library, its wide range of modules, and its interactive mode, which lets you try code line by line. It supports several programming paradigms, uses dynamic typing, and simplifies database and user interface development. Python programs also tend to need fewer lines of code than equivalent programs in many other languages. Python is both free and open source, so anyone can use it. Its flexibility and scalability are key advantages, and perhaps its greatest benefit is how beginner-friendly it is.
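To illustrate how little code a basic Python scraper needs, here is a minimal sketch that uses only the standard library (`urllib.request` and `html.parser`), so nothing has to be installed. It downloads a page and collects its title and link targets. The class and function names, the `User-Agent` value, and the example URL are placeholders chosen for illustration; a production scraper would also need error handling and retries, and many Python projects use third-party libraries such as Requests and Beautiful Soup instead.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class LinkParser(HTMLParser):
    """Collects the <title> text and every href found in <a> tags."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            # attrs arrives as a list of (name, value) pairs
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def fetch(url: str) -> str:
    """Download a page and decode it; the User-Agent is a placeholder."""
    req = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(req, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def parse(html: str) -> tuple[str, list[str]]:
    """Return the page title and the raw href values, in document order."""
    parser = LinkParser()
    parser.feed(html)
    parser.close()
    return parser.title.strip(), parser.links
```

For example, `parse(fetch("https://example.com"))` would return the page title and the raw `href` values; relative links such as `/about` can be turned into absolute URLs with `urllib.parse.urljoin`.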

Also, check out our step-by-step tutorial Scraping Amazon Product Data with Python.

-

Node.js

Key Features of Node.js:

- JavaScript-Based;

- Real-Time Data Handling;

- Wide range of modules and libraries (e.g., Puppeteer and Cheerio);

- Manages multiple requests and connections simultaneously;

- Supports APIs as well as socket-based communication.

Node.js is a JavaScript runtime environment that lets developers execute JavaScript on the server side. It is known for its non-blocking, asynchronous processing: while one request is waiting for a response, Node.js can keep working on others. This makes it a common choice for crawling and indexing web pages, since many crawling and scraping requests can run concurrently. Its ability to handle many connections makes it highly effective for small- to mid-size scraping tasks. However, Node.js is best suited for simpler web scraping tasks and may not be the ideal choice for large-scale or more complex projects.
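The non-blocking idea behind Node.js can be shown in any language with an event loop. To keep all examples in one language, here is a rough Python analogue using `asyncio`: it downloads many pages concurrently while capping how many requests are in flight. Because Python's standard library has no asynchronous HTTP client, this sketch runs the blocking `urllib` call in worker threads via `asyncio.to_thread` (Python 3.9+), whereas Node.js, or Python libraries such as aiohttp, perform truly non-blocking I/O. The function names and the default `limit` of 5 are arbitrary choices for illustration.

```python
import asyncio
import urllib.request


def _blocking_get(url: str) -> bytes:
    """Ordinary blocking download with urllib."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return resp.read()


async def _threaded_get(url: str) -> bytes:
    # Run the blocking call in a worker thread so the event loop stays free.
    return await asyncio.to_thread(_blocking_get, url)


async def fetch_all(urls, fetch=None, limit=5):
    """Fetch all URLs concurrently, with at most `limit` requests in flight.

    Results are returned in the same order as `urls`.
    """
    fetch = fetch or _threaded_get
    semaphore = asyncio.Semaphore(limit)

    async def worker(url):
        async with semaphore:  # wait here if `limit` requests are already running
            return await fetch(url)

    return await asyncio.gather(*(worker(u) for u in urls))
```

For instance, `asyncio.run(fetch_all(["https://example.com", "https://example.org"]))` returns the raw page bodies in the same order as the input URLs. The `fetch` parameter lets you plug in a different downloader, or a fake one for testing.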

-

Ruby

Key Features of Ruby:

- Simple and intuitive syntax;

- Nokogiri and Mechanize gems;

- Dynamic typing and flexibility;

- Community support;

- Quick prototyping.

Ruby is a dynamic, open-source, reflective programming language influenced by Perl and Smalltalk. It is fully object-oriented: everything in Ruby, including numbers and strings, is an object with its own properties and behaviors. Its syntax is user-friendly and easy to learn. Ruby has a rich collection of open-source libraries and gems that aid in web scraping, including Nokogiri for parsing HTML, HTTParty for managing HTTP requests, and Watir for browser automation. Ruby’s active developer community keeps improving these tools and offers valuable assistance.

-

PHP

Key Features of PHP:

- Platform independent;

- Rich Libraries and Frameworks;

- Content management systems;

- Database integration;

- Real-time access monitoring.

PHP is a server-side scripting language mainly used to generate dynamic website content. Some developers say it isn’t the best choice for web scraping or building crawlers because it lacks built-in multi-threading, which makes running many requests in parallel harder. However, PHP’s cURL extension is useful for scraping media such as images and videos from websites. cURL supports file transfer over protocols like HTTP and FTP, so you can use it to build web spiders that download content automatically. While PHP may not be ideal for large-scale scraping projects, it can still handle simpler tasks and integrates well with databases.

-

C++

Key Features of C++:

- Abstract data types;

- Rich standard library and fine-grained memory management;

- Exception handling;

- Compiled language;

- Parallel processing.

C++ is a general-purpose, case-sensitive programming language that supports object-oriented, procedural, and generic programming. While it’s fast and powerful, development in C++ usually takes more time, so it might not be the most cost-effective choice for web scraping unless you have specialized, performance-critical data extraction tasks. Its benefits include high execution speed, the option to create custom HTML parsing tools, and fine-grained control over memory and resources. With C++ multithreading, you can run multiple scrapers in parallel and complete your web scraping tasks faster.

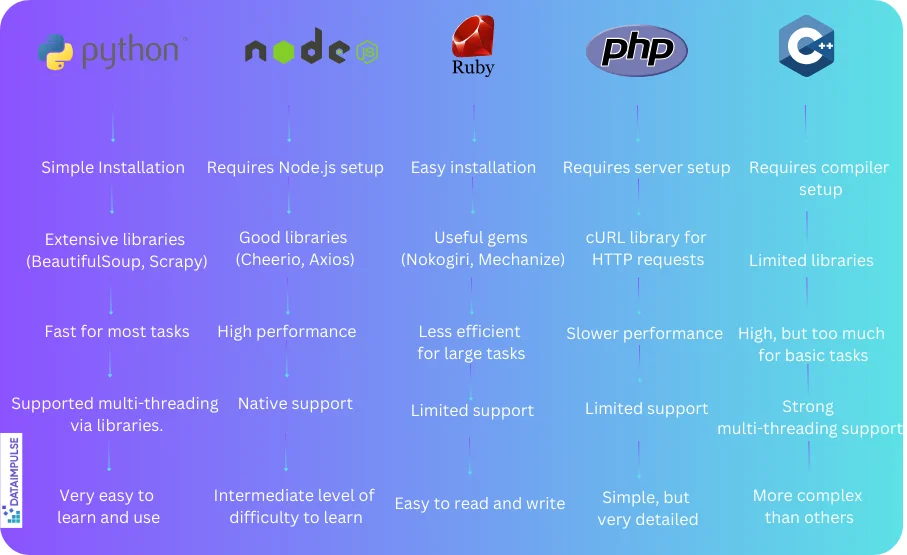

Which one to choose

Selecting the right programming language for web scraping comes down to the kind of project you’re working on. There’s no single answer that fits all situations – what matters most is that the language you choose can make HTTP requests and parse HTML. Almost every language, from PHP to Node.js to Python, can do this, so pick the one that best fits your needs and preferences.

Just take a look at this comparison table to better assess the features and capabilities of Python, Node.js, Ruby, PHP, and C++.

Benefits of using Proxies for web scraping

Servers often have security measures that limit the number of requests sent from one IP address. Pairing your web scraper with proxies lets you spread requests across multiple IP addresses, maintain anonymity, and keep connections stable. Here are a few reasons why you should integrate proxies when using programming languages for web scraping:

- Geolocation targeting – Use IPs from different countries or regions;

- Prevent connection failures – Proxies rotate your IP address, so per-IP request limits are less likely to block you;

- Access global content – Collect data from any location;

- Protection of identity – Proxies hide your real IP address.
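The IP rotation mentioned above can be sketched in Python with the standard library's `urllib.request.ProxyHandler`. This minimal example cycles through a list of proxies in round-robin order, so consecutive requests leave from different IP addresses. The proxy hosts, port, and `user:pass` credentials are hypothetical placeholders, not real DataImpulse endpoints, and the helper names are illustrative; use the connection details from your own provider.

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints: replace the hosts, port and credentials
# with the values supplied by your proxy provider.
PROXIES = [
    "http://user:pass@proxy1.example.com:8080",
    "http://user:pass@proxy2.example.com:8080",
    "http://user:pass@proxy3.example.com:8080",
]


def rotating_openers(proxies):
    """Endlessly yield (proxy, opener) pairs in round-robin order."""
    for proxy in itertools.cycle(proxies):
        # Route both plain HTTP and HTTPS traffic through the same proxy.
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        yield proxy, urllib.request.build_opener(handler)


def fetch_via_next_proxy(url, openers):
    """Download `url` through the next proxy in the rotation."""
    proxy, opener = next(openers)
    with opener.open(url, timeout=10) as resp:
        return proxy, resp.read()
```

Typical use is to create `openers = rotating_openers(PROXIES)` once and call `fetch_via_next_proxy(url, openers)` for each URL. Round-robin is the simplest rotation strategy; some providers instead expose a single gateway that rotates the exit IP on their side, in which case one `ProxyHandler` pointing at that gateway is enough.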

If you’d like to know more about DataImpulse proxies, hit the “Try now” button in the top-right corner or contact us at [email protected].