
eBay attracts both e-commerce professionals and occasional buyers, but why does it remain so popular? Part of the answer lies in eBay’s foundation: its mindset, structure, and unique approach. Its main aim is to connect buyers and sellers globally. Yet beyond philosophy, its popularity also comes from specific features. The platform offers an enormous range of products, from the latest electronics to rare collectibles, and combines fixed-price listings with auctions. Amazon and Etsy may match some features, yet eBay’s blend of variety, auctions, and global scale keeps it popular.

Web scraping is a valuable skill for market research, price comparison, competitor product analysis, and sentiment analysis of customer reviews. Python is a natural fit for the job: it is easy to learn and widely used for web scraping, data analysis, and automation. In this tutorial, we’ll need not just the language itself but also Python libraries that help us send requests and parse HTML.

To see which libraries we need, look at how eBay serves its pages. If you inspect the network requests made by a search results page, you’ll notice that most of the product data is embedded directly in the HTML document returned by the server rather than loaded afterwards through AJAX calls. This means that a simple HTTP client to replicate the request, combined with an HTML parser, is enough to extract the information we need. That’s why we use libraries such as Requests and BeautifulSoup.

Key Instruments for Getting Started

The full equipment for this tutorial includes:

- Python 3 (the latest version)

Download and install Python, then install the Python extension in VS Code. Open a terminal and check that Python is installed with:

python --version

or

python3 --version

- Visual Studio Code (or another code editor, the latest version)

Download and install the editor, then open your project folder in it.

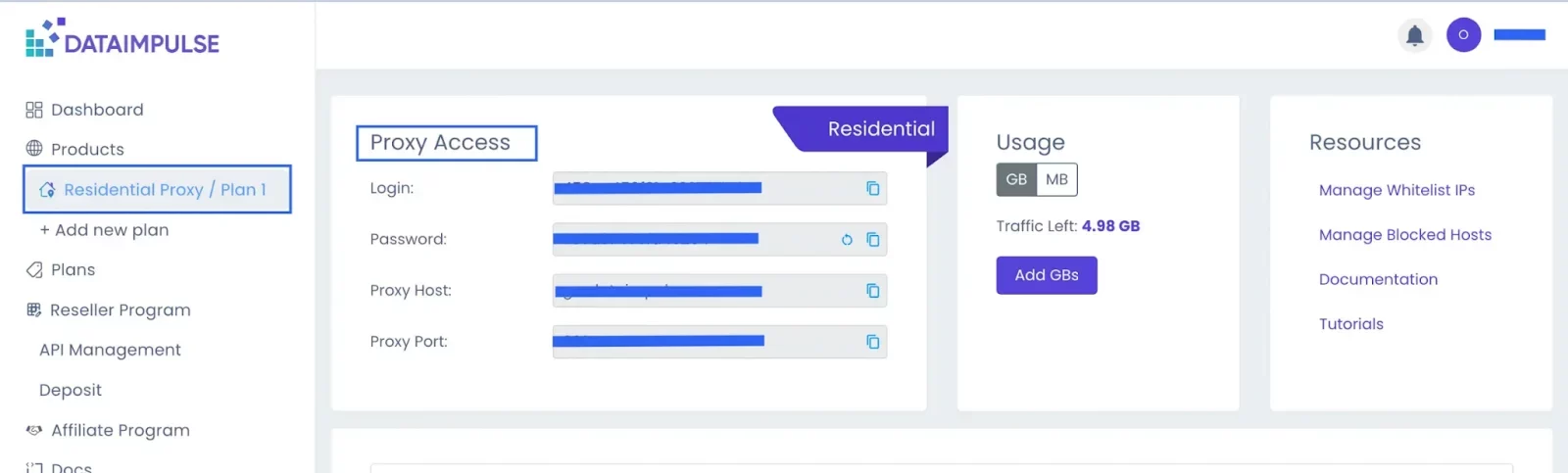

- Proxy credentials from the DataImpulse dashboard

Sign in to DataImpulse and copy your proxy login details.

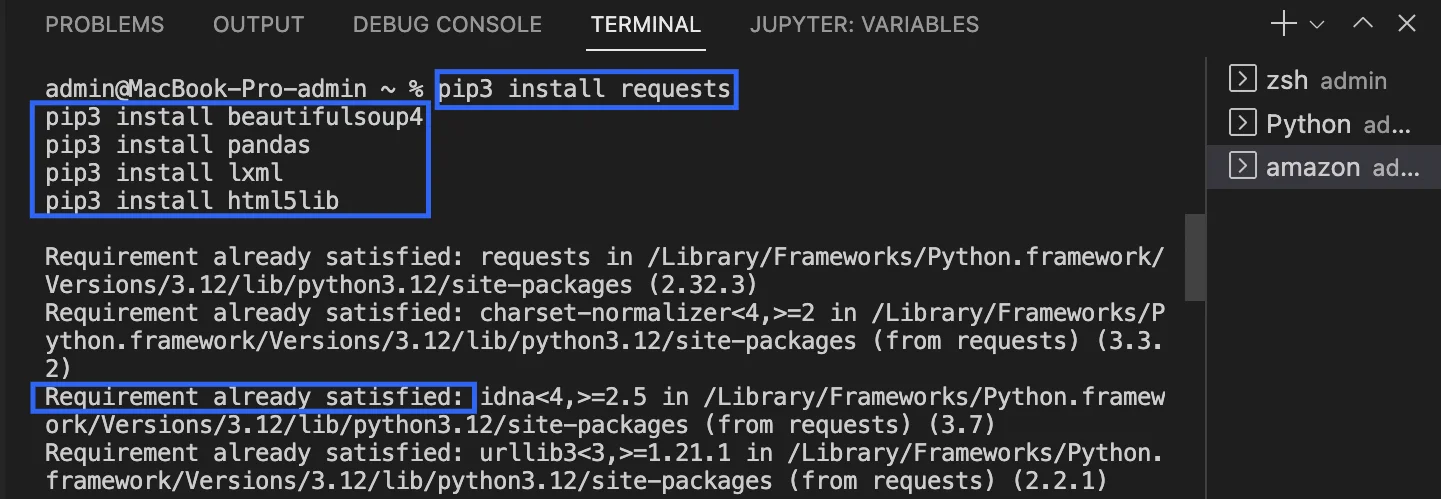

- Libraries: Requests, BeautifulSoup, lxml, and Pandas (optional)

Firstly, install pip with one of these commands:

python -m ensurepip

python3 -m ensurepip

Then open a terminal on your computer and install the Python libraries using:

pip3 install requests beautifulsoup4 lxml pandas

Press Enter, and you’ll see if they were installed. Here is the result you should receive:

*Why sometimes python3/pip3 instead of python/pip?

On some systems (especially macOS and Linux), python might still point to Python 2.x. To make sure you’re running Python 3, you may need to type python3 instead. Both commands work the same once you’re on Python 3; it just depends on how your system names the executable.

One more recommended step is to create a project folder with its own Python virtual environment by entering these commands in your terminal:

mkdir ebay-scraper

cd ebay-scraper

python -m venv env
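Before moving on, it can help to confirm that the interpreter and libraries are what you expect. Note that a virtual environment only takes effect once it is activated (`source env/bin/activate` on macOS and Linux, `env\Scripts\activate` on Windows), and packages need to be installed while it is active. The following is a minimal sketch, not part of the original setup: the helper name `check_environment` is hypothetical, and it uses only the standard library to report the Python version, whether a virtual environment is active, and which of the tutorial's packages cannot be imported.

```python
import importlib.util
import sys

# Import names of the packages installed earlier
# (the beautifulsoup4 package is imported as bs4)
REQUIRED_MODULES = ["requests", "bs4", "lxml", "pandas"]


def check_environment(modules=REQUIRED_MODULES):
    """Return a small report about the current Python setup."""
    return {
        "python_version": "{}.{}.{}".format(*sys.version_info[:3]),
        # Inside a venv, sys.prefix points at the environment while
        # sys.base_prefix still points at the base installation
        "in_virtualenv": sys.prefix != sys.base_prefix,
        # find_spec returns None when a top-level module cannot be found
        "missing": [m for m in modules if importlib.util.find_spec(m) is None],
    }


if __name__ == "__main__":
    report = check_environment()
    print("Python:", report["python_version"])
    print("Virtual environment active:", report["in_virtualenv"])
    print("Missing packages:", report["missing"] or "none")
```

If the report lists missing packages, rerun the pip command above with the environment activated.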

Next Steps

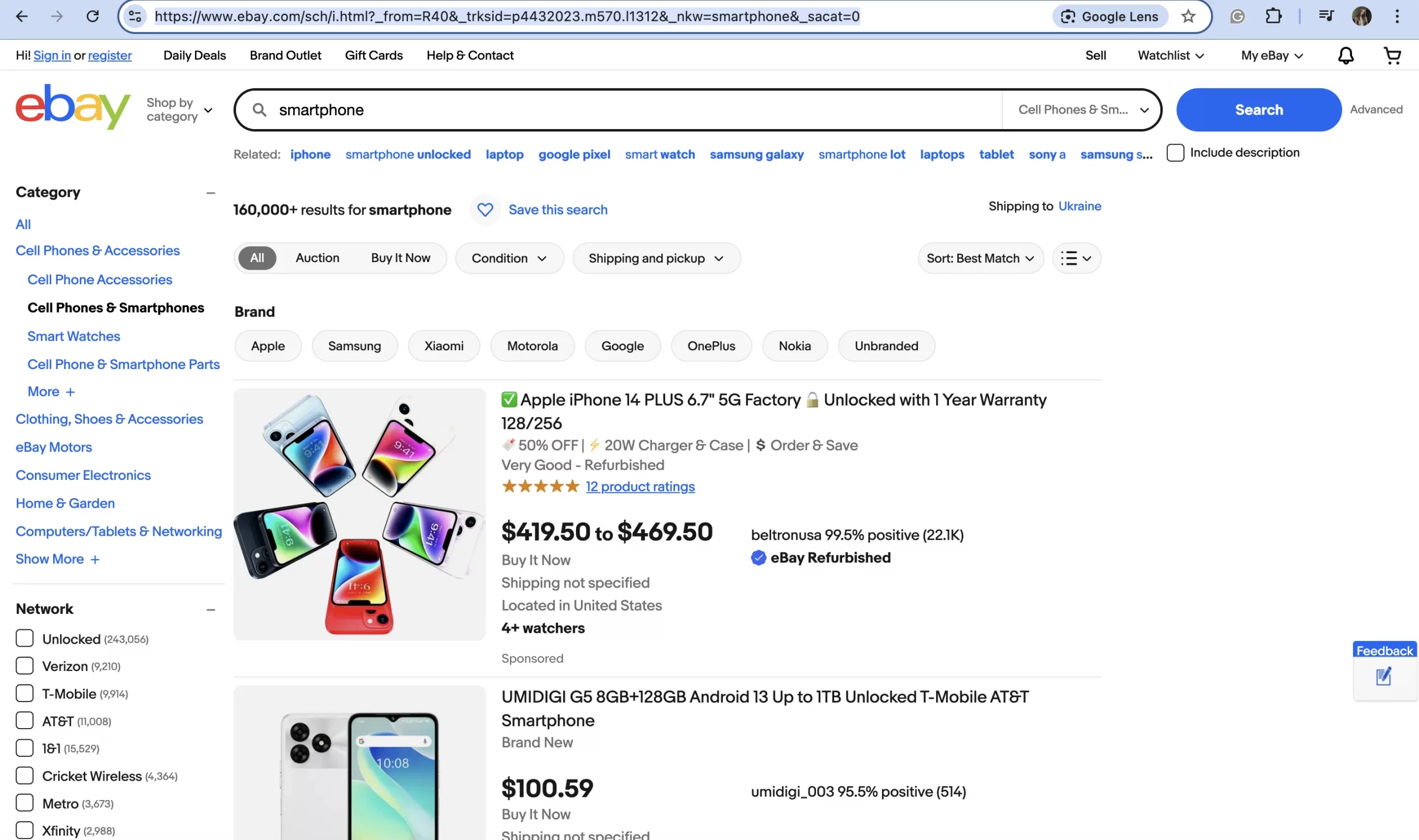

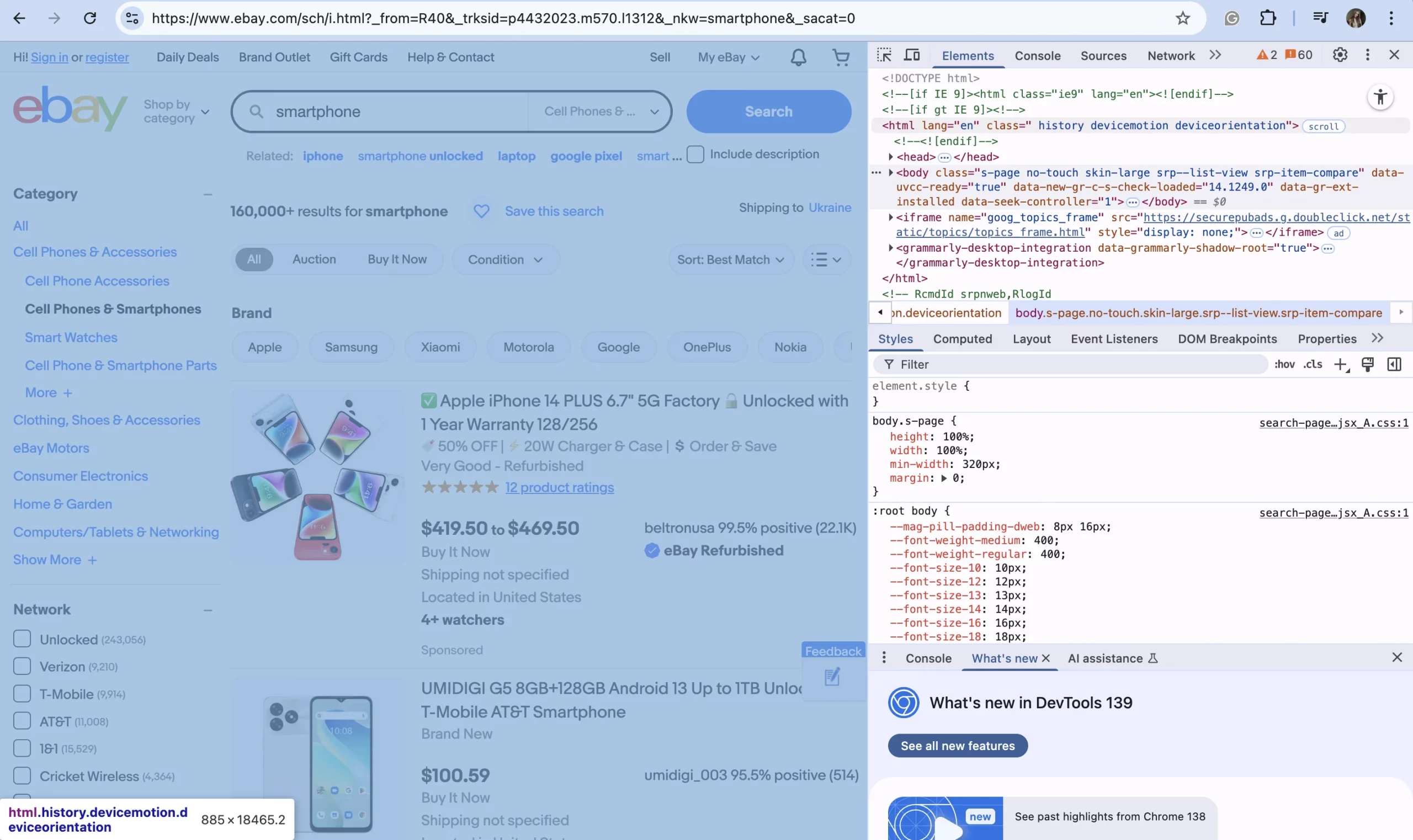

1. Search for a specific item on eBay. We’ll be looking for “smartphones”. If you type it in the search bar, you’ll see results like these:

2. Save the link for our code: https://www.ebay.com/sch/i.html?_from=R40&_trksid=p4432023.m570.l1312&_nkw=smartphone&_sacat=0

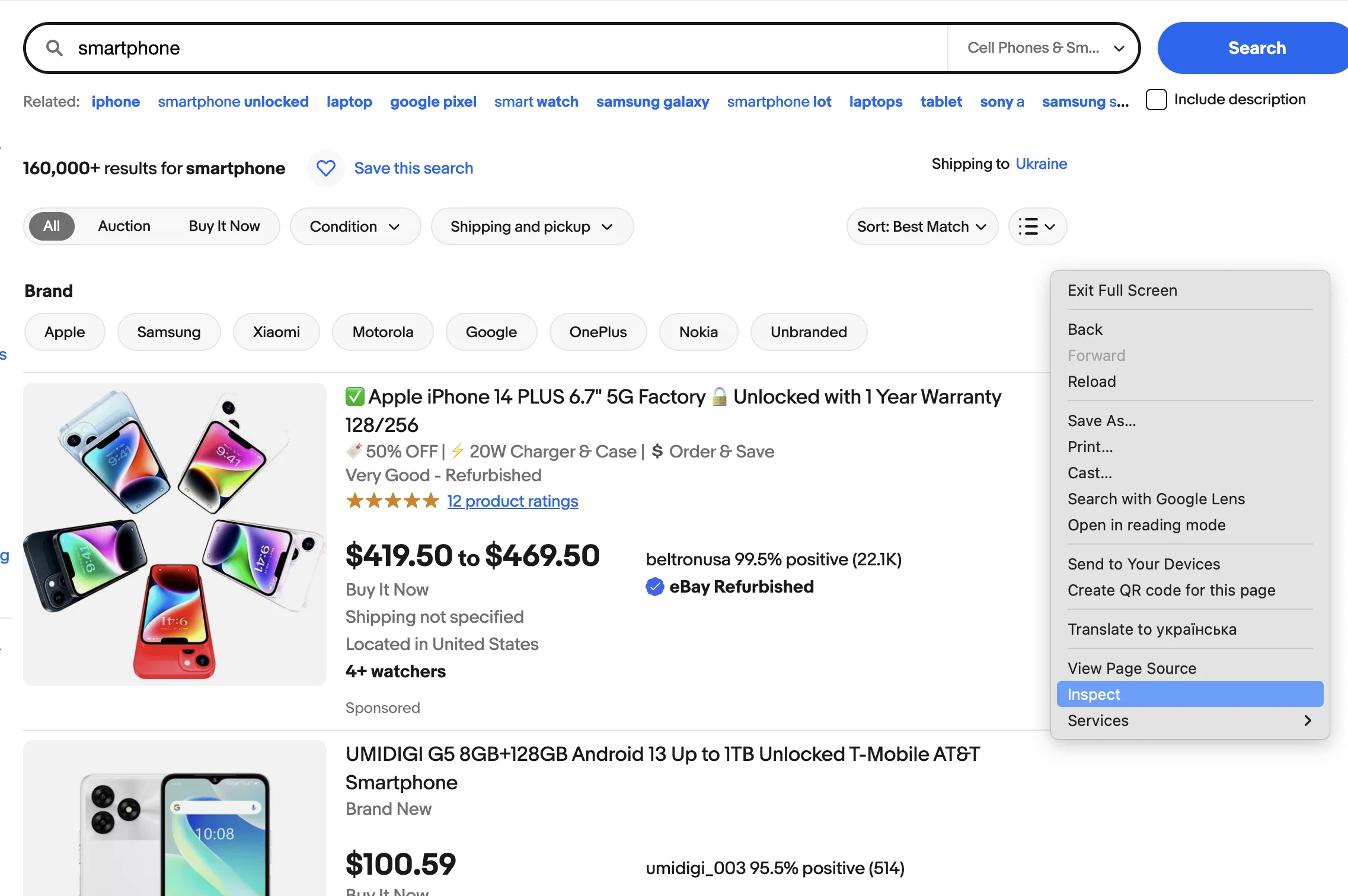

All the product info for the items you see can be extracted: for example, price, title, description, and image. But first, let’s take a closer look at the page’s HTML structure. Right-click the page and select ‘Inspect’ to open the Developer Tools. When you open the source code of the page, you’ll see that all products are listed as HTML list elements.

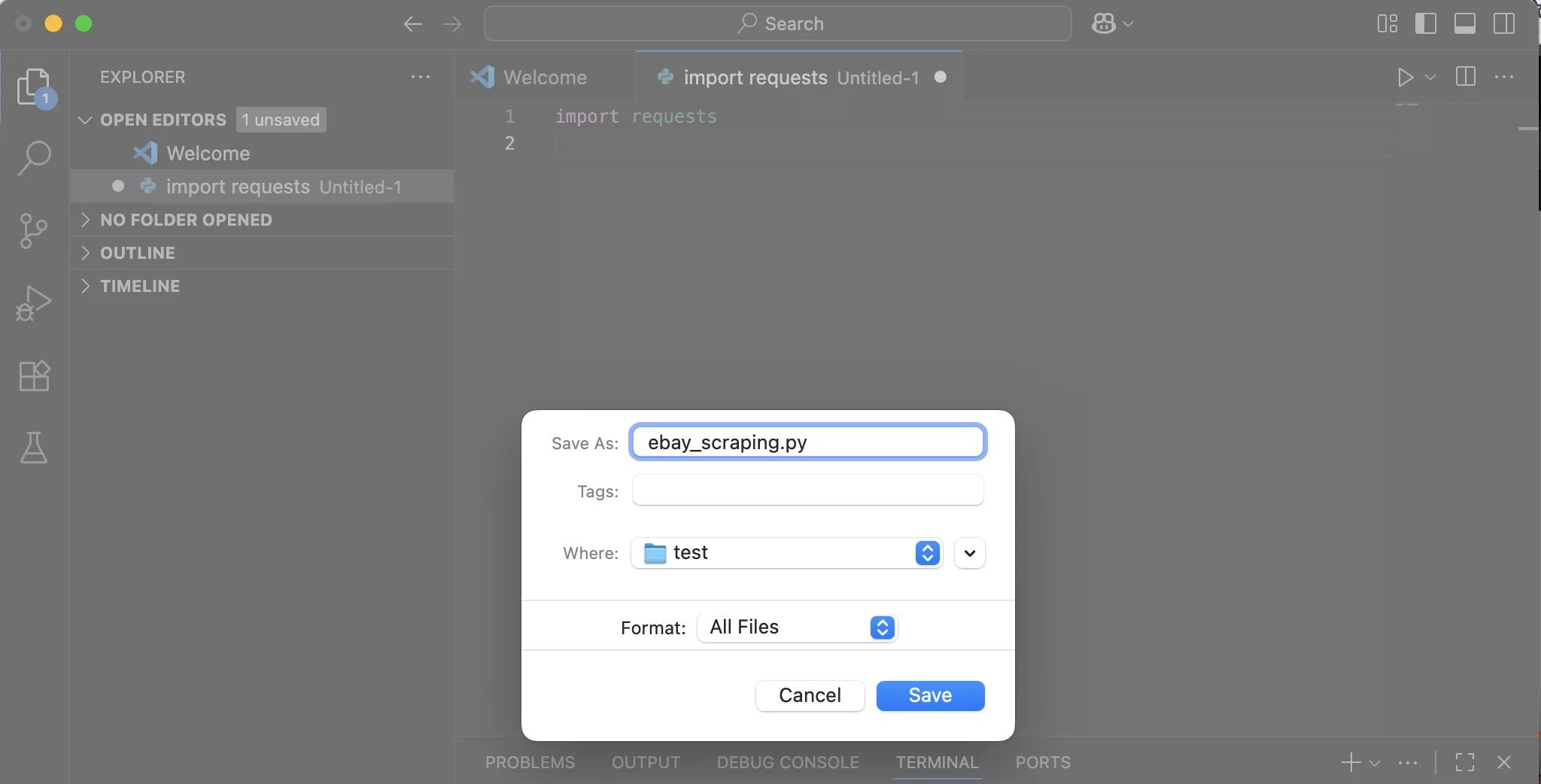

3. Open VS Code → File → New File → Save it as ebay_scraping.py.

Now let’s write our first piece of code. Enter it in the editor:

import csv

import requests
from bs4 import BeautifulSoup

url = "https://www.ebay.com/sch/i.html?_from=R40&_trksid=p4432023.m570.l1312&_nkw=smartphone&_sacat=0"

# Plain request: fetch the page and print the raw HTML that comes back
html = requests.get(url)
print(html.text)

# Repeat the request with browser-like headers
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36",
    "Accept-Language": "en-US, en;q=0.5",
}
html = requests.get(url, headers=headers)

# Parse the HTML and collect the product containers
soup = BeautifulSoup(html.text, "lxml")
listings = soup.find_all("div", class_="s-item__info clearfix")
images = soup.find_all("div", class_="s-item__wrapper clearfix")

Make sure to replace our sample URL with the one you want to scrape.
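Instead of copying a URL from the browser each time, the search URL can also be assembled from a keyword. The snippet below is a sketch under assumptions: the function name `build_search_url` is hypothetical, the parameter names `_nkw` and `_sacat` are taken from the sample URL, and it assumes the tracking parameters (`_from`, `_trksid`) can be left out without changing the results.

```python
from urllib.parse import urlencode

SEARCH_ENDPOINT = "https://www.ebay.com/sch/i.html"


def build_search_url(keyword, category=0):
    """Build an eBay search URL for the given keyword."""
    # _nkw carries the search keyword; _sacat appears to select the
    # category (0 in the sample URL). Both names come from the sample URL.
    params = {"_nkw": keyword, "_sacat": category}
    # urlencode escapes spaces and special characters in the keyword
    return f"{SEARCH_ENDPOINT}?{urlencode(params)}"


print(build_search_url("smartphone"))
# https://www.ebay.com/sch/i.html?_nkw=smartphone&_sacat=0
print(build_search_url("gaming laptop"))
# https://www.ebay.com/sch/i.html?_nkw=gaming+laptop&_sacat=0
```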

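4. Integrate proxies by inserting your proxy credentials for authentication. The proxy section can go before or after the headers, and the proxies must then be passed to the request. It looks like this:

proxy_user = "YOUR DATAIMPULSE PROXY LOGIN"
proxy_pass = "YOUR DATAIMPULSE PROXY PASSWORD"
proxy_host = "HOST:PORT"

# Build the proxy URL from the values above. The proxy itself is usually
# reached over http://, even for https targets; check your provider's details.
proxy_url = f"http://{proxy_user}:{proxy_pass}@{proxy_host}"
proxies = {
    "http": proxy_url,
    "https": proxy_url,
}

# Send the request through the proxy and print the status code
html = requests.get(url, headers=headers, proxies=proxies)
print(html.status_code)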

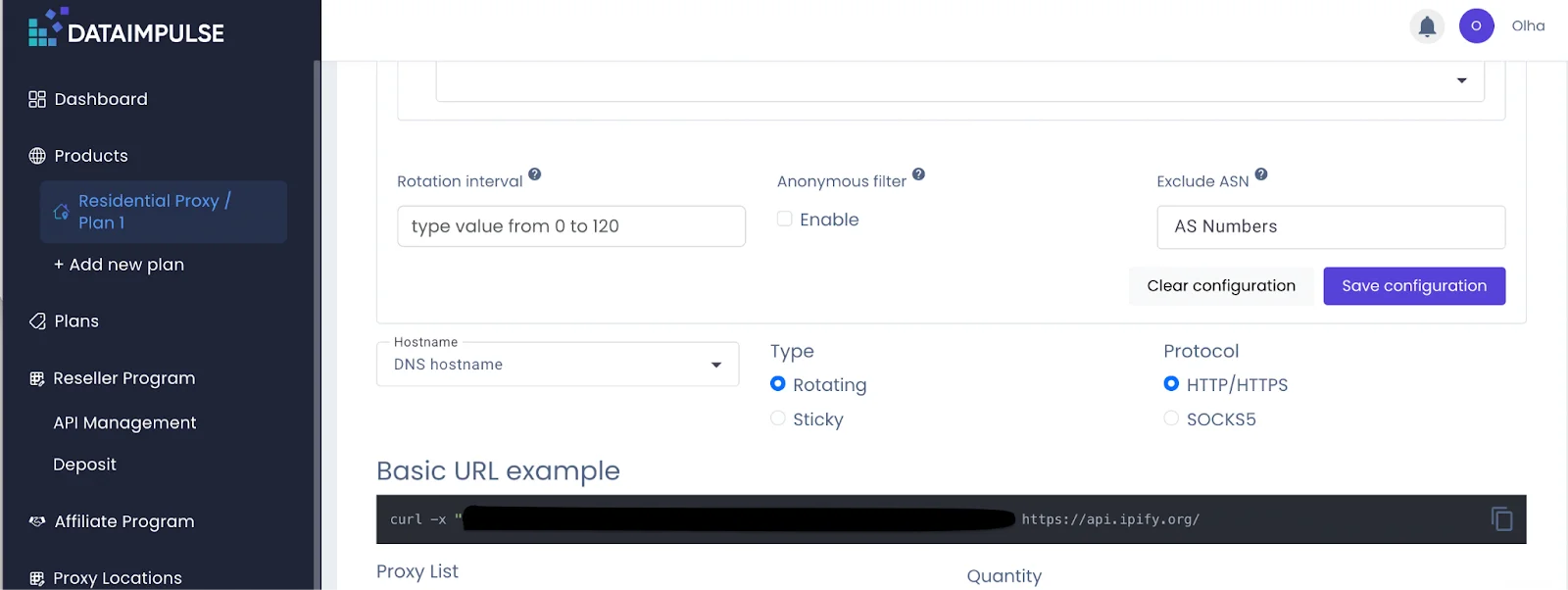

*Where can I find my proxy credentials? You can access all the necessary information in your DataImpulse dashboard, within the details of your selected plan.

You can also copy the right details under the Basic URL example section.

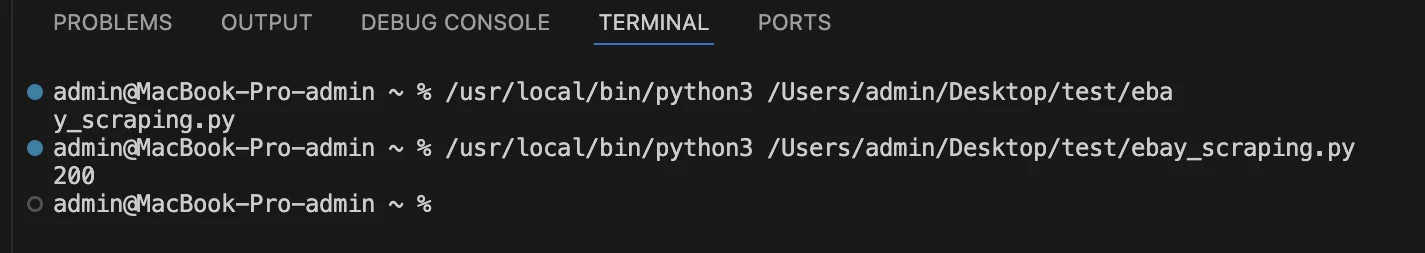

If your proxy is set up correctly, printing html.status_code should show 200 in the VS Code terminal.
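One detail worth handling is that credentials containing characters such as `@` or `:` would break the proxy URL unless they are percent-encoded. The sketch below shows one way to build the dictionary and check the status code. The helper names `build_proxies` and `proxy_status` are hypothetical, the sample password is made up, and it assumes the same `http://` proxy endpoint serves both HTTP and HTTPS targets, which is worth confirming in your dashboard.

```python
from urllib.parse import quote


def build_proxies(user, password, host_port):
    """Return a Requests-style proxies dict for an authenticated HTTP proxy."""
    # Percent-encode the credentials so characters such as "@" or ":" in a
    # password cannot be mistaken for URL separators
    proxy_url = f"http://{quote(user, safe='')}:{quote(password, safe='')}@{host_port}"
    # Assumption: one proxy endpoint handles both http and https targets
    return {"http": proxy_url, "https": proxy_url}


def proxy_status(proxies, test_url="https://www.ebay.com", session=None):
    """Fetch test_url through the proxy and return the HTTP status code."""
    if session is None:
        # Imported here so the helper can also be exercised with a stand-in session
        import requests
        session = requests.Session()
    response = session.get(test_url, proxies=proxies, timeout=15)
    return response.status_code


proxies = build_proxies("YOUR_LOGIN", "p@ss:word", "HOST:PORT")
print(proxies["https"])
# http://YOUR_LOGIN:p%40ss%3Aword@HOST:PORT
```

With real credentials in place, `print(proxy_status(proxies))` sends a request through the proxy and prints the status code.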

What is User-Agent?

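Headers are sent along with every HTTP request, and setting them carefully makes your request look like it’s coming from a real browser. This helps ensure smoother website interactions. The most important header is User-Agent, which tells the server which browser and operating system the request claims to come from.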

The Full Code for Scraping eBay

Now that our proxy is working, let’s put everything together and write a scraper that can pull actual product information from eBay. The goal here is to extract the most important details that any buyer would want to see: the product title, its price, status, and a direct link to the item. We’ll combine the Requests library with BeautifulSoup for parsing the HTML and extracting the data we need.

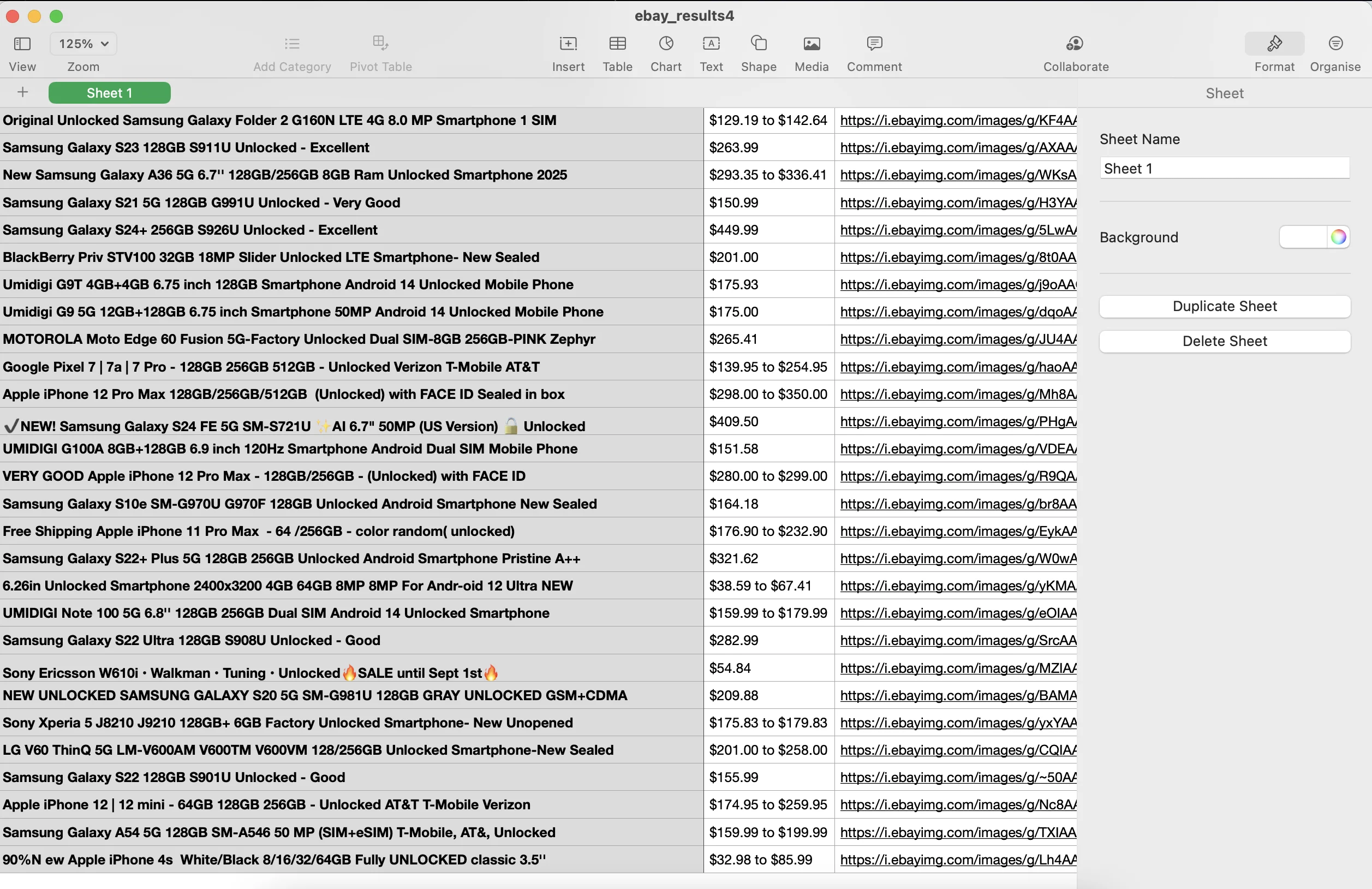

Final Step: Save Your Data

Once you’ve successfully scraped product information, the last step is to store it in a structured format to use later for analysis, comparison, or reporting. Two of the most common formats are CSV (Comma-Separated Values) and Excel spreadsheets. After extraction, we’ll save the data to a CSV file named ebay_results4.csv using Python’s csv.DictWriter, with the column names defined in fieldnames.

Here’s the full code with all the parts we’ve created before:

import csv

import requests
from bs4 import BeautifulSoup

url = "https://www.ebay.com/sch/i.html?_from=R40&_trksid=p4432023.m570.l1312&_nkw=smartphone&_sacat=0"

# Browser-like headers so the request is less likely to be filtered
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36",
    "Accept-Language": "en-US, en;q=0.5",
}

# --- Proxy configuration (with authentication) ---
proxy_user = "YOUR DATAIMPULSE PROXY LOGIN"
proxy_pass = "YOUR DATAIMPULSE PROXY PASSWORD"
proxy_host = "HOST:PORT"

# Build the proxy URL from the values above. The proxy itself is usually
# reached over http://, even for https targets; check your provider's details.
proxy_url = f"http://{proxy_user}:{proxy_pass}@{proxy_host}"
proxies = {
    "http": proxy_url,
    "https": proxy_url,
}

# Send the request through the proxy; 200 means the page was fetched successfully
html = requests.get(url, headers=headers, proxies=proxies, timeout=30)
print(html.status_code)

soup = BeautifulSoup(html.text, "lxml")
result_list = []

# Find all product items on the page
listings = soup.find_all("div", class_="s-item__info clearfix")
images = soup.find_all("div", class_="s-item__wrapper clearfix")

for listing, image_container in zip(listings, images):
    title = listing.find("div", class_="s-item__title")
    price = listing.find("span", class_="s-item__price")
    product_url = listing.find("a")
    product_status_element = listing.find("div", class_="s-item__subtitle")
    image = image_container.find("img")

    # Skip entries that lack a title, price, or link
    if title is None or price is None or product_url is None:
        continue

    result_dict = {
        "title": title.text.strip(),
        "price": price.text.strip(),
        "image_url": image.get("src", "") if image is not None else "",
        "status": (
            product_status_element.text.strip()
            if product_status_element is not None
            else "No status available"
        ),
        "link": product_url.get("href", ""),
    }
    result_list.append(result_dict)

# Write the result_list to a CSV file
with open("ebay_results4.csv", "w", newline="", encoding="utf-8") as csv_file:
    fieldnames = ["title", "price", "image_url", "status", "link"]
    writer = csv.DictWriter(csv_file, fieldnames=fieldnames)
    # Write the header row
    writer.writeheader()
    # Write the data rows
    writer.writerows(result_list)

# Print the result list
for result in result_list:
    print("Title:", result["title"])
    print("Price:", result["price"])
    print("Image URL:", result["image_url"])
    print("Status:", result["status"])
    print("Product Link:", result["link"])
    print("\n")

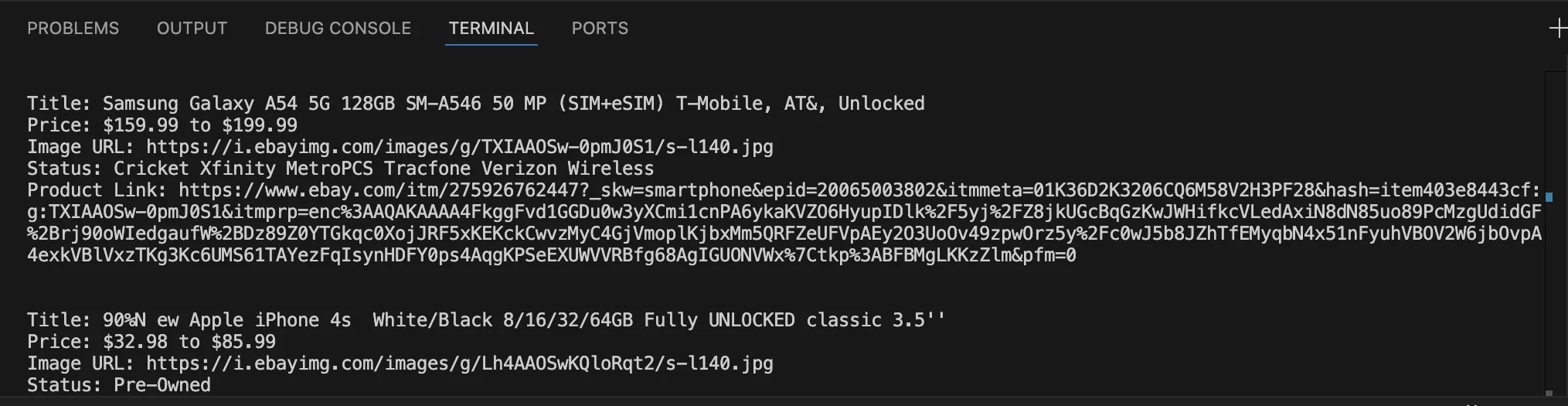

As a result, the VS Code terminal will display a list of smartphones along with their details and attributes.

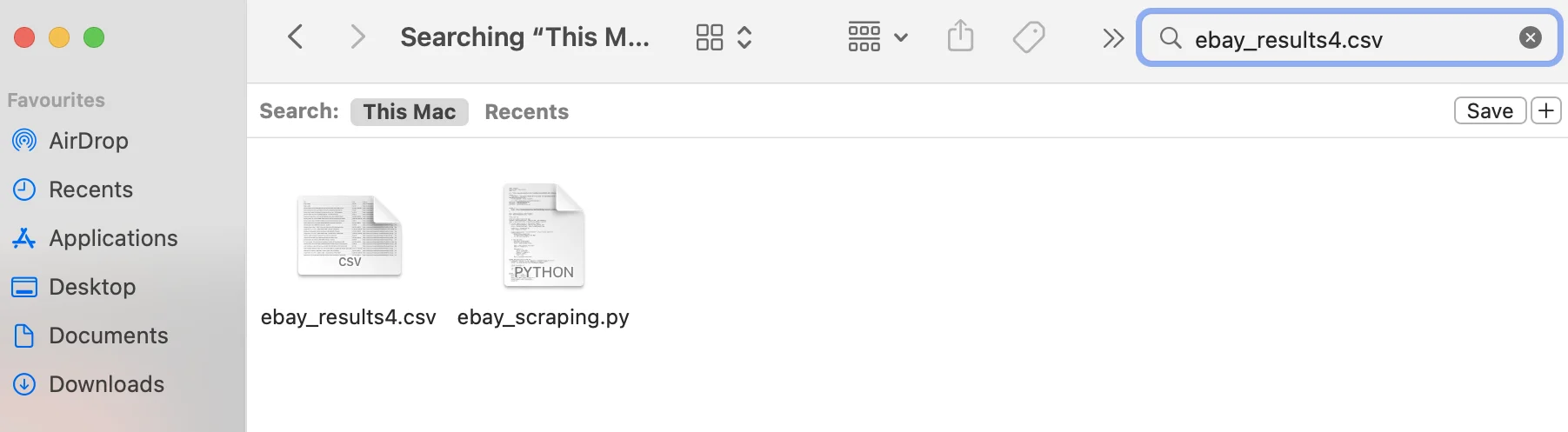

For easier access, locate the file named ebay_results4.csv, open it, and you’ll find all the structured data neatly organized. Pretty convenient, right?
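The prices in the CSV are stored as text, so comparing or averaging them requires converting them to numbers first. The helper below is a hedged sketch: `parse_price` is a hypothetical name, and it assumes US-style formatting (a dot for decimals, commas for thousands) and that a range such as "$10.00 to $20.00" should be reduced to its lower bound.

```python
import re

# A run of digits with optional thousands commas and an optional decimal part
_NUMBER = re.compile(r"\d[\d,]*(?:\.\d+)?")


def parse_price(price_text):
    """Return the first number in a price string as a float, or None."""
    match = _NUMBER.search(price_text)
    if match is None:
        return None
    # Drop thousands separators before converting
    return float(match.group().replace(",", ""))


for sample in ["$199.99", "$1,299.00", "$10.00 to $20.00", "Price unavailable"]:
    print(sample, "->", parse_price(sample))
# $199.99 -> 199.99
# $1,299.00 -> 1299.0
# $10.00 to $20.00 -> 10.0
# Price unavailable -> None
```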

Challenges You May Face

When you’re just starting out with scraping sites like eBay or Amazon, you might run into some challenges you didn’t expect. These platforms are designed to protect their data, so beginners often face obstacles that can make scraping a bit difficult.

- CAPTCHA

If you send too many requests simultaneously, a website can detect unusual traffic, and you may get a CAPTCHA. These tests can stop your scraper. Rotating proxies makes your activity look more natural.

- Pagination

Most online marketplaces display products across multiple pages. Scraping just one page won’t give you the full dataset you need. To handle this, your scraper should be able to follow the “next page” links or adjust the page number in the URL automatically.

- Rate Limiting

Too many requests at once can get you blocked. Slow down your scraper with short time.sleep() intervals to mimic human browsing and prevent access denials.

- User-Agent Headers

Default headers from libraries like Requests look suspicious. Rotate realistic User-Agent strings so your scraper appears more human and is less likely to be filtered.
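Three of the points above (pagination, rate limiting, and User-Agent rotation) can be sketched with the standard library alone. Everything named here is an assumption for illustration: the helper names are hypothetical, the User-Agent strings are example values, and `_pgn` is assumed to be the page-number parameter in eBay search URLs, so verify it against the URL your browser shows on page 2.

```python
import random
import time
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Example User-Agent strings to rotate through (illustrative values)
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:109.0) Gecko/20100101 Firefox/115.0",
]


def page_url(base_url, page):
    """Return base_url with its page-number parameter set to `page`."""
    parts = urlsplit(base_url)
    query = dict(parse_qsl(parts.query))
    query["_pgn"] = str(page)  # assumed name of the page-number parameter
    return urlunsplit(parts._replace(query=urlencode(query)))


def random_headers():
    """Pick a User-Agent at random for the next request."""
    return {
        "User-Agent": random.choice(USER_AGENTS),
        "Accept-Language": "en-US, en;q=0.5",
    }


def polite_pause(min_seconds=1.0, max_seconds=3.0, sleep=time.sleep):
    """Sleep for a random interval and return the delay that was used."""
    delay = random.uniform(min_seconds, max_seconds)
    sleep(delay)
    return delay


base = "https://www.ebay.com/sch/i.html?_nkw=smartphone&_sacat=0"
for page in range(1, 4):
    url = page_url(base, page)
    headers = random_headers()
    print(url)
    # A real scraper would call requests.get(url, headers=headers) here,
    # followed by polite_pause() before moving on to the next page
```

Randomizing the delay, rather than sleeping for a fixed interval, avoids a perfectly regular request pattern.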

Conclusion

In this tutorial, we covered how to scrape product data from eBay: sending requests, parsing product details, and exporting results to CSV. For production use, always respect eBay’s terms of service, implement proxies to reduce blocking, and add proper error handling with retries for stability. By applying these practices, you’ll build a scraper that’s both efficient and resilient.
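As an illustration of the error handling with retries mentioned above, here is a minimal sketch of a retry loop with exponential backoff. The function name `fetch_with_retries` and its parameters are hypothetical; `fetch` can be any callable that performs one request and raises an exception on failure.

```python
import time


def fetch_with_retries(fetch, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch() until it succeeds or attempts run out, doubling the wait each time."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fetch()
        except Exception as error:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            wait = base_delay * (2 ** attempt)
            print(f"Attempt {attempt + 1} failed ({error}); retrying in {wait:.1f}s")
            sleep(wait)
```

With Requests, `fetch` could be a small function that calls `requests.get(...)` and then `response.raise_for_status()`, so that HTTP error codes also count as failures and trigger a retry.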

*This tutorial was created only for educational purposes.