
The difference between a sluggish system and a responsive one often comes down to where you keep your data. It’s not an exaggeration to say that storage placement also affects your digital security and how well your records are preserved over time. Splitting data into hot and cold tiers reduces strain on your hardware and keeps critical information within immediate reach.

In this article, you will learn how to assess the relevance of your data and which storage technologies suit each stage of your data’s life.

The answer lies in the temperature…

In data storage, “temperature” describes how often a piece of information is accessed and how relevant it is to your day-to-day operations. Because of this, it’s essential to classify all information according to its necessity, relevance, and frequency of use.

In data analytics, hot data refers to new and active data, while cold data refers to older information that is rarely accessed. This distinction influences cost, speed, infrastructure, and long-term storage strategy.

Hot data is information that needs to be accessed immediately: transactions, user interactions, sensor readings, operational dashboards, and other real-time workloads. Speed is the priority here, so hot data is typically stored on SSDs (Solid-State Drives), which deliver high transaction rates and low latency. HDDs (Hard Disk Drives) may also be used for hot data that sees frequent read/write cycles, but SSDs are preferred when requests must be handled quickly. The trade-off is cost: SSDs are more expensive per gigabyte than HDDs, so decide what matters more to you, price or performance.

Where is it stored? Hot data is often kept in edge-storage configurations, closer to end users, to reduce latency. Because these environments tend to be actively monitored and well protected, hot data is generally less exposed to unnoticed attacks than data sitting in long-term, less frequently monitored systems. Hot data powers daily business decisions. Losing access to it or storing it incorrectly can slow applications, limit analytics, and negatively impact the user experience.

Cold data is information that is rarely accessed but must be retained for compliance, record-keeping, or future reference: archived projects, legal documents, HR records, financial statements, research files, and historical analytics data. Since cold data doesn’t require high-speed retrieval, it can be kept on low-cost storage systems.

Where is it stored? On slower hard drives or magnetic tapes, which offer large capacity at low cost and are well suited to long-term retention, disaster recovery, and compliance. Access speeds are significantly lower, and data may be retrieved only occasionally, or not at all for years. Cold storage systems are chosen for durability and affordability rather than performance.

Hot vs Cold Storage: Side-by-side

| Aspect | Hot Storage | Cold Storage |
| --- | --- | --- |
| Access Frequency | Frequent | Rare |
| Performance | Low latency, fast retrieval | High latency, slow retrieval |
| Storage Medium | SSDs (preferred), sometimes high-speed HDDs | Standard HDDs, magnetic tapes, low-cost cloud tiers |
| Cost | Expensive per GB | Budget-friendly per GB |
| Location | Edge storage or cloud regions close to users | Centralized archives or low-access cloud regions |
| Security | High | Medium |
| Use Cases | Transactional databases, dashboards, IoT data, user sessions | Legal archives, compliance records, backups, historical analytics |
| Cloud Services* | AWS S3 Standard and EBS SSD, Google Cloud Standard Storage, Azure Hot Blob Storage | AWS Glacier Deep Archive, Google Cloud Archive Storage, Azure Archive Tier |

*For hot data, cloud providers focus on speed and frequent access. AWS S3 Standard and EBS SSD, Google Cloud Standard Storage, and Azure Hot Blob Storage are aimed at real-time workloads, transactional databases, dashboards, and active user data. Prices vary by region and usage, so check the AWS Pricing Calculator, Google Cloud Pricing Calculator, and Azure Pricing Calculator for the latest rates. For cold data, the priority shifts to cost efficiency and long-term retention. Services like AWS Glacier Deep Archive, Google Cloud Archive Storage, and Azure Archive Tier are built for infrequently accessed data. Fees differ by provider, so consult the same calculators to estimate costs based on your region and usage patterns.
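To get a feel for how usage patterns change the comparison before opening a pricing calculator, a rough monthly estimate can be sketched in a few lines of Python. This is only an illustration: the function name `monthly_cost` and both price tables are hypothetical, and the per-GB figures are placeholders rather than real provider rates. The sketch also assumes that the cold tier charges a per-GB retrieval fee, which is common for archive services but should be confirmed with your provider.

```python
def monthly_cost(stored_gb: float, retrieved_gb: float,
                 storage_price: float, retrieval_price: float) -> float:
    """Storage charge plus retrieval charge for one month."""
    return stored_gb * storage_price + retrieved_gb * retrieval_price


# PLACEHOLDER per-GB prices for illustration only; these are NOT real rates.
# Replace them with figures from your provider's pricing calculator.
HOT_TIER = {"storage_price": 0.020, "retrieval_price": 0.000}
COLD_TIER = {"storage_price": 0.002, "retrieval_price": 0.030}

stored_gb = 10_000
for retrieved_gb in (0, 1_000, 10_000):
    hot = monthly_cost(stored_gb, retrieved_gb, **HOT_TIER)
    cold = monthly_cost(stored_gb, retrieved_gb, **COLD_TIER)
    cheaper = "cold" if cold < hot else "hot"
    print(f"retrieved {retrieved_gb:>6} GB: hot={hot:.2f} cold={cold:.2f} -> {cheaper}")
```

With these placeholder numbers, the cold tier is cheaper while data is rarely read, and the hot tier becomes cheaper once the whole dataset is retrieved every month. The real break-even point depends entirely on the actual rates for your region.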

Cold, hot… how about warm?

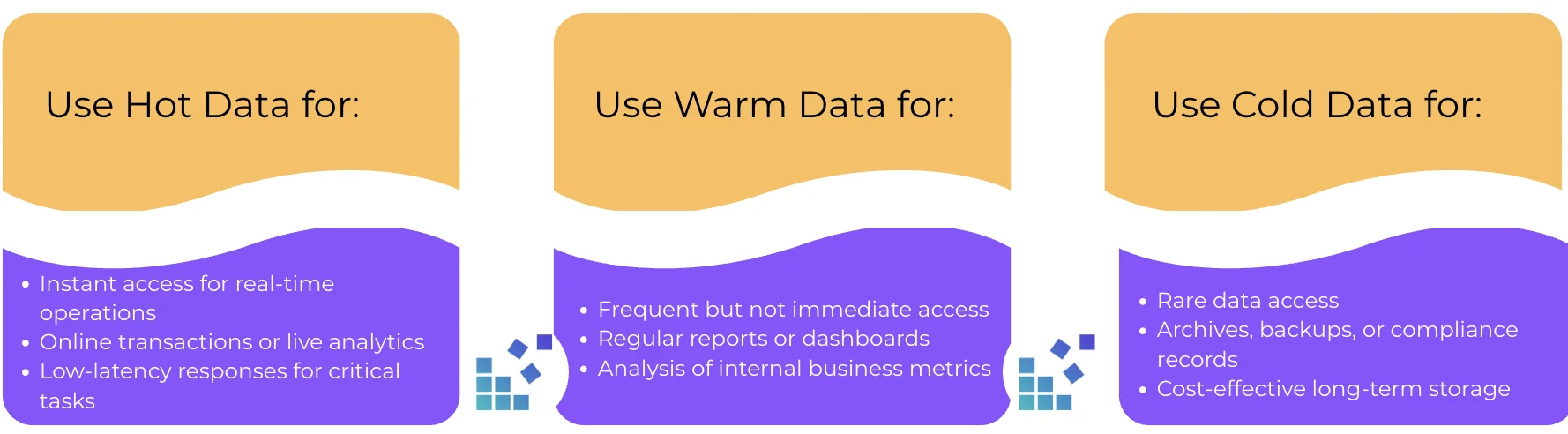

Not all data is either “hot” or “cold”. Between these two extremes sits the middle layer of a tiered storage architecture: warm data. This is information that isn’t needed instantly but still requires reasonably quick access. Warm data is typically accessed periodically: more often than cold archives but less frequently than hot datasets. Examples include recently created analytics reports, logs, customer data, media, and any files that are viewed occasionally and remain relevant for weeks or months. Warm storage therefore aims to balance speed and affordability.

Where is it stored? In mid-tier cloud storage, where retrieval is quick but not instant (e.g., Amazon S3 Standard-Infrequent Access, Azure Blob Storage cool tier). Hybrid SSD/HDD systems are also commonly used, since they offer better read speeds than cold storage at a lower cost than all-SSD hot storage. This middle tier ensures that high-performance storage isn’t wasted on rarely accessed information, while still keeping important data within quick reach when needed.
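One simple way to put the three temperatures into practice is to classify each dataset by how long it has gone without being accessed. The sketch below is a minimal illustration, not a prescribed method: the `Dataset` class, the `classify_tier` function, the sample dataset names, and the 7-day and 90-day thresholds are all assumptions chosen for the example and should be tuned to your own access patterns.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical thresholds; adjust them to match your own workloads.
HOT_MAX_IDLE = timedelta(days=7)
WARM_MAX_IDLE = timedelta(days=90)


@dataclass
class Dataset:
    name: str
    last_accessed: datetime


def classify_tier(dataset: Dataset, now: datetime) -> str:
    """Return 'hot', 'warm', or 'cold' based on time since last access."""
    idle = now - dataset.last_accessed
    if idle <= HOT_MAX_IDLE:
        return "hot"
    if idle <= WARM_MAX_IDLE:
        return "warm"
    return "cold"


# Illustrative datasets with made-up names and access times.
now = datetime(2025, 1, 1)
datasets = [
    Dataset("user_sessions", now - timedelta(hours=2)),
    Dataset("q3_analytics_report", now - timedelta(days=30)),
    Dataset("hr_records_2015", now - timedelta(days=900)),
]

tiers = {d.name: classify_tier(d, now) for d in datasets}
print(tiers)
```

Recency alone is a blunt signal. In practice you may also want to weigh access frequency and compliance requirements, as discussed above, before moving anything to a slower tier.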

Recommendations for managing data

- Structure data by usage and relevance

Identify which datasets are hot, warm, or cold. Hot data should be stored on fast SSD storage or edge servers, warm data can reside on mid-tier storage, and cold (archival) data should use low-cost HDDs or cloud archive services.

- Implement lifecycle policies

Automatically move data between tiers as access patterns change. The major cloud providers (AWS, GCP, Azure) support automated lifecycle rules that handle these tier transitions for you.

- Balance cost, performance, and security

- Use proxies for safe data access

When scraping, aggregating, or analyzing data from external sources, proxies, especially residential ones, help protect your systems and preserve anonymity. They provide safer access to large datasets and reduce IP-related service disruptions, so the hot layer keeps receiving fresh data without interruption.

- Plan for scalability and disaster recovery

Regularly replicate critical data and ensure backups exist across multiple regions. Hot and warm data may require active replication, while cold archives can rely on long-term, immutable storage.

- Monitor and optimize regularly
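As an illustration of the lifecycle-policy recommendation above, the following sketch builds a rule in the shape that Amazon S3 lifecycle configurations use: objects under a prefix move to a warm class after a set number of days, then to an archive class later. The helper name `build_lifecycle_rule`, the `logs/` prefix, and the 30-day and 180-day thresholds are assumptions made for this example. Other providers use different formats, so treat it as a starting point rather than a ready-made policy.

```python
import json


def build_lifecycle_rule(prefix: str, warm_after_days: int, cold_after_days: int) -> dict:
    """Build one S3-style lifecycle rule that tiers objects down over time."""
    if not 0 < warm_after_days < cold_after_days:
        raise ValueError("expected 0 < warm_after_days < cold_after_days")
    return {
        "ID": f"tier-down-{prefix.strip('/') or 'all'}",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            # Hot -> warm: S3 Standard-Infrequent Access
            {"Days": warm_after_days, "StorageClass": "STANDARD_IA"},
            # Warm -> cold: S3 Glacier Deep Archive
            {"Days": cold_after_days, "StorageClass": "DEEP_ARCHIVE"},
        ],
    }


# Hypothetical prefix and thresholds, chosen only for the example.
config = {"Rules": [build_lifecycle_rule("logs/", 30, 180)]}
print(json.dumps(config, indent=2))

# With boto3 installed and credentials configured, a dict like this could be
# passed as the LifecycleConfiguration argument of the S3 client's
# put_bucket_lifecycle_configuration call for a bucket you own.
```

The code only constructs and prints the configuration; nothing is sent to a cloud account. Review the thresholds against your retention and compliance requirements before applying a rule like this to real data.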